MoviePass brings back its unlimited movie plan, with a limited-time price of $9.95

MoviePass is bringing back a version of the plan that made it so popular in the first place — a subscription where you pay a monthly fee and get an unlimited number of 2D movie tickets.

MoviePass Uncapped will have a regular price of $19.95 per month, but the company is offering cheaper deals for what it says is a limited time. If you’re willing to pay for a full year (via ACH payment), it will cost the same as that original unlimited plan, namely $9.95 per month. If you don’t want to make a full-year commitment, it will cost $14.95 per month.
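To make the three pricing tiers concrete, here is a quick back-of-the-envelope comparison of what a year of each option would cost. It assumes each tier is simply twelve payments at the quoted monthly rate; the announcement does not spell out taxes, fees or exactly how the annual ACH charge is collected, so none of that is modeled.

```python
# Back-of-the-envelope comparison of MoviePass Uncapped pricing tiers.
# Assumption: each tier is just 12 payments of the quoted monthly rate;
# taxes, fees and the exact ACH billing schedule are not modeled.
from decimal import Decimal

MONTHS_PER_YEAR = 12

# Monthly prices quoted in the announcement, in US dollars.
TIERS = {
    "regular": Decimal("19.95"),
    "promo_month_to_month": Decimal("14.95"),
    "promo_annual_ach": Decimal("9.95"),
}


def yearly_cost(monthly_price: Decimal) -> Decimal:
    """Total cost of twelve months at a fixed monthly price."""
    return monthly_price * MONTHS_PER_YEAR


def savings_vs_regular(tier: str) -> Decimal:
    """How much a promotional tier saves over a year versus the regular price."""
    return yearly_cost(TIERS["regular"]) - yearly_cost(TIERS[tier])


if __name__ == "__main__":
    for name, price in TIERS.items():
        print(f"{name}: ${yearly_cost(price)} per year")
```

Under those assumptions, the annual plan works out to $119.40 for the year, versus $179.40 month to month and $239.40 at the regular price.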

Now, you may be thinking that this kind of deal is exactly what got MoviePass into so much trouble last year, to the point where it nearly ran out of money and began announcing new pricing plans and restrictions on a seemingly constant basis.

However, the company’s announcement today includes multiple references to its ability to “combat violations” of MoviePass’ terms of use. And those terms do say that “MoviePass has the right to limit the selection of movies and/or the times of available movies should your individual use adversely impact MoviePass’s system-wide capacity or the availability of the Service for other subscribers.”

So if you’re a heavy MoviePass user, the plan may not be truly unlimited.

In addition, you’ll only be able to reserve tickets three hours before showtime, and you’ll need to check in to the theater between 10 and 30 minutes before the movie starts.
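The two timing rules can be expressed as simple window checks. This is a hypothetical sketch, not MoviePass code: it reads "reserve tickets three hours before showtime" as meaning reservations open no earlier than three hours ahead, treats both windows as inclusive at their edges, and the function names are illustrative.

```python
# Hypothetical sketch of the MoviePass Uncapped timing rules as described:
# reservations open three hours before showtime, and check-in must happen
# between 30 and 10 minutes before the movie starts.
# Assumption: both windows are inclusive; MoviePass's real edge-case
# handling is not public.
from datetime import datetime, timedelta

RESERVATION_OPENS_BEFORE = timedelta(hours=3)
CHECKIN_OPENS_BEFORE = timedelta(minutes=30)
CHECKIN_CLOSES_BEFORE = timedelta(minutes=10)


def can_reserve(now: datetime, showtime: datetime) -> bool:
    """True if `now` is within the three hours leading up to showtime."""
    return showtime - RESERVATION_OPENS_BEFORE <= now <= showtime


def can_check_in(now: datetime, showtime: datetime) -> bool:
    """True if `now` is between 30 and 10 minutes before showtime."""
    return showtime - CHECKIN_OPENS_BEFORE <= now <= showtime - CHECKIN_CLOSES_BEFORE
```

For a 7PM show, that means booking no earlier than 4PM and checking in between 6:30PM and 6:50PM.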

This new plan replaces the ones announced in December. If you’ve already signed up, you can stick with those subscriptions, but new users won’t have that option.

In a statement, Ted Farnsworth, CEO of MoviePass parent company Helios and Matheson Analytics, said:

We are – and have been – listening to our subscribers every day, and we understand that an uncapped subscription plan at the $9.95 price point is the most appealing option to our subscribers. While we’ve had to modify our service a number of times in order to continue delivering a movie-going experience to our subscribers, with this new offering we are doing everything we can to bring people a version of the service that originally won their hearts.

Powered by WPeMatico

AI has become table stakes in sales, customer service and marketing software

Artificial intelligence and machine learning have become essential if you are selling sales, customer service and marketing software, especially to large enterprises. The biggest vendors, from Adobe to Salesforce to Microsoft to Oracle, are jockeying for position to bring automation and intelligence to these areas.

Just today, Oracle announced several new AI features in its sales tools suite and Salesforce did the same in its customer service cloud. Both companies are building on artificial intelligence underpinnings that have been in place for several years.

All of these companies want to help their customers achieve their business goals by using increasing levels of automation and intelligence. Paul Greenberg, managing principal at The 56 Group, who has written multiple books about the CRM industry, including CRM at the Speed of Light, says that while AI has been around for many years, it’s just now reaching a level of maturity to be of value for more businesses.

“The investments in the constant improvement of AI by companies like Oracle, Microsoft and Salesforce are substantial enough to both indicate that AI has become part of what they have to offer — not an optional [feature] — and that the demand is high for AI from companies that are large and complex to help them deal with varying needs at scale, as well as smaller companies who are using it to solve customer service issues or minimize service query responses with chatbots,” Greenberg explained.

This suggests that injecting intelligence into applications can help level the playing field for companies of all sizes, allowing smaller ones to behave as if they were much larger and larger ones to do more than they could before, all thanks to AI.

The machine learning side of the equation allows these algorithms to see patterns that would be hard for humans to pick out of the mountains of data being generated by companies of all sizes today. In fact, Greenberg says that AI has improved enough in recent years that it has gone from predictive to prescriptive, meaning it can suggest the prospect to call that is most likely to result in a sale, or the best combination of offers to construct a successful marketing campaign.

Brent Leary, principal at CRM Essentials, says that AI, especially when voice is involved, can make software tools easier to use and increase engagement. “If sales professionals are able to use natural language to interact with CRM, as opposed to typing and clicking, that’s a huge barrier to adoption that begins to crumble. And making it easier and more efficient to use these apps should mean more data enters the system, which result in quicker, more relevant AI-driven insights,” he said.

All of this shows that AI has become an essential part of these software tools, which is why all of the major players in this space have built AI into their platforms. In an interview last year at the Adobe Summit, Adobe CTO Abhay Parasnis had this to say about AI: “AI will be the single most transformational force in technology,” he told TechCrunch. He appears to be right. It has certainly been transformative in sales, customer service and marketing.


Trello aims for the enterprise

Trello, Atlassian’s project management tool, is doubling down on its efforts to become a better service for managing projects at work. To do so, the team is launching thirteen new features in Trello Enterprise today, making this one of the company’s biggest feature releases since the launch of the enterprise version in 2015.

As the company also announced today, one million teams now actively use the service.

Most of these new features are for paying users, but even Trello’s free users are getting access to a few new goodies. In return, though, Trello is taking away the ability of free Teams users (not regular users outside of a team) to create an unlimited number of boards. Going forward, they can only have 10 boards open at any given time. Free teams that already use more than 10 boards can keep using them but will have to subscribe to a paid plan to add more. To ease the transition, Trello will let existing free teams add up to 10 additional boards until May 1, 2019, and they’ll be able to keep them going forward.

“We’re making this change to accelerate our ability to bring world-class business features to market, and Trello Business Class and Enterprise will get more useful and powerful to address our customers’ pain points in the workplace,” Trello co-founder and head Michael Pryor writes in today’s announcement. And to bring those features to market, it surely helps to convert a few more free users into paying ones.

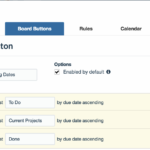

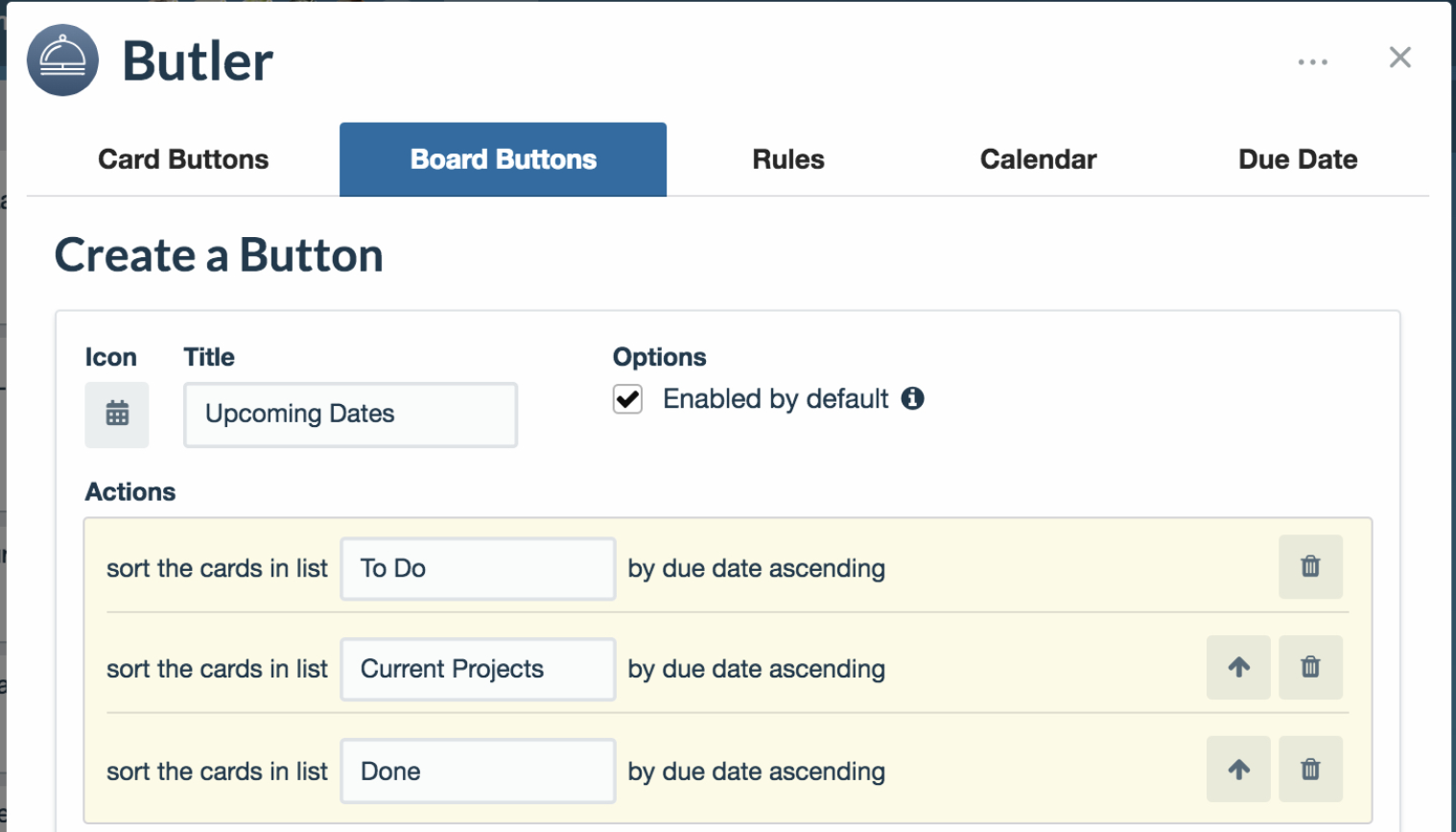

One of the main new feature announcements here is that the Power-Up Butler is now available for free, for both paying and free users (though with some limitations if you aren’t on a subscription plan). Power-Up Butler is an automation extension for Trello that the company acquired in December. It makes it easier to automate workflows and other repetitive tasks in Trello — and that’s clearly something the service’s enterprise users were asking for.

With this update, Trello is also getting a new board visibility setting beyond ‘private,’ ‘team’ and ‘public.’ This new setting, ‘organization,’ lets you share a board with the entire company, including people who are not on a particular team. Until now, there was no good way to do that, and making a board public was obviously not an option for many companies.

Since IT admins love nothing more than access controls, the new version of Trello Enterprise also features a lot of new ways for them to create visibility controls, membership restrictions, board creation restrictions and more. Admins now also get tools to enforce the use of single sign-on solutions and new ways to manage public boards and users, as well as which power-up extensions employees can use.

The company also today announced that it has received SOX and SOC2 Type 1 compliance.


Teams, Microsoft’s Slack competitor, says it’s signed up over 500k organizations, adds whiteboard and live events support

Microsoft Teams, the collaboration platform Microsoft built to complement its Office 365 suite of productivity apps for workers (and to help keep those workers within its own ecosystem), is hitting a milestone on its second birthday.

Today, the company announced that over 500,000 organizations are now using Teams. The company is not spelling out what that works out to in total users but notes that 150 of them have more than 10,000 users apiece, putting its total user numbers well over 1.5 million.

Alongside this, Microsoft also announced a number of new features that will be coming to Teams as it works on native integrations of more of Microsoft’s own tools to give Teams more functionality and more relevance for a wider range of use cases.

“The rigid hierarchy of the workplace has evolved, and environments are now about inclusivity and transparency,” said Lori Wright, General Manager of Workplace Collaboration at Microsoft, in an interview. “We see these trends playing out all over the world, and this is giving rise to new forms of technology.”

The new features indeed speak to that trend of inclusivity and of making platforms more personalised to users. They include customized backgrounds and support for cameras that capture content, bringing new ways of interacting in Teams beyond text. That will be explored further with the eventual integration of Microsoft Whiteboard, which will let people create hand-written presentations and bring them into the system.

For people who are hearing-impaired or otherwise cannot use or hear the audio, Microsoft is adding live captions. And for CSOs, it is adding secure channels for private chats; “information barriers” that can be put in place for compliance purposes and to keep potential conflicts of interest between channels out; and data-loss prevention screening to stop sensitive information from being shared.

Finally, it is adding live events support, which will let users create broadcasts on Teams for up to 10,000 people (who do not need to be registered Teams users to attend).

All in all, this is a significant list of product updates. The company kicked off its service as very much a Slack-style product for “knowledge workers” but has since emphasized a more inclusive approach, for all kinds of employees, from front line to back-office.

There were no updates today on the number of third-party applications being incorporated into Teams, an area where Slack has particularly excelled. Instead, Microsoft is focused on making sure that as many as possible of the users it has already captured in Office 365, which today number 155 million, eventually turn to Teams as well. “We’re using as many of the Microsoft services as we can, tapping the Microsoft Graph to feed in services and structure information,” Wright said.

Microsoft is something of a latecomer to the collaboration space, arriving in the wake of a number of other efforts, but these user figures put the company’s effort well within striking distance of notable, and large, competitors. Last month, Facebook noted that Workplace, its own Slack rival, had 2 million users, also with 150 organizations with more than 10,000 users each included in that number. Slack, meanwhile, said in January that it had over 10 million daily active users, with 85,000 organizations on the platform.

(Notably, just yesterday Slack made a timely announcement in its bid to court more large enterprises: it will now let regulated customers manage their own encryption keys, an important component for winning more business in those sectors.)


Vonage brings number programmability to its business service

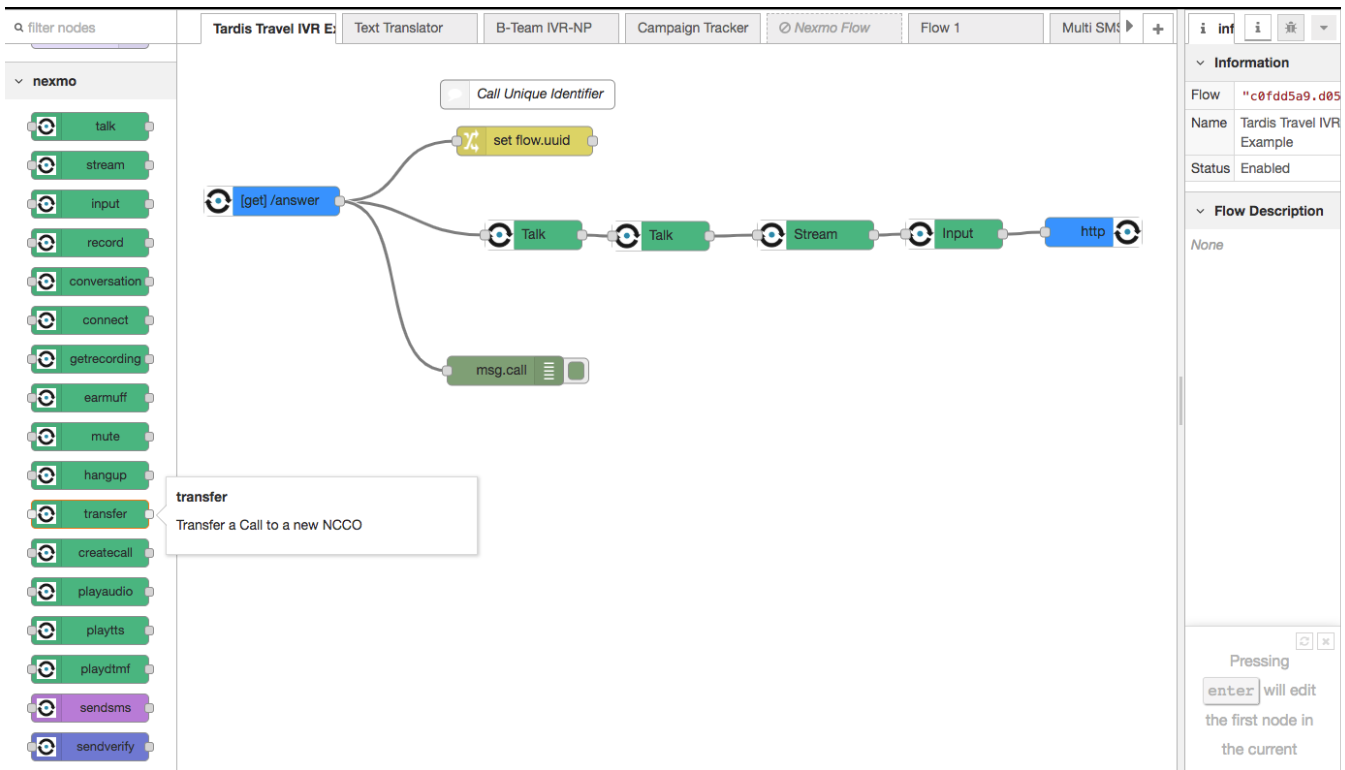

Chances are you still mostly think of Vonage as a consumer VoIP player, but in recent years the company has also launched its Vonage Business Cloud (VBC) platform and acquired Nexmo, an API-based communications service that competes directly with many of Twilio’s core services. Today, Vonage is bringing VBC and Nexmo a bit closer together with the launch of number programmability for its business customers.

What this means is that enterprises can now take any VBC number and extend it with the help of Nexmo’s APIs. To enable this, all they have to do is toggle a switch in their management console and then they’ll be able to programmatically route calls, create custom communications apps and workflows, and integrate third-party systems to build chatbots and other tools.

“About four years ago we made a pretty strong pivot to going from residential — a lot of people know Vonage as a residential player — to the business side,” Vonage senior VP of product management Jay Patel told me. “And through a series of acquisitions [including Nexmo], we’ve kind of built what we think is a very unique offering.” Those platforms, though, had largely remained separate from each other. With all of the pieces now in place, the team started thinking about how it could use the Nexmo APIs to let its customers in the unified communications and contact center space more easily customize these services.

About a year ago, the team started working on this new functionality that brings the programmability of Nexmo to VBC. “We realized it doesn’t make sense for us to create our own new sets of APIs on our unified communications and contact center space,” said Patel. “Why don’t we use the APIs that Nexmo has already built?”

As Patel also stressed, the phone number is still very much linked to a business or individual employee — and they don’t want to change that just for the sake of having a programmable service. By turning on programmability for these existing numbers, though, and leveraging the existing Nexmo developer ecosystem and the building blocks those users have already created, the company believes that it’s able to offer a differentiated service that allows users to stay on its platform instead of having to forward a call to a third-party service like Twilio, for example, to enable similar capabilities.

In terms of those capabilities, users can pretty much do anything they want with these calls — and that’s important because every company has different processes and requirements. Maybe that’s logging info into multiple CRM systems in parallel or taking a clip of a call and pushing it into a different system for training purposes. Or you could have the system check your calendar when there are incoming calls and then, if it turns out you are in a meeting, offer the caller a callback whenever your calendar says you’re available again. All of that should only take a few lines of code or, if you want to avoid most of the coding, a few clicks in the company’s GUI for building these flows.
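The calendar-aware callback flow described above boils down to a small piece of decision logic. The sketch below models only that decision; it does not call the real Nexmo or Vonage APIs, and the meeting format, function names and returned action strings are hypothetical stand-ins for whatever a real integration would use.

```python
# Hypothetical sketch of the "offer a callback when I'm in a meeting" flow.
# It models only the routing decision and does NOT use the real Nexmo/Vonage
# APIs. The calendar format and action strings are illustrative assumptions.
from datetime import datetime
from typing import List, Optional, Tuple

Meeting = Tuple[datetime, datetime]  # (start, end); end is exclusive


def next_free_time(now: datetime, meetings: List[Meeting]) -> Optional[datetime]:
    """Return when the callee is next free if `now` falls in a meeting, else None.

    Back-to-back or overlapping meetings are chained, so a callback is never
    offered for a moment that is itself busy.
    """
    busy_until: Optional[datetime] = None
    for start, end in sorted(meetings):
        current = busy_until or now
        if start <= current < end:
            busy_until = end
    return busy_until


def handle_incoming_call(now: datetime, meetings: List[Meeting]) -> str:
    """Decide what to do with an incoming call (illustrative action strings)."""
    free_at = next_free_time(now, meetings)
    if free_at is None:
        return "connect"
    return f"offer_callback at {free_at:%H:%M}"
```

In a real deployment, the returned action would be translated into whatever call-control instructions the platform expects; that translation layer is deliberately left out here.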

Vonage believes that these new capabilities will attract quite a few new customers. “It’s our value-add when we’re selling to new customers,” Patel said. “They’re looking for this kind of capability or are running into brick walls. We see a lot of companies that have an idea but they don’t know how to do it. They’re not engineers or they don’t have a big staff of developers, but because of the way we’ve implemented this, it brings the barrier of entry to create these solutions much lower than if you had a legacy system on-prem where you had to be a C++ developer to build an app.”


Xiaomi outs Redmi Go, a $65 entry-level smartphone for India

Chinese smartphone maker Xiaomi has announced a new entry-level smartphone at an event in Delhi.

The entry-level smartphone is targeted at the Indian market and looks intended to woo feature phone owners to upgrade from a more basic mobile.

It runs Google’s flavor of Android optimized for low-powered smartphones (Android Go) which supports lightweight versions of apps.

Under the hood the dual-SIM handset has a Qualcomm Snapdragon 425 chipset, 1GB RAM and 8GB of storage (though there’s a slot for expanding storage capacity up to 128GB).

Also on board: 4G cellular connectivity and a 3000mAh battery.

Up front there’s a 5-inch HD display with a 16:9 aspect ratio and a 5MP selfie camera. An 8MP camera brings up the rear, with support for 1080p video recording.

At the time of writing, the Redmi Go is priced at 4,499 rupees (~$65), although a markdown graphic on the company’s website suggests the initial price may be a temporary discount on a full RRP of 5,999 rupees (~$85). We’ve asked Xiaomi for confirmation.

Xiaomi’s website lists it as available to buy from 12PM on March 22.

Mi fans, presenting #RedmiGo #AapkiNayiDuniya

– Qualcomm® Snapdragon 425
– Android Oreo (Go Edition)
– 3000mAh Battery
– 8MP Rear camera with LED Flash
– 5MP Selfie camera
– 5″ HD display
– 4G Network Connectivity
– Color: Blue & black
– Price: ₹4,499

RT & spread the word! pic.twitter.com/aanAoiauqj

— Mi India (@XiaomiIndia) March 19, 2019

While Xiaomi is squeezing its entry-level smartphone price tag here, the Redmi Go’s cost to consumers in India still represents a sizeable bump on local feature phone prices.

For example, the Nokia 150 Dual SIM candybar can cost as little as 1,500 rupees (~$20). There is clearly a big difference between a candybar keypad phone and a full-screen smartphone, yet a price three times higher is an insurmountable barrier for many consumers in the market.

The Redmi Go also looks intended to respond to local carrier Reliance Jio’s 4G feature phones, which are positioned — price and feature wise — as a transitionary device, sitting between a dumber feature phone and full-fat smartphone.

The JioPhone 2 launched last year with a price tag of 2,999 rupees (~$40). So the Redmi Go looks intended to close the price gap, and thus to make a transitionary handset with a smaller screen look less attractive than a full-screen, Android-powered smartphone experience.

That said, the JioPhone handsets run a fork of Firefox OS, called KaiOS, which can also run lightweight versions of apps like Facebook, Twitter and Google.

So, again, many Indian consumers may not see the need (or be able) to shell out ~1,500 rupees more for a lightweight mobile computing experience when they can get something similar for less elsewhere. Indeed, plenty of the early responses to Xiaomi’s tweet announcing the Redmi Go brand it “overpriced.”
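The price comparisons in this section are easy to check from the rupee figures quoted above. The dollar conversions in the text are approximate, so this sketch works in rupees only; the device labels are just descriptive dictionary keys.

```python
# Price comparison using the rupee figures quoted in the article.
# Only the rupee ratios and gaps the article relies on are computed here.
PRICES_INR = {
    "Redmi Go (launch price)": 4_499,
    "Redmi Go (listed full RRP)": 5_999,
    "JioPhone 2": 2_999,
    "Nokia 150 Dual SIM (as low as)": 1_500,
}


def ratio(a: str, b: str) -> float:
    """How many times more expensive device `a` is than device `b`."""
    return PRICES_INR[a] / PRICES_INR[b]


def gap(a: str, b: str) -> int:
    """Absolute price difference in rupees between device `a` and device `b`."""
    return PRICES_INR[a] - PRICES_INR[b]
```

At its launch price, the Redmi Go comes out at almost exactly three times the Nokia 150’s floor price and 1,500 rupees more than the JioPhone 2; the listed full RRP would add another 1,500 rupees on top.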


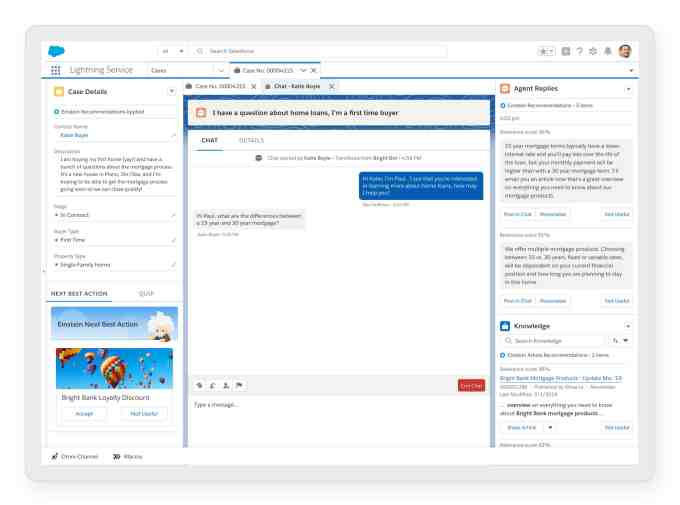

Salesforce update brings AI and Quip to customer service chat experience

When Salesforce introduced Einstein, its artificial intelligence platform, in 2016, it was laying the groundwork for artificial intelligence underpinnings across the platform. Since then the company has introduced a variety of AI enhancements to the Salesforce product family. Today, customer service got some AI updates.

The goal of any customer service interaction is to get the customer answers as quickly as possible. Many users opt to use chat over phone, and Salesforce has added some AI features to help customer service agents get answers more quickly in the chat interface. (The company hinted that phone customer service enhancements are coming.)

For starters, Salesforce is using machine learning to deliver article recommendations, response recommendations and next best actions to the agent in real time as they interact with customers. “With Einstein article recommendations, we can use machine learning on past cases and we can look at how articles were used to successfully solve similar cases in the past, and serve up the best article right in the console to help the agent with the case,” Martha Walchuk, senior director of product marketing for Salesforce Service Cloud explained.

Salesforce Service Console. Screenshot: Salesforce

The company is also using similar technology to provide response recommendations, which the agent can copy and paste into the chat to speed up response times. Before the interaction ends, the system can also suggest the next best action (a feature announced last year) based on the conversation. For example, that could be related information, an upsell recommendation or whatever type of action the customer defines.

Salesforce is also using machine learning to help route each person to the most appropriate customer service rep. As Salesforce describes it, this feature uses machine learning to filter cases and route them to the right queue or agent automatically, based on defined criteria such as best qualified agent or past outcomes.
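To illustrate the idea of routing on “past outcomes,” here is a deliberately simplified stand-in that sends a case to the agent with the best historical resolution rate for that case type. This is not Salesforce Einstein’s algorithm, which is not public; the agent names, categories and the `min_cases` threshold are hypothetical.

```python
# Simplified stand-in for outcome-based case routing. NOT Salesforce
# Einstein's algorithm; it only illustrates routing a case to the agent
# with the best past outcomes for that case type.
# Agent names, categories and the min_cases threshold are hypothetical.
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each past case: (agent, category, resolved_successfully)
PastCase = Tuple[str, str, bool]


def success_rates(history: Iterable[PastCase]) -> Dict[Tuple[str, str], Tuple[int, int]]:
    """Count (successes, total) per (agent, category)."""
    counts: Dict[Tuple[str, str], list] = defaultdict(lambda: [0, 0])
    for agent, category, resolved in history:
        counts[(agent, category)][0] += int(resolved)
        counts[(agent, category)][1] += 1
    return {key: (s, n) for key, (s, n) in counts.items()}


def route(category: str, history: Iterable[PastCase], min_cases: int = 3,
          fallback_queue: str = "general_queue") -> str:
    """Pick the agent with the highest past success rate for this category.

    Agents with fewer than `min_cases` past cases in the category are ignored
    so a single lucky resolution does not dominate; if nobody qualifies, the
    case goes to a fallback queue.
    """
    best_agent, best_rate = fallback_queue, -1.0
    for (agent, cat), (successes, total) in success_rates(history).items():
        if cat != category or total < min_cases:
            continue
        rate = successes / total
        if rate > best_rate:
            best_agent, best_rate = agent, rate
    return best_agent
```

A production system would learn from many more signals than a single success rate, but the shape of the decision (score candidates, apply criteria, fall back to a queue) is the same.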

Finally, the company is embedding Quip, which it acquired in 2016 for $750 million, into the customer service console so agents can communicate with one another to find answers to difficult problems. Not only does that help solve issues faster, but the conversations themselves become part of the knowledge base, which Salesforce can draw upon to teach its machine learning algorithms the correct responses to commonly asked questions in the future.

As with the Oracle AI announcement this morning, this use of artificial intelligence in sales, service and marketing is part of a much broader industry trend, as these companies try to inject intelligence into workflows to make them run more efficiently.


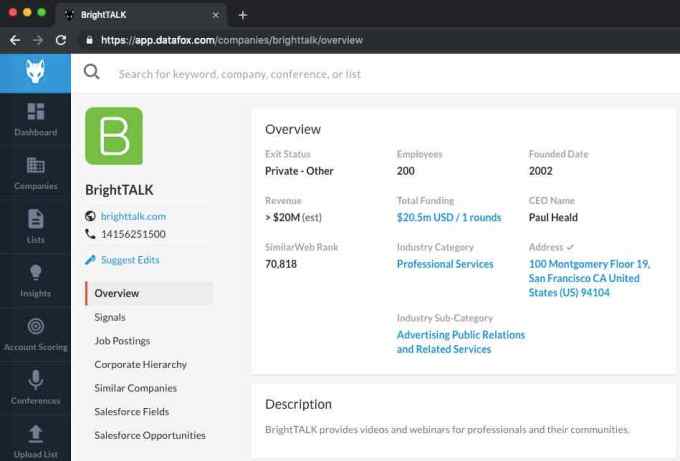

Oracle adds more AI features to its suite of sales tools

As the biggest sales and marketing technology firms mature, they are all turning to AI and machine learning to advance the field. This morning it was Oracle’s turn, announcing several AI-fueled features for its suite of sales tools.

Rob Tarkoff, who had previous stints at EMC, Adobe and Lithium and is now EVP of Oracle CX Cloud, says that the company has found ways to increase efficiency in the sales and marketing process by using artificial intelligence to speed up previously manual workflows, while taking advantage of all the data that is part of modern sales and marketing.

For starters, the company wants to help managers and salespeople understand the market better to identify the best prospects in the pipeline. To that end, Oracle is announcing integration with DataFox, the company it purchased last fall. The acquisition gave Oracle the ability to integrate highly detailed company profiles into its Customer Experience Cloud, including information such as SEC filings, job postings, news stories and other data about each company.

DataFox company profile. Screenshot: Oracle

“One of the things that DataFox helps you do better is machine learning-driven sales planning, so you can take sales and account data and optimize territory assignments,” he explained.

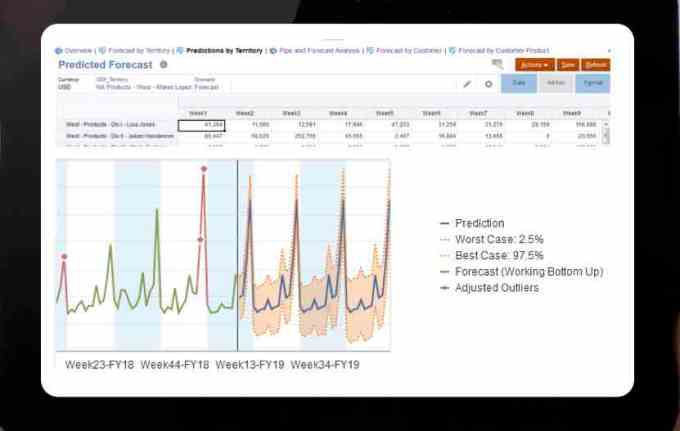

The company also announced an AI sales planning tool. Tarkoff says that Oracle created this tool in conjunction with its ERP team. The goal is to use machine learning to help finance make more accurate performance predictions based on internal data.

“It’s really a competitor to companies like Anaplan, where we are now in the business of helping sales leaders optimize planning and forecasting, using predictive models to identify better future trends,” Tarkoff said.

Sales forecasting tool. Screenshot: Oracle

The final tool is really about increasing sales productivity by giving salespeople a virtual assistant. In this case, it’s a chatbot that can handle tasks like scheduling meetings and offering task reminders to busy salespeople, while letting them use their voices to enter information about calls and tasks. “We’ve invested a lot in chatbot technology, and a lot in algorithms to help our bots with specific dialogues that have sales- and marketing-industry specific schema and a lot of things that help optimize the automation in a rep’s experience working with sales planning tools,” Tarkoff said.

Brent Leary, principal at CRM Essentials, says that this kind of voice-driven assistant could make it easier to use CRM tools. “The Smarter Sales Assistant has the potential to not only improve the usability of the application, but by letting users interact with the system with their voice it should increase system usage,” he said.

All of these enhancements are designed to increase the level of automation and help sales teams run more efficiently, with the ultimate goal of using data to drive more sales and make better use of sales personnel. Oracle is hardly alone in this goal, as competitors like Salesforce, Adobe and Microsoft are bringing a similar level of automation to their sales and marketing tools.

The sales forecasting tool and the sales assistant are generally available starting today; the DataFox integration will become generally available in June.


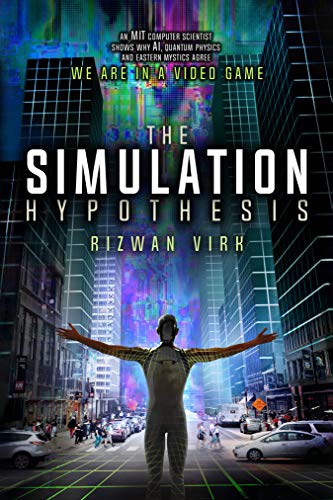

How to build The Matrix


Released this month 20 years ago, “The Matrix” went on to become a cultural phenomenon. This wasn’t just because of its ground-breaking special effects, but because it popularized an idea that has come to be known as the simulation hypothesis. This is the idea that the world we see around us may not be the “real world” at all, but a high-resolution simulation, much like a video game.

While the central question raised by “The Matrix” sounds like science fiction, it is now debated seriously by scientists, technologists and philosophers around the world. Elon Musk is among those; he thinks the odds that we are in a simulation are a billion to one (in favor of being inside a video-game world)!

As a founder and investor in many video game startups, I started to think about this question seriously after seeing how far virtual reality has come in creating immersive experiences. In this article we look at the development of video game technology past and future to ask the question: Could a simulation like that in “The Matrix” actually be built? And if so, what would it take?

What we’re really asking is how far away we are from The Simulation Point, the theoretical point at which a technological civilization would be capable of building a simulation that was indistinguishable from “physical reality.”

[Editor’s note: This article summarizes one section of the upcoming book, “The Simulation Hypothesis: An MIT Computer Scientist Shows Why AI, Quantum Physics and Eastern Mystics All Agree We Are in a Video Game.“]

From science fiction to science?

But first, let’s back up.

“The Matrix,” you’ll recall, starred Keanu Reeves as Neo, a hacker who encounters enigmatic references to something called the Matrix online. This leads him to the mysterious Morpheus (played by Laurence Fishburne, and aptly named after the Greek god of dreams) and his team. When Neo asks Morpheus about the Matrix, Morpheus responds with what has become one of the most famous movie lines of all time: “Unfortunately, no one can be told what The Matrix is. You’ll have to see it for yourself.”

Even if you haven’t seen “The Matrix,” you’ve probably heard what happens next. In perhaps its most iconic scene, Morpheus gives Neo a choice: take the “red pill” to wake up and see what the Matrix really is, or take the “blue pill” and keep living his life. Neo takes the red pill and “wakes up” in the real world to find that what he thought was real was actually an intricately constructed computer simulation: basically an ultra-realistic video game! Neo and other humans are actually living in pods, jacked into the system via a cord plugged into their cerebral cortex.

Who created the Matrix, and why are humans plugged into it at birth? Over the course of the first film and its two sequels, “The Matrix Reloaded” and “The Matrix Revolutions,” we find out that Earth has been taken over by a race of super-intelligent machines that harvest the bioelectricity of human bodies. The humans are kept occupied, docile and none the wiser thanks to their all-encompassing link to the Matrix!

But “The Matrix” wasn’t all philosophy and no action; its fight scenes were packed with eye-popping special effects. Some of these now have their own names in the entertainment and video game industries, such as the famous “bullet time.” When a bullet is fired at Neo, the visuals slow down time and manipulate space: the camera moves in a circular motion while the bullet is frozen in the air. In the context of a 3D computer world, this makes perfect sense, and the camera technique is now used in both live action and video games.

AI plays a big role too: in the sequels, we find out much more about the agents pursuing Neo, Morpheus and the team. Agent Smith (played brilliantly by Hugo Weaving), the main adversary in the first movie, is really a computer agent, an artificial intelligence meant to keep order in the simulation. Like any good AI villain, Agent Smith (who was voted the 84th most popular movie character of all time!) is able to reproduce himself and overlay himself onto any part of the simulation.

“The Matrix” storyboard from the original movie. (Photo by Jonathan Leibson/Getty Images for Warner Bros. Studio Tour Hollywood)

The Wachowskis, creators of “The Matrix,” claim to have been inspired by, among others, science fiction master Philip K. Dick. Most of us are familiar with Dick’s work from its many film and TV adaptations, including Blade Runner, Total Recall and, more recently, the Amazon show The Man in the High Castle. Dick often explored questions of what was “real” versus “fake” in his vast body of work. These are some of the same themes we will have to grapple with to build a real Matrix: AI that is indistinguishable from humans, implanting false memories and broadcasting directly into the mind.

As part of writing my upcoming book, I interviewed Dick’s wife, Tessa B. Dick, and she told me that Philip K. Dick actually believed we were living in a simulation. He believed that someone was changing the parameters of the simulation, and that most of us were unaware this was going on. This was, of course, the theme of his short story “Adjustment Team” (which served as the basis for the blockbuster “The Adjustment Bureau,” starring Matt Damon and Emily Blunt).

A quick summary of the basic (non-video game) simulation argument

Today, the simulation hypothesis has moved from science fiction to a subject of serious debate because of several key developments.

The first was when Oxford professor Nick Bostrom published his 2003 paper, “Are You Living in a Computer Simulation?” Bostrom doesn’t say much about video games or how we might build such a simulation; rather, he makes a clever statistical argument. Bostrom theorized that if a civilization ever reached the Simulation Point, it would create many ancestor simulations, each with large numbers (billions or trillions?) of simulated beings. Since simulated beings would vastly outnumber real beings, any being (including us!) would be more likely to be living inside a simulation than outside of it!
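The counting behind that statistical argument can be sketched in a few lines. This is a simplified head-count version under stated assumptions: one “real” civilization with a given population runs some number of simulations, each holding some number of simulated beings. Bostrom’s paper also weighs what fraction of civilizations ever reach that stage; that factor is left out here, and the parameter names are illustrative.

```python
# Simplified head-count version of the simulation argument.
# Assumption: one real civilization with `real_beings` individuals runs
# `num_simulations` simulations, each with `beings_per_simulation`
# simulated individuals. Bostrom's paper also factors in how many
# civilizations reach that stage at all; that term is omitted here.


def fraction_simulated(num_simulations: int, beings_per_simulation: int,
                       real_beings: int) -> float:
    """Fraction of all beings (real plus simulated) that live inside a simulation."""
    simulated = num_simulations * beings_per_simulation
    return simulated / (simulated + real_beings)
```

With, say, 1,000 simulations each as populous as the original civilization, the fraction is 1000/1001, or about 99.9 percent, which is the intuition behind “more likely to be living inside a simulation.”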

Other scientists, including physicist and Cosmos host Neil deGrasse Tyson and Stephen Hawking, weighed in, saying they found it hard to argue against this logic.

Bostrom’s argument implied two things that are the subject of intense debate. The first is that if any civilization ever reached the Simulation Point, then we are more likely in a simulation now. The second is that we are more likely to be AI, or simulated consciousnesses, than biological ones. On this second point, I prefer to use the “video game” version of the simulation argument, which is a little different from Bostrom’s version.

Video games hold the key

Let’s look more closely at the video game version of the argument, which rests on the rapid development of video game and computer graphics technology over the past decades. In video games, we have both “players,” who exist outside the game, and “characters,” who exist inside it. In the game, we have PCs (player characters), which are controlled by the players (you might say mentally attached to them), and NPCs (non-player characters), which are artificial characters run by the simulation itself.
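The player/character distinction maps naturally onto how games are structured in code. Here is an illustrative sketch, with class and method names that are hypothetical rather than taken from any particular engine: every character lives inside the game, but a PC’s decisions come from a player outside it, while an NPC’s come from the game’s own logic.

```python
# Illustrative model of the player/character split. Every character lives
# inside the game world, but a PC's actions come from a player outside the
# game, while an NPC's actions come from logic running inside the game.
# Class and method names are hypothetical, not from any specific engine.
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Character:
    name: str
    # For a PC: a function standing in for input from the external player.
    player_input: Optional[Callable[[], str]] = None

    @property
    def is_player_character(self) -> bool:
        return self.player_input is not None

    def next_action(self) -> str:
        if self.player_input is not None:
            return self.player_input()  # decided outside the simulation
        return self.npc_policy()        # decided by the simulation itself

    def npc_policy(self) -> str:
        # Placeholder for the game's own AI; a real game would do far more.
        return "patrol"
```

For example, `Character("Neo", player_input=lambda: "dodge")` behaves as a PC, while `Character("Agent Smith")` falls back on the built-in NPC policy.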


Unity adds preview support for Nvidia’s ray tracing tech to push gaming realism

Ray tracing has been a major topic of conversation at both GDC and GTC, so it seems fitting that the overlapping conventions would both kick off with an announcement that touches both industries.

Today at GTC, Nvidia announced that it has built out a number of major partnerships with 3D software makers, including some obvious names like Adobe and Autodesk, to integrate Nvidia’s RTX ray-tracing platform into their future software releases. The partnership with Unity is perhaps the most interesting, given the excitement among game developers about bringing real-time ray tracing to interactive works.

Epic Games had already announced Unreal Engine 4.22 support for Nvidia RTX ray tracing, and it was only a matter of time before Unity took the plunge as well. Now the tech is officially coming to Unity’s High Definition Render Pipeline (HDRP), available in preview starting today.

The technology is all about rendering lighting in games more realistically, showing how light interacts with the atmosphere and the objects it strikes. Ray tracing has already been in use elsewhere, but rendering all of this is resource-intensive, which is why the advances of the past few years that make it viable as a real-time system are such an enticing prospect.
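At its core, a ray tracer answers one question per pixel: what does a ray from the camera hit, and how much light reaches that point? The sketch below is a minimal textbook illustration (one sphere, one directional light, Lambertian diffuse shading) and has nothing to do with the Nvidia RTX or Unity HDRP APIs; real-time engines add hardware-accelerated acceleration structures, multiple bounces, shadows and denoising on top of this basic step.

```python
# Minimal textbook illustration of what a ray tracer computes for one pixel:
# shoot a ray, find where it hits an object, then shade the hit point by how
# directly it faces the light (Lambertian diffuse). Unrelated to the Nvidia
# RTX or Unity HDRP APIs.
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec) -> Vec:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Distance along a normalized ray to the nearest sphere hit, or None.

    Solves |origin + t*direction - center|^2 = radius^2 for t. Assumes the
    ray starts outside the sphere.
    """
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    t = -b - math.sqrt(disc)
    return t if t > 0 else None


def shade(origin: Vec, direction: Vec, center: Vec, radius: float, light_dir: Vec) -> float:
    """Brightness in [0, 1] for the pixel this ray passes through (0 = miss or unlit)."""
    d = normalize(direction)
    t = hit_sphere(origin, d, center, radius)
    if t is None:
        return 0.0
    point = (origin[0] + t * d[0], origin[1] + t * d[1], origin[2] + t * d[2])
    normal = normalize(sub(point, center))
    # Lambert's cosine law: surfaces facing the light are brightest.
    return max(0.0, dot(normal, normalize(light_dir)))
```

Doing this for every pixel, then following reflected and shadow rays for each hit, is what makes ray tracing so expensive, and why hardware support matters for real-time use.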

Nvidia has certainly been tooting its horn about this technology, but there have been some doubts about whether it is just another technology that’s still a few years away from widespread adoption among game developers.

“Real-time ray tracing moves real-time graphics significantly closer to realism, opening the gates to global rendering effects never before possible in the real-time domain,” a Unity exec said in a statement. In its announcement, Nvidia boasted that its system enabled “ray traced images that can be indistinguishable from photographs” that “blur the line between real-time and reality.”

While the prospect of added realism in gaming is certainly something consumers will be psyched about, engine-makers will undoubtedly also be promoting their early access to the Nvidia tech to customers in other industries including enterprise.
