TC

Auto Added by WPeMatico

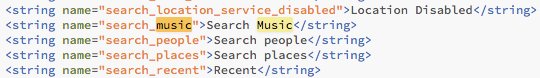

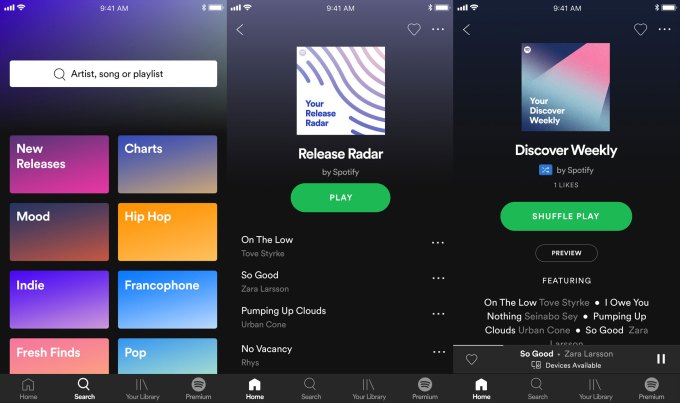

Instagram is preparing to let you add music to your Stories, judging by code found inside its Android app. “Music stickers” could let you search for and add a song to your posts, thanks to licensing deals with the major record labels recently struck by Facebook. Instagram is also testing a way to automatically detect a song you’re listening to and display the artist and song title as just a visual label.

Listenable music stickers would make Instagram Stories much more interesting to watch. Amateur video footage suddenly looks like DIY MTV when you add the right score. The feature could also steal thunder from teen lip-syncing app sensation Musical.ly, and from stumbling rival Snapchat, which planned but scrapped a big foray into music. And alongside Instagram Stories’ new platform for sharing posts directly from third-party apps, including Spotify and SoundCloud, these stickers could make Instagram a powerful driver of music discovery.

TechCrunch was first tipped off to the hidden music icons and code by reader Ishan Agarwal. Instagram declined to comment. But Instagram later confirmed three other big features first reported by TechCrunch and spotted by Agarwal that it initially refused to discuss: Focus mode for shooting portraits, QR-scannable Nametags for following people, and video calling, which got an official debut at F8.

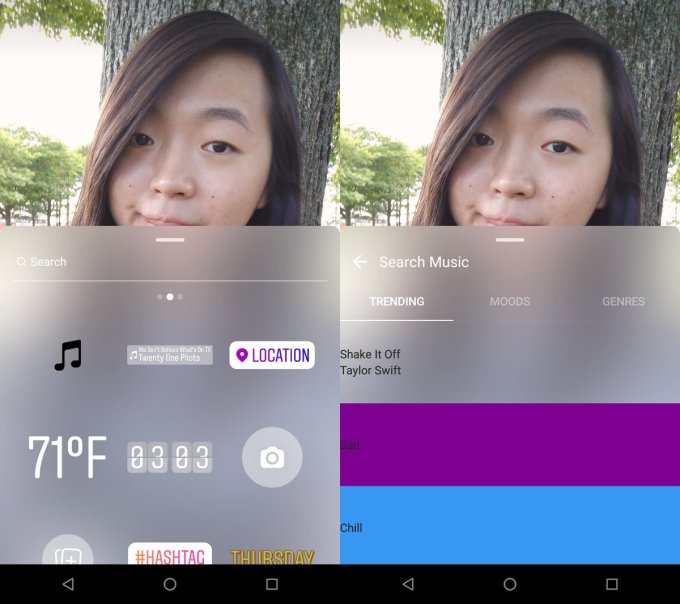

[Update: Jane Manchun Wong tells TechCrunch she was briefly able to test the feature, as seen in the screenshot above. The prototype design looks a bit janky, and Instagram crashed when she tried to post anything using the music stickers. Beyond the music sticker search interface seen on the right, Wong tells us Instagram automatically detected a song she was currently playing on her phone and created a sticker for it (not using audio recognition like Shazam).]

Facebook and Instagram’s video editing features have been in a sad state for a long time. I wrote about the big opportunity back in 2013, and in 2016 called on both Facebook and Instagram to add more editing features, including soundtracks. Finally in late 2017, Facebook started testing Sound Collection, which lets you add sound effects and a very limited range of songs by lesser-known artists to your videos. But since then, Facebook has secured licensing deals with Sony, Warner, Universal and European labels.

For years, people thought Facebook’s ongoing negotiations with record labels would power some Spotify competitor. But streaming is a crowded market with strong solutions already. The bigger problem for Facebook was that if users added soundtracks themselves using editing software, or a song playing in the background got caught in the recording, those videos could be removed due to copyright complaints from the labels. Facebook’s intention was the opposite — to make it easier to add popular music to your posts so they’d be more fun to consume.

Instagram’s music stickers could be the culmination of all those deals.

The code shows that Instagram’s app has an unreleased “Search Music” feature built in beside its location and friend-mention sticker search options inside Instagram Stories. These “music overlay stickers” can be searched using tabs for “Genres,” “Moods,” and “Trending.” Instagram could certainly change the feature before it’s launched, or scrap it altogether. But the clear value of music stickers and the fact that Instagram owned up to the Focus, Nametags and Video Calling features all within three months of us reporting their appearance in the code lend weight to an upcoming launch.

It’s not entirely clear, but it seems that once you’ve picked a song and added it as a music sticker to your Story, a clip of that song will play while people watch. It’s possible that the initial version of the stickers will only display the artist and title of the song similar to Facebook’s activity status updates, rather than actually adding it as a listenable soundtrack.

These stickers will almost surely be addable to videos, but maybe Instagram will let you include them on photos too. It would be great if viewers could tap through the sticker to hear the song or check it out on their preferred streaming service. That could make Instagram the new Myspace, where you fall in love with new music through your friends, though there are no indicators in the code about that.

Perhaps Instagram will be working with a particular partner on the feature, like it did with Giphy for its GIF stickers. Spotify, with its free tier and long-running integrations with Facebook dating back to the 2011 Open Graph ticker, would make an obvious choice. But Facebook might play it more neutral, powering the feature another way, or working with a range of providers, potentially including Apple, YouTube, SoundCloud and Amazon.

Several apps like Sounds and Soundtracking have tried to be the “Instagram for music.” But none have gained mass traction because it’s hard to tell if you like a song quickly enough to pause your scrolling, staring at album art isn’t fun, users don’t want a whole separate app for this and Facebook and Instagram’s algorithms can bury cross-posts of this content. But Stories — with original visuals that are easily skippable, natively created and consumed in your default social app — could succeed.

Getting more users wearing headphones or turning the sound on while using Instagram could be a boon to the app’s business, as advertisers all want to be heard as well as seen. The stickers could also get young Instagrammers singing along to their favorite songs the way 60 million Musical.ly users do. In that sense, music could spice up the lives of people who otherwise might not appear glamorous through Stories.

Music stickers could let Instagram beat Snapchat to the punch. Leaked emails from the 2014 Sony hack showed Snap CEO Evan Spiegel was intent on launching a music video streaming feature or even creating Snapchat’s own record label. But complications around revenue-sharing negotiations and the potential to distract the team and product from Snapchat’s core use case derailed the project. Instead, Snap has worked with record labels on Discover channels and augmented reality lenses to promote new songs. But Snapchat still has no sound board or soundtrack features, leaving some content silent or drowned in random noise.

With the right soaring strings, the everyday becomes epic. With the perfect hip-hop beat, a standard scene gains swagger. And with the hottest new dance hook, anywhere can be a party. Instagram has spent the past few years building all conceivable forms of visual flair to embellish your photos and videos. But it’s audio that could be the next dimension of Stories.

Powered by WPeMatico

Printify, a startup all the way from Riga, Latvia, has raised $1 million in seed funding led by Google AdSense pioneer Gokul Rajaram as it looks to expand its services in the U.S. and build out its team in Latvia.

Today, roughly 50,000 e-commerce stores use Printify’s services for printing-on-demand, according to the company.

Together with Lumi, which can handle packaging for consumer-facing startups, Printify is making the notion of becoming a brand as seamless as possible by taking much of a vendor’s legwork out of the equation.

The company keeps its customers’ billing information on file and links with the back-end ordering system of almost any e-commerce platform.

When an order comes in, Printify gets an API notification to begin working on a product. The company then sends print-ready files to an on-demand manufacturer that can print a design and ship a product within 24 hours.
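The flow described above (order notification, print-ready file, hand-off to a manufacturer) can be sketched roughly as follows. This is a minimal illustration only, not Printify's actual API: the `Order` and `PrintJob` shapes, the function names, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Order:
    """An order as it might arrive from a store's back end (hypothetical shape)."""
    order_id: str
    product: str
    design_url: str


@dataclass
class PrintJob:
    """What gets handed to an on-demand manufacturer (hypothetical shape)."""
    order_id: str
    print_ready_file: str
    manufacturer: str


def prepare_print_file(order: Order) -> str:
    # Placeholder for rendering the customer's design into a print-ready file;
    # here it only derives a file name from the order.
    return f"{order.order_id}-{order.product}.pdf"


def handle_order_notification(order: Order, manufacturer: str) -> PrintJob:
    """Turn an incoming order notification into a job for a manufacturer."""
    return PrintJob(order.order_id, prepare_print_file(order), manufacturer)


# Hypothetical example values, used only to exercise the sketch.
job = handle_order_notification(
    Order("1001", "phone-case", "https://example.com/design.png"),
    manufacturer="example-print-house",
)
print(job)
```

In a marketplace model like the one the company later adopted, the `manufacturer` argument is the piece that would vary per order.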

Printify founder James Berdigans came up with the idea for the company after starting a business making accessories for Apple products.

“We wanted to print custom phone cases and we thought we’d find an on-demand manufacturer and it would be easy,” says Berdigans. That’s when Printify was born.

The company first integrated through Shopify and began to see some traction in November 2015, but because the company didn’t own its own manufacturing, quality became a concern.

In 2016, Berdigans pivoted to the marketplace model because he saw demand coming from a growing number of small business owners like himself that needed access to a selection of quality printing houses.

Newer techniques such as direct-to-garment printing and digital printing on T-shirts are going to grow very rapidly over the next few years, according to Berdigans.

A graduate from the 500 Startups accelerator program, the company is already profitable and hoping to capitalize on its success with this new funding to expand its engineering team.

“In just two years, Printify has become profitable and is growing at a rapid pace. With this investment, we plan to double our Riga team in 2018. That will create at least 30 new jobs, most of which will be in programming, design, and customer support,” said Printify co-founder Artis Kehris in a statement.

At the Microsoft Build developer conference today, Microsoft and Chinese drone manufacturer DJI announced a new partnership that aims to bring more of Microsoft’s machine learning smarts to commercial drones. Given Microsoft’s current focus on bringing intelligence to the edge, the partnership is a logical one, since drones are essentially semi-autonomous edge computing devices.

DJI also today announced that Azure is now its preferred cloud computing partner and that it will use the platform to analyze video data, for example. The two companies also plan to offer new commercial drone solutions using Azure IoT Edge and related AI technologies for verticals like agriculture, construction and public safety. Indeed, the companies are already working together on Microsoft’s FarmBeats solution, an AI and IoT platform for farmers.

As part of this partnership, DJI is launching a software development kit (SDK) for Windows that will allow Windows developers to build native apps to control DJI drones. Using the SDK, developers can also integrate third-party tools for managing payloads or accessing sensors and robotics components on their drones. DJI already offers a Windows-based ground station.

“DJI is excited to form this unique partnership with Microsoft to bring the power of DJI aerial platforms to the Microsoft developer ecosystem,” said Roger Luo, DJI president, in today’s announcement. “Using our new SDK, Windows developers will soon be able to employ drones, AI and machine learning technologies to create intelligent flying robots that will save businesses time and money and help make drone technology a mainstay in the workplace.”

Interestingly, Microsoft also stresses that this partnership gives DJI access to its Azure IP Advantage program. “For Microsoft, the partnership is an example of the important role IP plays in ensuring a healthy and vibrant technology ecosystem and builds upon existing partnerships in emerging sectors such as connected cars and personal wearables,” the company notes in today’s announcement.

At its Build developer conference this week, Microsoft is putting a lot of emphasis on artificial intelligence and edge computing. To a large degree, that means bringing many of the existing Azure services to machines that sit at the edge, no matter whether that’s a large industrial machine in a warehouse or a remote oil-drilling platform. The service that brings all of this together is Azure IoT Edge, which is getting quite a few updates today. IoT Edge is a collection of tools that brings AI, Azure services and custom apps to IoT devices.

As Microsoft announced today, Azure IoT Edge, which sits on top of Microsoft’s IoT Hub service, is now getting support for Microsoft’s Cognitive Services APIs, for example, as well as support for Event Grid and Kubernetes containers. In addition, Microsoft is also open sourcing the Azure IoT Edge runtime, which will allow developers to customize their edge deployments as needed.

The highlight here is support for Cognitive Services for edge deployments. Right now, this is a bit of a limited service as it actually only supports the Custom Vision service, but over time, the company plans to bring other Cognitive Services to the edge as well. The appeal of this service is pretty obvious, too, as it will allow industrial equipment or even drones to use these machine learning models without internet connectivity so they can take action even when they are offline.

As far as AI goes, Microsoft also today announced that it will bring its new Brainwave deep neural network acceleration platform for real-time AI to the edge.

The company has also teamed up with Qualcomm to launch an AI developer kit for on-device inferencing on the edge. The focus of the first version of this kit will be on camera-based solutions, which doesn’t come as a major surprise given that Qualcomm recently launched its own vision intelligence platform.

IoT Edge is also getting a number of other updates that don’t directly involve machine learning. Kubernetes support is an obvious one and a smart addition, given that it will allow developers to build Kubernetes clusters that can span both the edge and a more centralized cloud.

The appeal of running Event Grid, Microsoft’s event routing service, at the edge is also pretty obvious, given that it’ll allow developers to connect services with far lower latency than if all the data had to run through a remote data center.

Other IoT Edge updates include the planned launch of a marketplace that will allow Microsoft partners and developers to share and monetize their edge modules, as well as a new certification program for hardware manufacturers to ensure that their devices are compatible with Microsoft’s platform. IoT Edge, as well as Windows 10 IoT and Azure Machine Learning, will also soon support hardware-accelerated model evaluation with DirectX 12 GPUs, which are available in virtually every modern Windows PC.

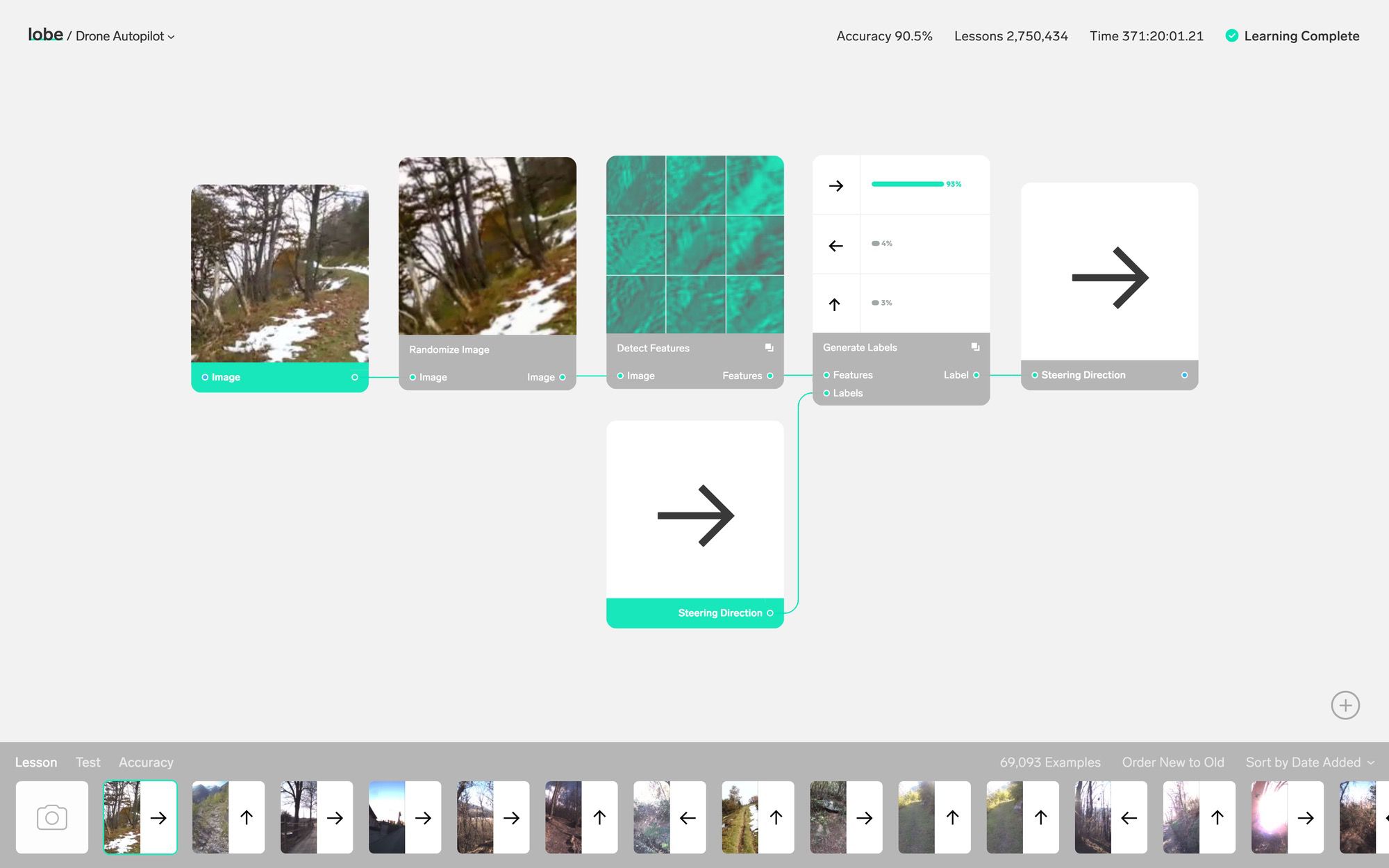

Machine learning may be the tool du jour for everything from particle physics to recreating the human voice, but it’s not exactly the easiest field to get into. Despite the complexities of video editing and sound design, we have UIs that let even a curious kid dabble in them, so why not with machine learning? That’s the goal of Lobe, a startup and platform that genuinely seems to have made AI models as simple to put together as LEGO bricks.

I talked with Mike Matas, one of Lobe’s co-founders and the designer behind many a popular digital interface, about the platform and his motivations for creating it.

“There’s been a lot of situations where people have kind of thought about AI and have these cool ideas, but they can’t execute them,” he said. “So those ideas just like shed, unless you have access to an AI team.”

This happened to him, too, he explained.

“I started researching because I wanted to see if I could use it myself. And there’s this hard to break through veneer of words and frameworks and mathematics — but once you get through that the concepts are actually really intuitive. In fact even more intuitive than regular programming, because you’re teaching the machine like you teach a person.”

But like the hard shell of jargon, existing tools were also rough around the edges: powerful and functional, but much more like learning a development environment than playing around in Photoshop or Logic.

“You need to know how to piece these things together, there are lots of things you need to download. I’m one of those people who if I have to do a lot of work, download a bunch of frameworks, I just give up,” he said. “So as a UI designer I saw the opportunity to take something that’s really complicated and reframe it in a way that’s understandable.”

Lobe, which Matas created with his co-founders Markus Beissinger and Adam Menges, takes the concepts of machine learning, things like feature extraction and labeling, and puts them in a simple, intuitive visual interface. As demonstrated in a video tour of the platform, you can make an app that recognizes hand gestures and matches them to emoji without ever seeing a line of code, let alone writing one. All the relevant information is there, and you can drill down to the nitty gritty if you want, but you don’t have to. The ease and speed with which new applications can be designed and experimented with could open up the field to people who see the potential of the tools but lack the technical know-how.

He compared the situation to the early days of PCs, when computer scientists and engineers were the only ones who knew how to operate them. “They were the only people able to use them, so they were the only people able to come up with ideas about how to use them,” he said. But by the late ’80s, computers had been transformed into creative tools, largely because of improvements to the UI.

Matas expects a similar flood of applications, even beyond the many we’ve already seen, as the barrier to entry drops.

“People outside the data science community are going to think about how to apply this to their field,” he said, and unlike before, they’ll be able to create a working model themselves.

A raft of examples on the site show how a few simple modules can give rise to all kinds of interesting applications: reading lips, tracking positions, understanding gestures, generating realistic flower petals. Why not? You need data to feed the system, of course, but doing something novel with it is no longer the hard part.

And in keeping with the machine learning community’s commitment to openness and sharing, Lobe models aren’t some proprietary thing you can only operate on the site or via the API. “Architecturally we’re built on top of open standards like TensorFlow,” Matas said. Do the training on Lobe, test it and tweak it on Lobe, then compile it down to whatever platform you want and take it to go.

Right now the site is in closed beta. “We’ve been overwhelmed with responses, so clearly it’s resonating with people,” Matas said. “We’re going to slowly let people in, it’s going to start pretty small. I hope we’re not getting ahead of ourselves.”

Why has San Francisco’s startup scene generated so many hugely valuable companies over the past decade?

That’s the question we asked over the past few weeks while analyzing San Francisco startup funding, exit, and unicorn creation data. After all, it’s not as if founders of Uber, Airbnb, Lyft, Dropbox and Twitter had to get office space within a couple of miles of each other.

We hadn’t thought our data-centric approach would yield a clear recipe for success. San Francisco private and newly public unicorns are a diverse bunch, numbering more than 30, in areas ranging from ridesharing to online lending. Surely the path to billion-plus valuations would be equally varied.

But surprisingly, many of their secrets to success seem formulaic. The most valuable San Francisco companies to arise in the era of the smartphone have a number of shared traits, including a willingness and ability to post massive, sustained losses; high-powered investors; and a preponderance of easy-to-explain business models.

No, it’s not a recipe that’s likely replicable without talent, drive, connections and timing. But if you’ve got those ingredients, following the principles below might provide a good shot at unicorn status.

First, lose money until you’ve left your rivals in the dust. This is the most important rule. It is the collective glue that holds the narratives of San Francisco startup success stories together. And while companies in other places have thrived with the same practice, arguably San Franciscans do it best.

It’s no secret that a majority of the most valuable internet and technology companies citywide lose gobs of money or post tiny profits relative to valuations. Uber, called the world’s most valuable startup, reportedly lost $4.5 billion last year. Dropbox lost more than $100 million after losing more than $200 million the year before and more than $300 million the year before that. Even Airbnb, whose model of taking a share of homestay revenues sounds like an easy recipe for returns, took nine years to post its first annual profit.

Industry stalwarts lose money, too. Salesforce, with a market cap of $88 billion, has posted losses for the vast majority of its operating history. Square, valued at nearly $20 billion, has never been profitable on a GAAP basis. DocuSign, the 15-year-old newly public company that dominates the e-signature space, lost more than $50 million in its last fiscal year (and more than $100 million in each of the two preceding years). Of course, these companies, like their unicorn brethren, invest heavily in growing revenues, attracting investors who value this approach.

We could go on. But the basic takeaway is this: Losing money is not a bug. It’s a feature. One might even argue that entrepreneurs in metro areas with a more fiscally restrained investment culture are missing out.

What’s also noteworthy is the propensity of so many city startups to wreak havoc on existing, profitable industries without generating big profits themselves. Craigslist, a San Francisco nonprofit, may have started the trend in the 1990s by blowing up the newspaper classified business. Today, Uber and Lyft have decimated the value of taxi medallions.

Not making money can be the ultimate competitive advantage, if you can afford it, as it prevents others from entering the space or catching up as your startup gobbles up greater and greater market share. Then, when rivals are out of the picture, it’s possible to raise prices and start focusing on operating in the black.

You can’t lose money on your own. And you can’t lose any old money, either. To succeed as a San Francisco unicorn, it helps to lose money provided by one of a short list of prestigious investors who have previously backed valuable, unprofitable Northern California startups.

It’s not a mysterious list. Most of the names are well-known venture and seed investors who’ve been actively investing in local startups for many years and commonly feature on rankings like the Midas List. We’ve put together a few names here.

You might wonder why it’s so much better to lose money provided by Sequoia Capital than, say, a lower-profile but still wealthy investor. We could speculate that the following factors are at play: a firm’s reputation for selecting winning startups, a willingness of later investors to follow these VCs at higher valuations and these firms’ skill in shepherding portfolio companies through rapid growth cycles to an eventual exit.

Whatever the exact connection, the data speaks for itself. The vast majority of San Francisco’s most valuable private and recently public internet and technology companies have backing from investors on the short list, commonly beginning with early-stage rounds.

Generally speaking, you don’t need to know a lot about semiconductor technology or networking infrastructure to explain what a high-valuation San Francisco company does. Instead, it’s more along the lines of: “They have an app for getting rides from strangers,” or “They have an app for renting rooms in your house to strangers.” It may sound strange at first, but pretty soon it’s something everyone seems to be doing.

A list of 32 San Francisco-based unicorns and near-unicorns is populated mostly with companies that have widely understood brands, including Pinterest, Instacart and Slack, along with Uber, Lyft and Airbnb. While there are some lesser-known enterprise software names, they’re not among the largest investment recipients.

Part of the consumer-facing, high brand recognition qualities of San Francisco startups may be tied to the decision to locate in an urban center. If you were planning to manufacture semiconductor components, for instance, you would probably set up headquarters in a less space-constrained suburban setting.

While it can be frustrating to watch a company lurch from quarter to quarter without a profit in sight, there is ample evidence the approach can be wildly successful over time.

Seattle’s Amazon is probably the poster child for this strategy. Jeff Bezos, recently declared the world’s richest man, led the company for more than a decade before it reported its first annual profit.

These days, San Francisco seems to be ground zero for this company-building technique. While it’s certainly not necessary to locate here, it does seem to be the single urban location most closely associated with massively scalable, money-losing consumer-facing startups.

Perhaps it’s just one of those things that after a while becomes status quo. If you want to be a movie star, you go to Hollywood. And if you want to make it on Wall Street, you go to Wall Street. Likewise, if you want to make it by launching an industry-altering business with a good shot at a multi-billion-dollar valuation, all while losing eye-popping sums of money, then you go to San Francisco.

Perennial smartphone struggler HTC has revealed its newest smartphone — the U12/U12+ — will launch on May 23. The big spoiler from the company is that the phone will include… components.

Coming Soon. A phone that is more than the sum of its specs. pic.twitter.com/m2skJSK0qt

— HTC (@htc) May 3, 2018

That isn’t exactly an informative teaser, but we do actually have a flavor for what HTC will bring to market.

Serial leaker Evan Blass, writing for VentureBeat, revealed a dual-camera setup on the reverse of the phone, with a Snapdragon 845 chipset, 6GB of RAM and either 64GB or 128GB of internal storage under the hood.

HTC badly needs this device to be a winner. Its most recent results, for Q4 2017, were grim, with a loss of $337 million on total sales of $540 million. The company did get a cash boost from a $1.1 billion deal to sell some of its tech and talent to Google, but that wasn’t reflected in these results.

The firm is putting that capital to use for “greater investment in emerging technologies” that it says will be “vital across all of our businesses and present significant long-term growth opportunities.” The fruits of that aren’t likely to be seen for a while yet.

In this episode of Technotopia I talk to Jeff Schmidt of the Columbus Collaboratory. He is well-versed in the future of security and our conversation ranged from the rise of the midwest to the future of cyberattacks.

The Columbus Collaboratory is a unique think tank dedicated to building security and system solutions for major clients. It’s a sort of Delta Force for major corporations headquartered in Columbus, and Schmidt has a lot to say about the value of a good security plan.

Technotopia is a podcast by John Biggs about a better future. You can subscribe in Stitcher, RSS or iTunes and listen to the MP3 here.

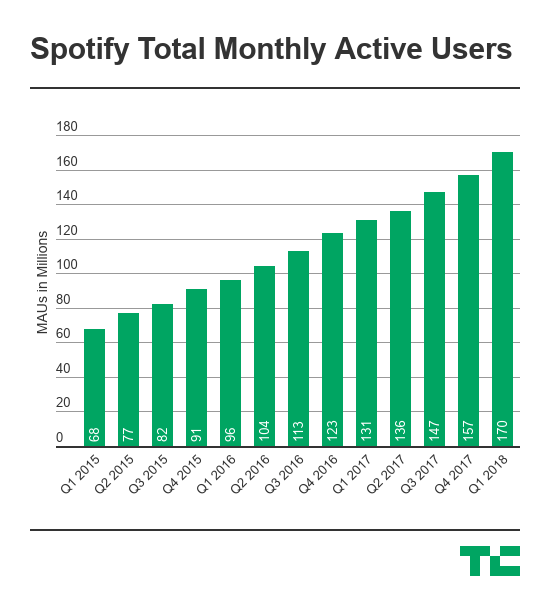

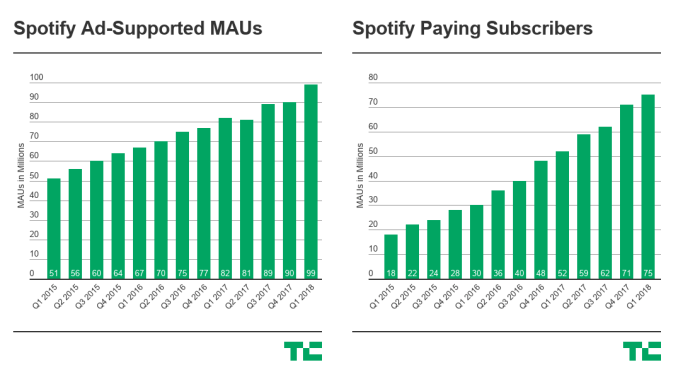

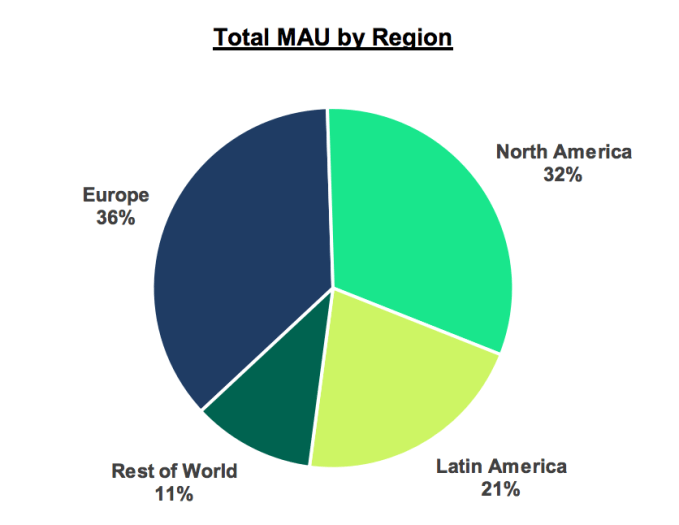

In Spotify’s first-ever earnings report, the streaming music company came up a little short, pulling in $1.36 billion in revenue in Q1 2018. That compares with Wall Street’s estimates of $1.4 billion in revenue and an adjusted EPS loss of $0.34. Spotify hit 170 million monthly active users, up 30 percent year-over-year and up 6.9 percent from 159 million in Q4 2017, including 99 million ad-supported users. It also hit 75 million paid subscribers, up 5.6 percent from 71 million in Q4 and up 45 percent YoY.

Interestingly, the MAU count indicates that 4 million of Spotify’s 75 million subscribers pay but don’t listen. Spotify confirmed as much. For reference, Apple Music has roughly 40 million subscribers.
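The arithmetic behind that 4 million figure can be reconstructed from the numbers quoted above. This is a back-of-the-envelope sketch, assuming every monthly active user is either on the ad-supported tier or a paying subscriber; the variable names are illustrative.

```python
# Figures as reported in the text, in millions of users.
monthly_active_users = 170
ad_supported_users = 99
paid_subscribers = 75

# Assumption: every monthly active user is either ad-supported or paying,
# so active paying users are whatever remains after removing the free tier.
active_paid_subscribers = monthly_active_users - ad_supported_users

# Subscribers who are billed but were not counted as active in the month.
inactive_paid_subscribers = paid_subscribers - active_paid_subscribers

print(active_paid_subscribers)    # 71
print(inactive_paid_subscribers)  # 4
```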

Spotify’s results were in line with the guidance it gave yet Wall Street was still disappointed. Spotify shares promptly fell over 8 percent in after-hours trading to around $156, beneath its IPO pop a month ago but still above its $149 day one closing price and $132 IPO pricing.

Spotify’s gross margin was 24.9 percent in Q1, above the top of its guidance range of 23-24 percent. Its operating loss was $48.9 million, which improved significantly and came in under the $59 million to $95 million operating loss Spotify warned of. The music company had $1.91 billion in cash and cash equivalents at the end of Q1.

As for Q2 guidance, Spotify expects 175 to 180 million MAU, 79 to 83 million paid subscribers, and $1.3 to $1.55 billion in revenue, excluding the impact of foreign exchange rates. It’s planning an operating loss of $71 million to $167 million, in part due to a $35 million to $42 million expense related to its direct listing debut on the public markets.

During the earnings call, CEO Daniel Ek said he hasn’t seen any significant impact from increased promotion by its competitor Apple Music. In fact, churn hit an all-time low of 4.7 percent, and the ratio of lifetime value to customer acquisition cost is holding firm at 2.7:1. But overall, “We don’t see this as a winner takes all market,” Ek says.

As for voice-activated smart speakers, Ek said, “We view it longterm as an opportunity not a threat,” since Spotify is available on Google Home and Amazon Alexa devices.

Spotify is hoping to boost paid subscriber numbers by first luring more users to its free ad-supported service. Last month it unveiled a revamped free tier that lets users listen to songs on-demand on particular Spotify-controlled playlists instead of only being able to play in shuffle mode. The idea is that once users get a taste of on-demand listening, they’ll pay to upgrade so they can listen to whatever they want across the whole catalog.

That strategy could not only boost subscriber numbers, but also give Spotify more leverage over the record labels. More than 30 percent of all Spotify listening now happens on its owned playlists. That gives it the power to choose what will become a hit, and in turn means record labels need to play nice. This could help Spotify secure more exclusive content and a better bargaining position in royalty negotiations.

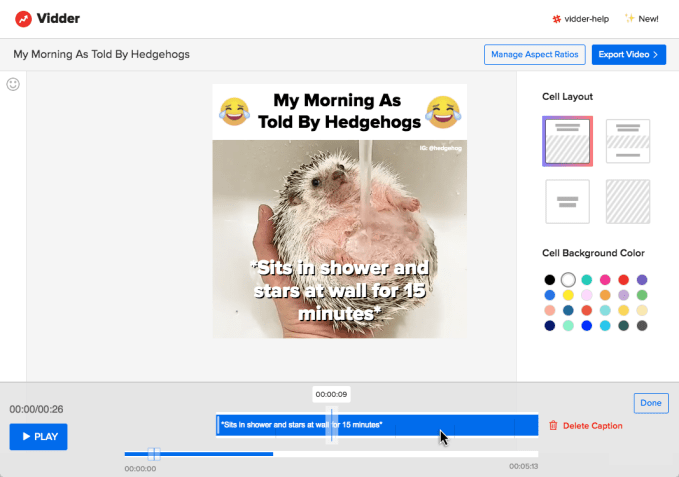

Although the “pivot to video” has been notoriously challenging for most publishers, they can’t exactly give up on video. In fact, BuzzFeed has been ramping up production with a new editing tool called Vidder.

Vidder was created by Senior Product Designer Elaine Dunlap and Senior Software Engineer Joseph Bergen. Dunlap recalled talking to Bergen more than a year ago and pointing out that while BuzzFeed is known for building its own publishing tools, “there wasn’t really the same opinionated software solution” for creating videos.

So they decided to build a video editing product that could be used by anyone, not just experienced producers and editors. Dunlap said the initial goal was to “demystify video production.”

“We basically started out with this hypothesis that if we gave a very simple tool to these editors who are constantly creating very funny, interesting things, they would really be able to fly,” Bergen added.

Fast forward to 2018 and BuzzFeed says Vidder is being used by 40 or 50 team members to create 200 videos each month, with 800 videos created in all since October. Almost none of BuzzFeed’s Vidder users are full-time video producers, and most of them had little to no experience with professional video editing software.

For example, Kayla Yandoli was a member of BuzzFeed’s social team (she’s since transferred to the video team) when she created this compilation of “shady” insults from The Golden Girls last fall. With 1.1 million shares, it was one of Vidder’s early success stories, and Yandoli estimated that it took her only an hour and a half to edit.

We moved even faster when the Vidder team demonstrated the product for me last week. We started with a basic template, then customized it by uploading one or two video clips, typing in captions and subtitles, adding emojis, and we had a perfectly serviceable video ready to go in just a few minutes.

The experience had very little in common with the hours I’ve spent fiddling with timelines in Final Cut. It also benefits from being entirely web-based, with no software download needed.

The key to Vidder is simplicity. As Product Manager Chris Johanesen put it, “We’re not trying to recreate Adobe Premiere.” There are teams at BuzzFeed creating more in-depth, highly produced videos, and Vidder isn’t built for them. Instead, it might be used by an editor like Yandoli who wants to quickly translate a regular BuzzFeed post into a video for Facebook or Instagram.

“There was a really big boom with Facebook videos around pop culture, animals, babies and stuff,” Yandoli recalled. “People on my team were interested in creating videos, but everyone couldn’t download Premiere. We were yearning to just find an accessible tool so that we could create things for our social platforms.”

And while you might think that Vidder has become less relevant with recent Facebook algorithm changes, Johanesen said the tool allows BuzzFeed to continue experimenting.

“Ever since the big Facebook algorithm changes that happened, our social strategy is less about longer videos and more about making things that will engage communities and conversations,” Johanesen said. “Vidder has helped teams move a little bit faster than might have been possible with other tools. I don’t know that we’ve cracked it, but it’s helping us make progress.”

BuzzFeed is also using Vidder to adapt videos for different platforms, like creating a shorter video for Instagram or compiling several short videos into a longer cut for YouTube. The tool is also being used by international teams who might quickly create a localized version when they see that a BuzzFeed U.S. video is doing well. In fact, BuzzFeed says one international editor was able to “clone” eight Vidder videos in an hour.

Usage is spreading beyond social media teams, with the sales team potentially using it to create “BuzzCuts” for advertisers. And of course Johanesen and his team are going to continue working on the product; for example, they have plans to connect it to a library of licensed content.
