TC

Auto Added by WPeMatico

“Whether for AR or robots, anytime you have software interacting with the world, it needs a 3D model of the globe. We think that map will look a lot more like the decentralized internet than a version of Apple Maps or Google Maps.” That’s the idea behind new startup Fantasmo, according to co-founder Jameson Detweiler. Coming out of stealth today, Fantasmo wants to let any developer contribute to and draw from a sub-centimeter accuracy map for robot navigation or anchoring AR experiences.

Fantasmo plans to launch a free Camera Positioning Standard (CPS) that developers can use to collect and organize 3D mapping data. The startup will charge for commercial access and premium features in its TerraOS, an open-sourced operating system that helps property owners keep their maps up to date and supply them for use by robots, AR and other software equipped with Fantasmo’s SDK.

With $2 million in funding led by TenOneTen Ventures, Fantasmo is now accepting developers and property owners to its private beta.

Directly competing with Google’s own Visual Positioning System is an audacious move. Fantasmo is betting that private property owners won’t want big corporations snooping around to map their indoor spaces, and instead will want to retain control of this data so they can dictate how it’s used. With Fantasmo, they’ll be able to map spaces themselves and choose where robots can roam or if the next Pokémon GO can be played there.

“Only Apple, Google, and HERE Maps want this centralized. If this data sits on one of the big tech companies’ servers, they could basically spy on anyone at any time,” says Detweiler. The prospect gets scarier when you imagine everyone wearing camera-equipped AR glasses in the future. “The AR cloud on a central server is Big Brother. It’s the end of privacy.”

Detweiler and his co-founder Dr. Ryan Measel first had the spark for Fantasmo as best friends at Drexel University. “We need to build Pokémon in real life! That was the genesis of the company,” says Detweiler. In the meantime he founded and sold LaunchRock, a 500 Startups company for creating “Coming Soon” sign-up pages for internet services.

After Measel finished his PhD, the pair started Fantasmo Studios to build augmented reality games like Trash Collectors From Space, which they took through the Techstars accelerator in 2015. “Trash Collectors was the first time we actually created a spatial map and used that to sync multiple people’s precise position up,” says Detweiler. But while building the infrastructure tools to power the game, they realized there was a much bigger opportunity to build the underlying maps for everyone’s games. Now the Santa Monica-based Fantasmo has 11 employees.

“It’s the internet of the real world,” says Detweiler. Fantasmo now collects geo-referenced photos, scans them for identifying features like walls and objects, and imports them into its point cloud model. Apps and robots equipped with the Fantasmo SDK can then pull in the spatial map for a specific location that’s more accurate than federally run GPS. That lets them peg AR objects to precise spots in your environment while making sure robots don’t run into things.

Fantasmo identifies objects in geo-referenced photos to build a 3D model of the world

“I think this is the most important piece of infrastructure to be built during the next decade,” Detweiler declares. That potential attracted funding from TenOneTen, Freestyle Capital, LDV, NoName Ventures, Locke Mountain Ventures and some angel investors. But it’s also attracted competitors like Escher Reality, which was acquired by Pokémon GO parent company Niantic, and Ubiquity6, which has investment from top-tier VCs like Kleiner Perkins and First Round.

Google is the biggest threat, though, with its industry-leading traditional Google Maps, its experience with indoor mapping through Tango, its new VPS initiative and its near-limitless resources. Just yesterday, Google showed off an AR fox in Google Maps that you can follow for walking directions.

Fantasmo is hoping that Google’s size works against it. The startup sees a path to victory through interoperability and privacy. The big corporations want to control and preference their own platforms’ access to maps while owning the data about private property. Fantasmo wants to empower property owners to oversee that data and decide what happens to it. Measel concludes, “The world would be worse off if GPS was proprietary. The next evolution shouldn’t be any different.”

Powered by WPeMatico

What could be more perfect than moving the inaugural championship finals for an esports league from its Los Angeles home to Brooklyn?

For Overwatch League, the esports conference created by fiat from Activision Blizzard, the move is the first step in its plans for housing esports teams in cities around the country.

Heading from sunny Burbank, Calif. to the hipster heartland of Brooklyn conjures up echoes of the famed Dodger franchise move (in reverse) while tapping into one of the few other markets in the U.S. that might rival LA for esports popularity.

When the Overwatch regular season ends on Sunday, June 17th, six teams will face off in the league’s first post-season playoffs. Those games are set to begin July 11th and will take place in Burbank at the company’s “Blizzard Arena Los Angeles.”

After the playoffs, the final teams will fly to New York to compete for the largest share of a $1.4 million prize pool and the first Overwatch League trophy. The games are slated to begin Friday, July 27th and continue on the 28th.

“The Overwatch League Grand Finals will be an epic experience for fans and viewers,” said Overwatch League commissioner Nate Nanzer in a statement. “We want this to be the pinnacle of esports, and holding it at a world-class venue like Barclays Center, in a global capital like New York, will help us celebrate not only the league’s two best teams, but the fans, partners, and players who have joined us on this incredible journey.”

Overwatch is taking a geographic approach to its franchises with teams sponsored by cities in the U.S. and major esports hubs around the world like London, Shanghai and Seoul.

Eventually, the league is looking to set up stadiums in locations outside of Burbank, with league play requiring teams to travel, as in a traditional sports league.

The move to Brooklyn could be a test of how well the Overwatch experience travels and a precursor to the league starting to take its show on the road in earnest.

Tickets go on sale on Friday, May 18th, at 10 a.m. EDT, and can be bought on ticketmaster.com and barclayscenter.com, while tickets to the first two rounds of the Overwatch League postseason at Blizzard Arena Los Angeles go on sale Thursday, May 10th, at 9 a.m. PDT via AXS.com.

StubHub is best known as a destination for buying and selling event tickets. The company operates in 48 countries and sells a ticket every 1.3 seconds. But the company wants to go beyond that and provide its users with a far more comprehensive set of services around entertainment. To do that, it’s working on changing its development culture and infrastructure to become more nimble. As the company announced today, it’s betting on Google Cloud and Pivotal Cloud Foundry as the infrastructure for this move.

StubHub CTO Matt Swann told me that the idea behind going with Pivotal — and the twelve-factor app model that entails — is to help the company accelerate its journey and give it an option to run new apps in both an on-premise and cloud environment.

“We’re coming from a place where we are largely on premise,” said Swann. “Our aim is to become increasingly agile — where we are going to focus on building balanced and focused teams with a global mindset.” To do that, Swann said, the team decided to go with the best platforms to enable that and that “remove the muck that comes with how developers work today.”

As for Google, Swann noted that this was an easy decision because the team wanted to leverage that company’s infrastructure and machine learning tools like Cloud ML. “We are aiming to build some of the most powerful AI systems focused on this space so we can be ahead of our customers,” he said. Given the number of users, StubHub sits on top of a lot of data, and that’s exactly what you need when you want to build AI-powered services. What exactly these will look like remains to be seen, though Swann has only been on the job for six months. We can probably expect to see more from the company in this space in the coming months.

“Digital transformation is on the mind of every technology leader, especially in industries requiring the capability to rapidly respond to changing consumer expectations,” said Bill Cook, President of Pivotal. “To adapt, enterprises need to bring together the best of modern developer environments with software-driven customer experiences designed to drive richer engagement.”

StubHub has already spun up its new development environment and plans to launch all new apps on this new infrastructure. Swann acknowledged that the company won’t be switching all of its workloads over to the new setup soon, but he does expect that the company will hit a tipping point in the next year or so.

He also noted that this overall transformation means that the company will look beyond its own walls and toward working with more third-party APIs, especially with regard to transportation services and merchants that offer services around events.

Throughout our conversation, Swann also stressed that this isn’t a technology change for the sake of it.

Intel Capital, the investment arm of the computer processor giant, is today announcing $72 million in funding for the 12 newest startups to enter its portfolio, bringing the total invested so far this year to $115 million. Announced at the company’s global summit currently underway in southern California, investments in this latest tranche cover artificial intelligence, Internet of Things, cloud services, and silicon. A detailed list is below.

Other notable news from the event included a new deal between the NBA and Intel Capital to work on more collaborations in delivering sports content, an area where Intel has already been working for years; and the news that Intel has now invested $125 million in startups headed by minorities, women and other under-represented groups as part of its Diversity Initiative. The mark was reached 2.5 years ahead of schedule, it said.

The range of categories of the startups that Intel is investing in is a mark of how the company continues to back ideas that it views as central to its future business — and specifically where it hopes its processors will play a central role, such as AI, IoT and cloud. Investing in silicon startups, meanwhile, is a sign of how Intel is also focusing on businesses that are working in an area that’s close to the company’s own DNA.

It hasn’t been a completely smooth road. Intel became a huge presence in the world of IT and in the early rise of desktop and laptop computers many years ago with its advances in PC processors, but its fortunes changed with the shift to mobile, which saw the emergence of a new wave of chip companies and designs for smaller and faster devices. Mobile is an area that Intel itself has acknowledged it largely missed out on.

Later years have seen still other issues hit the company, such as the Spectre security flaw (fixes for which are still being rolled out). And some of the business lines where Intel was hoping to make a mark have not panned out as it hoped. Just last month, Intel shut down development of its Vaunt smart glasses and reportedly the entirety of its new devices group.

The investments that Intel Capital makes, in contrast, are a fresher and more optimistic aspect of the company’s operations: they represent hopes and possibilities that still have everything to play for. And given that, on balance, things like AI and cloud services still have a long way to go before being truly ubiquitous, there remains a lot of opportunity for Intel.

“These innovative companies reflect Intel’s strategic focus as a data leader,” said Wendell Brooks, Intel senior vice president and president of Intel Capital, in a statement. “They’re helping shape the future of artificial intelligence, the future of the cloud and the Internet of Things, and the future of silicon. These are critical areas of technology as the world becomes increasingly connected and smart.”

Since 1991, Intel Capital has put $12.3 billion into 1,530 companies covering everything from autonomous driving to virtual reality and e-commerce, and it says that more than 660 of these startups have gone public or been acquired. Intel has organised its investment announcements thematically before: last October, it announced $60 million in 15 big data startups.

Here’s a rundown of the investments getting announced today. Unless otherwise noted, the startups are based around Silicon Valley:

Avaamo is a deep learning startup that builds conversational interfaces based on neural networks to address problems in enterprises — part of the wave of startups that are focusing on non-consumer conversational AI solutions.

Fictiv has built a “virtual manufacturing platform” to design, develop and deliver physical products, linking companies that want to build products with manufacturers who can help them. This is a problem that has foxed many a startup (notable failures have included Factorli out of Las Vegas), and it will be interesting to see if newer advances make the challenges here surmountable.

Gamalon from Cambridge, MA, says it has built a machine learning platform that “teaches computers actual ideas.” Its so-called Idea Learning technology is able to order free-form data like chat transcripts and surveys into something that a computer can read, making the data more actionable.

Reconova out of Xiamen, China is focusing on problems in visual perception in areas like retail, smart home and intelligent security.

Syntiant is an Irvine, CA-based AI semiconductor company that is working on ways of placing neural decision making on chips themselves to speed up processing and reduce battery consumption — a key challenge as computing devices move more information to the cloud and keep getting smaller. Target devices include mobile phones, wearable devices, smart sensors and drones.

Alauda out of China is a container-based cloud services provider focusing on enterprise platform-as-a-service solutions. “Alauda serves organizations undergoing digital transformation across a number of industries, including financial services, manufacturing, aviation, energy and automotive,” Intel said.

CloudGenix is a software-defined wide-area network startup, addressing an important area as more businesses take their networks and data into the cloud and look for cost savings. Intel says its customers use its broadband solutions to run unified communications and data center applications to remote offices, cutting costs by 70 percent and seeing big speed and reliability improvements.

Espressif Systems, also based in China, is a fabless semiconductor company, with its system-on-a-chip focused on IoT solutions.

VenueNext is a “smart venue” platform to deliver various services to visitors’ smartphones, providing analytics and more to the facility providing the services. Hospitals, sports stadiums and others are among its customers.

Lyncean Technologies is nearly 18 years old (founded in 2001) and has been working on something called Compact Light Source (CLS), which Intel describes as a miniature synchrotron X-ray source that can be used for either extremely detailed large X-rays or very microscopic ones. This has both medical and security applications, making it a very timely business.

Movellus “develops semiconductor technologies that enable digital tools to automatically create and implement functionality previously achievable only with custom analog design.” Its main focus is creating more efficient approaches to designing analog circuits for systems on chips, needed for AI and other applications.

SiFive, founded by the inventors of RISC-V and led by a team of industry veterans, makes “market-ready processor core IP based on the RISC-V instruction set architecture.”

Google unveiled some of the new features in the next version of Android at its developer conference. One feature looked particularly familiar. Android P will get new navigation gestures to switch between apps. And it works just like the iPhone X.

“As part of Android P, we’re introducing a new system navigation that we’ve been working on for more than a year now,” VP of Android Engineering Dave Burke said. “And the new design makes Android multitasking more approachable and easier to understand.”

While Google has probably been working on a new multitasking screen for a year, it’s hard to believe that the company didn’t copy Apple. The iPhone X was unveiled in September 2017.

On Android P, the traditional home, back and multitasking buttons are gone. There’s a single pill-shaped button at the center of the screen. If you swipe up from this button, you get a new multitasking view with your most recent apps. You can swipe left and right and select the app you’re looking for.

If you swipe up one more time, you get the app drawer with suggested apps at the very top. At any time, you can tap on the button to go back to the home screen. These gestures also work when you’re using an app. Android P adds a back button in the bottom left corner if you’re in an app.

But the most shameless inspiration is the left and right gestures. If you swipe left and right on the pill-shaped button, you can switch to the next app, exactly like on the iPhone X. You can scrub through multiple apps. As soon as you release your finger, you’ll jump to the selected app.

You can get Android P beta for a handful of devices starting today. End users will get the new version in the coming months.

It’s hard to blame Google for this one, as the iPhone X gestures are incredibly elegant and efficient (and yes, they look a lot like the Palm Pre’s). Using a phone that runs the current version of Android after using the iPhone X feels much slower, as it requires multiple taps to switch to the most recent app.

Apple moved the needle and it’s clear that all smartphones should work like the iPhone X. But Google still deserves to be called out.

Google today announced at its I/O developer conference a new suite of tools for its new Android P operating system that will help users better manage their screen time, including a more robust Do Not Disturb mode and ways to track their app usage.

The biggest change is introducing a dashboard to Android P that tracks all of your Android usage, labeled under the “digital wellbeing” banner. Users can see how many times they’ve unlocked their phones, how many notifications they get, and how long they’ve spent on apps, for example. Developers can also add in ways to get more information on that app usage. YouTube, for example, will show total watch time across all devices in addition to just Android devices.

Google says it has designed all of this to promote what developers call “meaningful engagement,” trying to reduce the kind of idle screen time that might not necessarily be healthy — like sitting on your phone before you go to bed. Here’s a quick rundown of some of the other big changes:

The launch had been previously reported by The Washington Post, and arrives at a time when there are increasing concerns about the negative side of technology and, specifically, its addictive nature. The company already offers tools for parents who want to manage children’s devices, via Family Link – software for controlling access to apps, setting screen time limits, and configuring device bedtimes, among other things. Amazon also offers a robust set of parental controls for its Fire tablets and Apple is expected to launch an expanded set of parental controls for iOS later this year.

As the war for creating customized AI hardware heats up, Google announced at Google I/O 2018 that it is rolling out its third generation of silicon, the Tensor Processing Unit 3.0.

Google CEO Sundar Pichai said the new TPU is eight times more powerful than last year’s per pod, with up to 100 petaflops in performance. Google joins pretty much every other major company in looking to create custom silicon in order to handle its machine learning operations. And while multiple frameworks for developing machine learning tools have emerged, including PyTorch and Caffe2, this one is optimized for Google’s TensorFlow. Google is looking to make Google Cloud an omnipresent platform at the scale of Amazon, and offering better machine learning tools is quickly becoming table stakes.

Amazon and Facebook are both working on their own kind of custom silicon. Facebook’s hardware is optimized for its Caffe2 framework, which is designed to handle the massive information graphs it has on its users. You can think about it as taking everything Facebook knows about you — your birthday, your friend graph, and everything that goes into the news feed algorithm — fed into a complex machine learning framework that works best for its own operations. That, in the end, may have ended up requiring a customized approach to hardware. We know less about Amazon’s goals here, but it also wants to own the cloud infrastructure ecosystem with AWS.

All this has also spun up an increasingly large and well-funded startup ecosystem looking to create a customized piece of hardware targeted toward machine learning. There are startups like Cerebras Systems, SambaNova Systems, and Mythic, with a half dozen or so beyond that as well (not even including the activity in China). Each is looking to exploit a similar niche, which is to find a way to outmaneuver Nvidia on price or performance for machine learning tasks. Most of those startups have raised more than $30 million.

Google unveiled its second-generation TPU processor at I/O last year, so it wasn’t a huge surprise that we’d see another one this year. We’d heard from sources for weeks that it was coming, and that the company is already hard at work figuring out what comes next. Google at the time touted performance, though the point of all these tools is to make it a little easier and more palatable in the first place.

Pichai also said this is the first time the company has had to include liquid cooling in its data centers. Heat dissipation is increasingly a difficult problem for companies looking to create customized hardware for machine learning.

There are a lot of questions around building custom silicon, however. It may be that developers don’t need a super-efficient piece of silicon when an Nvidia card that’s a few years old can do the trick. But data sets are getting increasingly larger, and having the biggest and best data set is what creates a defensibility for any company these days. Just the prospect of making it easier and cheaper as companies scale may be enough to get them to adopt something like GCP.

Intel, too, is looking to get in here with its own products. Intel has been beating the drum on FPGAs as well, which are designed to be more modular and flexible as the needs of machine learning change over time. But again, the knock there is price and difficulty, as programming FPGAs is a hard problem in which few engineers have expertise. Microsoft is also betting on FPGAs, and just yesterday at its Build conference it unveiled what it’s calling Brainwave for its Azure cloud platform, which is increasingly a significant portion of its future potential.

Google more or less seems to want to own the entire stack of how we operate on the internet. It starts at the TPU, with TensorFlow layered on top of that. If it manages to succeed there, it gets more data, makes its tools and services faster and faster, and eventually reaches a point where its AI tools are so far ahead that they lock developers and users into its ecosystem. Google is at its heart an advertising business, but it’s gradually expanding into new business segments that all require robust data sets and operations to learn human behavior.

Now the challenge will be having the best pitch for developers to not only get them into GCP and other services, but also keep them locked into TensorFlow. But as Facebook increasingly looks to challenge that with alternate frameworks like PyTorch, there may be more difficulty than originally thought. Facebook unveiled a new version of PyTorch at its main annual conference, F8, just last month. We’ll have to see if Google is able to respond adequately to stay ahead, and that starts with a new generation of hardware.

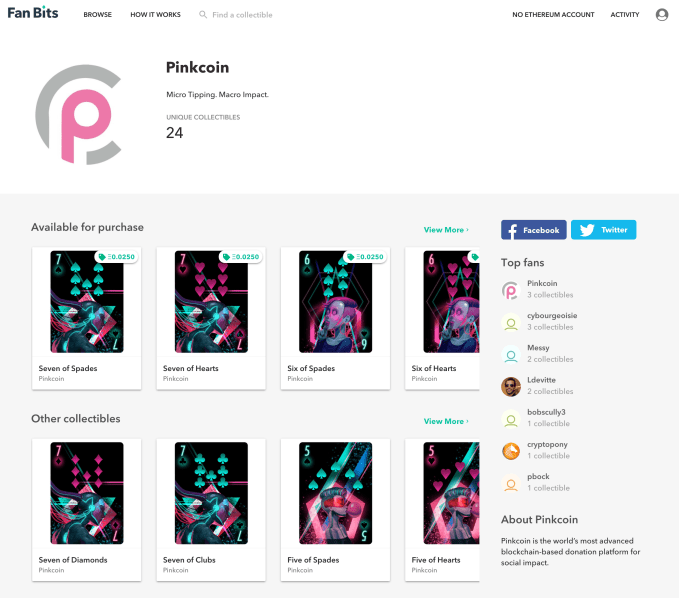

As we plunge into our baffling future, it is believed that, at some point, we will be trading in cryptographically secure kittens, monsters, and playing cards. While it is unclear why this will happen, Rare Bits and their new service, Fan Bits, is ready for the oncoming rush.

Co-founded by Dave Pekar, Amitt Mahajan and Danny Lee (who met after selling their gaming startups to Zynga) and Payom Dousti (formerly of fintech VC fund 1/0 Capital), the company trades in digital goods. Lee brought in a team of ex-Zynga and other digital platform creators to build a blockchain-based solution for buying and selling digital collectables. For example, on Rare Bits you can buy this monster and battle it against other monsters on the blockchain. Further, with their new platform called Fan Bits, you can buy actual collectables that are tied to the blockchain. For example, you can sell collectible cards and give some of the proceeds to charity. If the new owner resells those cards, then some of the resale price also goes to charity, an interesting if slightly intrusive use of smart contracts.

The team has raised $6 million in a Series A round. Fan Bits launches on May 17.

“To date, collectible content has only been created by developers for their own dapps – which I suppose could be considered our competition,” said Lee. “Fan Bits is the first to let anyone, especially people who are not technical, to create collectibles. It will create an abundance of supply that didn’t exist before.”

“We started Rare Bits to let people buy, sell, and discover crypto assets. We believe that assets on the blockchain mark a fundamental shift in how we own and exchange property. Our overall mission is to enable the worldwide exchange of online and offline property on the blockchain,” he said.

Lee sees this as a Trojan horse of sorts, allowing non-tech-savvy creators to sell digital art and designs online without having to understand the vagaries of blockchain.

“For creators, it’s a DIY platform to turn their content into unique collectibles and earn Ethereum on every sale,” he said. “For the first time, a creator can go from idea to published cryptocollectible on a live marketplace without having to have any technical knowledge.”

Given the popularity of other digital collectables – including in-game gear for many multi-player games – things look like they’re going to get pretty interesting in the next few years.

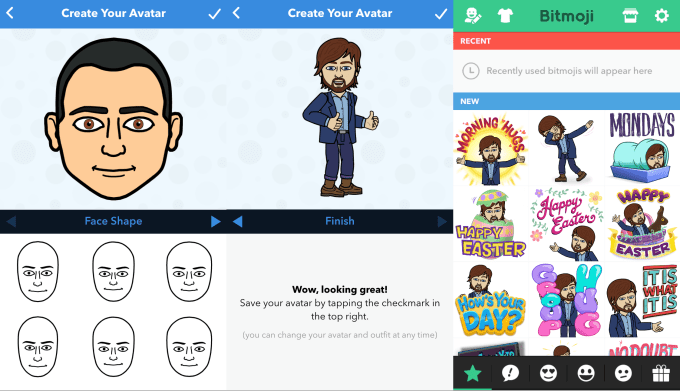

Hidden inside the code of Facebook’s Android app is an unreleased feature called Facebook Avatars that lets people build personalized, illustrated versions of themselves for use as stickers in Messenger and comments. It will let users customize their avatar to depict their skin color, hair style and facial features. Facebook Avatars is essentially Facebook’s version of Snapchat’s acquisition, Bitmoji, which has spent years in the top-10 apps chart.

Back in October I wrote that “Facebook seriously needs its own Bitmoji,” and it seems the company agrees. Facebook has become the identity layer for the internet, allowing you to bring your personal info and social graph to other services. But as the world moves toward visual communication, a name or static profile pic aren’t enough to represent us and our breadth of emotions. Avatars hold the answer, as they can be contorted to convey our reactions to all sorts of different situations when we’re too busy or camera-shy to take a photo.

The screenshots come courtesy of eagle-eyed developer Jane Manchun Wong, who found the Avatars in the Facebook for Android application package, a set of files that often contain features that are unreleased or in testing. Her digging also contributed to TechCrunch’s reports about Instagram’s music stickers and Twitter’s unlaunched encrypted DMs.

Facebook confirmed it’s building Avatars, telling me, “We’re looking into more ways to help people express themselves on Facebook.” However, the feature is still early in development and Facebook isn’t sure when it will start publicly testing.

In the onboarding flow for the feature, Facebook explains that “Your Facebook Avatar is a whole new way to express yourself on Facebook. Leave expressive comments with personalized stickers. Use your new avatar stickers in your Messenger group and private chats.” The Avatars should look like the images on the far right of these screenshot tests. You can imagine Facebook creating an updating reel of stickers showing your avatar in happy, sad, confused, angry, bored or excited scenes to fit your mood.

Currently it’s unclear whether you’ll have to configure your Avatar from a blank starter face, or whether Facebook will use machine vision and artificial intelligence to create one based on your photos. The latter is how the Facebook Spaces VR avatars (previewed in April 2017) are automatically generated.

Facebook shows off its 3D VR avatars at F8 2018. The new Facebook Avatars are 2D and can be used in messaging and comments.

Using AI to start with a decent lookalike of you could entice users to try Avatars and streamline the creation process so you just have to make small corrections. However, the AI could creep people out, make people angry if it misrepresents them or generate monstrous visages no one wants to see. Given Facebook’s recent privacy scandals, I’d imagine it would play it conservatively with Avatars and just ask users to build them from scratch. If Avatars grow popular and people are eager to use them, it could always introduce auto-generation from your photos later.

Facebook has spent at least three years trying to figure out avatars for VR. What started as generic blue heads evolved to take on basic human characteristics, real skin tones and more accurate facial features, and are now getting quite lifelike. You can see that progression up top. Last week at F8, Facebook revealed that it’s developing a way to use facial tracking sensors to map real-time expressions onto a photo-realistic avatar of a user so they can look like themselves inside VR, but without the headset on.

But as long as Facebook’s Avatars are trapped in VR, they’re missing most of their potential.

Bitmoji’s parent company Bitstrips launched in 2008, and while its comic strip creator was cool, it was the personalized emoji avatar feature that was most exciting. Snapchat acquired Bitstrips for a mere $64.2 million in early 2016, but once it integrated Bitmoji into its chat feature as stickers, the app took off. It’s often risen higher than Snapchat itself, and even Facebook’s ubiquitous products on the App Store charts, and was the No. 1 free iOS app as recently as February. Now Snapchat lets you use your Bitmoji avatar as a profile pic, online status indicator in message threads, as 2D stickers and as 3D characters that move around in your Snaps.

It’s actually surprising that Facebook has waited this long to clone Bitmoji, given how popular Instagram Stories and its other copies of Snapchat features have become. Facebook comment reels and Messenger threads could get a lot more emotive, personal and fun when the company eventually launches its own Avatars.

Mark Zuckerberg has repeatedly said that visual communication is replacing text, but that’s forced users to either use generic emoji out of convenience or deal with the chore and self-consciousness of shooting a quick photo or video. Especially in Stories, which will soon surpass feeds as the main way we share social media, people need a quick way to convey their identity and emotion. Avatars let your identity scale to whatever feeling you want to transmit without the complications of the real world.

For more on the potential of Facebook Avatars, read our piece calling for their creation:


Going to the dentist can be anxiety-inducing. Unfortunately, it was no different for me last week when I went to discuss Uniform Teeth’s recent $4 million seed funding round from Lerer Hippeau, Refactor Capital, Founders Fund and Slow Ventures.

Uniform Teeth is a clear teeth aligner startup that competes with the likes of Invisalign and SmileDirectClub. The startup takes a One Medical-like approach in that it provides real, licensed orthodontists to see you and treat your bite.

“For us, we’re really focused on transforming the orthodontic experience,” Uniform Teeth CEO Meghan Jewitt told me at the startup’s flagship dental office in San Francisco. “There are a lot of health care companies out there that are taking areas that aren’t very customer-centric.”

Jewitt, who spent a couple of years at One Medical as director of operations, pointed to One Medical, Oscar Insurance and 23andMe as examples of companies taking a very customer-centric approach.

“We are really interested in doing the same for the orthodontics space,” she said.

Ahead of the first visit, patients use the Uniform app to take photos of their teeth and their bite. During the initial visit, patients receive a panoramic scan and 3D imaging to confirm what type of work needs to be done.

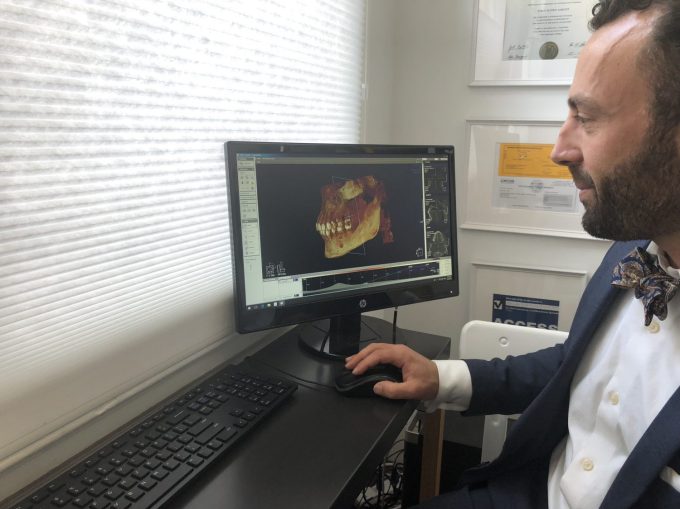

Last week during my visit, Jewitt and Uniform Teeth co-founder Dr. Kjeld Aamodt showed me the technology Uniform uses for its patient evaluations.

In the GIF above, you can see me receiving a 3D panoramic X-ray. The process took about 10 seconds, and the radiation exposure is about the same as on a flight from San Francisco to Los Angeles, Dr. Aamodt said.

“With that information, we’re able to see the health of your roots, your teeth, the bone, your jaw joints, check for anything that could get worse during treatment,” Dr. Aamodt said.

Below, you can see the 3D scan.

Next came a look in between the teeth. From here, the idea is to get a much more holistic view, Dr. Aamodt said. This is where things got interesting.

If you look at the bottom left of the photo, under my back bottom tooth, you can see a dark circle below the tooth. Dr. Aamodt gently pointed that out to me.

“That tells me there’s bacteria living inside of your jaw,” he explained. “A lot of people have this. It’s pretty common so don’t beat yourself up for it.”

This is when he told me I’d likely need to get a root canal to get rid of it. Mild panic ensued.

Dr. Aamodt went on to explain that, if I were a patient of his looking to get my teeth straightened, he would recommend that I get the root canal before any tooth movement. That’s because, he explained, moving teeth at that point could potentially make the infection worse.

“The concern about that is when we move a tooth with that, the infection will get worse and you could risk losing that tooth,” he told me.

Although I was freaking out internally, I continued to move ahead with the process. Next up was the 3D scan, which results in something fancy called a 3D Cone Beam Computed Tomography. This, Dr. Aamodt said, is what really sets Uniform Teeth apart in precision tooth movement.

This process takes the place of dental impressions, which are made by biting into a tray with gooey material. I didn’t feel like getting my bottom teeth scanned, but below is what the top looks like.

At this point Uniform Teeth would share its recommendations with the patient. My personal recommendation was to go see my dentist and, if I’m interested in straightening my teeth, come back once my roots are in a healthy enough state.

From there, I’d receive a custom treatment plan that combines the X-ray plus 3D scan to predict how my teeth will move. After receiving the clear aligners in a couple of weeks, I’d check in with Dr. Aamodt every week via the mobile app. If something were to come up, I could always set up an in-person appointment. Most people average about two to three visits in total, Jewitt said. All of that would add up to about $3,500.

Uniform Teeth requires in-office visits because 75 percent or more of cases require additional procedures. For example, some people need small, tooth-colored attachments bonded to their teeth so the clear aligners can grip them. Those attachments can help move teeth in a more advanced way, Dr. Aamodt said.

“If you don’t have these, then you can tip some teeth but you can’t do all of the things to help improve the bite, to create a really lasting, beautiful, healthy smile,” he explained.

Uniform Teeth currently treats patients in San Francisco, but intends to open additional offices nationwide next year. As the company expands, the plan is to bring on board more full-time orthodontists.

“Right now, we’re an employment-based model and we’d really like to continue that because it allows us to control the experience and deliver a really high-quality service,” Jewitt said.

A lot of companies say they care about the customer when, in reality, they just care about making money. But I genuinely believe Uniform Teeth does care. After I left with my tail between my legs that day, I called my dentist to set up an appointment. The following day, my dentist confirmed what Dr. Aamodt found and proceeded to set me up to get a root canal. A few days later, Dr. Aamodt checked in with me via the mobile app to ask me how I was doing and to make sure I was getting it treated. I was pleased to let him know, as Olivia Pope likes to say, “It’s handled.”
