TC

Auto Added by WPeMatico


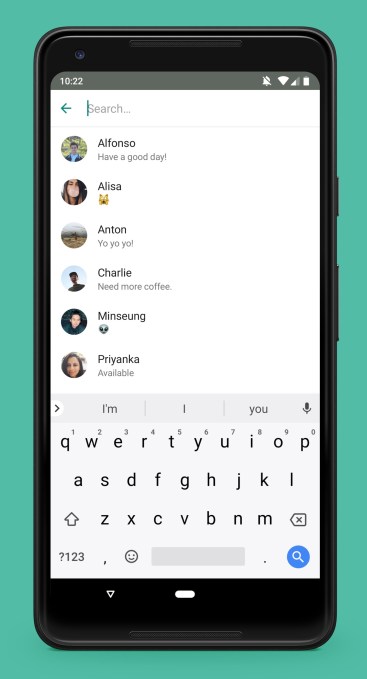

Facebook just installed its VP of Internet.org as the new head of WhatsApp after its CEO Jan Koum left the company. And now Facebook is extending to WhatsApp its mission to get people into “meaningful” groups. Today, WhatsApp launched a slew of new features for Groups on iOS and Android that let admins set a description for their community and decide who can change a group’s settings. Meanwhile, users will be able to get a Group catch up that shows messages they were mentioned in, and search for people in the group.

WhatsApp’s new Group descriptions

WhatsApp Group participant search

Group improvements will help WhatsApp better compete with Telegram, which has recently emerged as an insanely popular platform for chat groups, especially around cryptocurrency. Telegram has plenty of admin controls of its own, but the two apps will be competing over who can make it easiest to digest these fast-moving chat forums.

“These features are based on user requests. We develop the product based on what our users want and need,” a WhatsApp spokesperson told me when asked why it’s making this update. “There are also people coming together in groups on WhatsApp like new parents looking for support, students organizing study sessions, and even city leaders coordinating relief efforts after natural disasters.”

Facebook is on a quest to get 1 billion users into “meaningful” Groups and recently said it now has hit the 200 million user milestone. Groups could help people strengthen their ties with their city or niche interests, which can make them feel less alone.

With Group descriptions, admins can explain the purpose and rules of a group. They show up when people check out the group and appear atop the chat window when they join. New admin controls let them restrict who is allowed to alter a group’s subject, icon, and description. WhatsApp is also making it tougher to re-add someone to a group they left so you can’t “Group-add-spam people”. Together, these could make sure people find relevant groups, naturally acclimate to their culture, and don’t troll everyone.

As for users, the new Group catch up feature offers a new @ button in the bottom right of the chat window that, when tapped, surfaces all your replies and mentions since you last checked. And if you want to find someone specific in the Group, the new participant search on the Info page could let you turn a group chat into a private convo with someone you meet.

WhatsApp Group catch up

Now that WhatsApp has a stunning 1.5 billion users compared to 200 million on Telegram, its next phase of growth may come from deepening engagement instead of just adding more accounts. Many people already do most of their one-on-one chatting with friends on WhatsApp, but Groups could invite tons of time spent as users participate in communities of strangers around their interests.

Powered by WPeMatico

Just days after Google announced that it would acquire Velostrata to help customers migrate more of their operations into cloud environments, HPE under its new CEO Antonio Neri is also upping its game in the same department. Today the company announced that it would acquire Plexxi, a specialist in software-defined data center solutions, aimed at optimising application performance for enterprises that are using hybrid cloud environments.

A spokesperson confirmed that the companies are not currently revealing the terms of the deal, which is expected to close in the third quarter of 2018 (ending July 31). Plexxi has 100 employees and all will be joining HPE, a spokesperson said. A role for Rich Napolitano, Plexxi’s CEO who joined after having been the president of EMC, “is still being finalized.”

For some context and a possible price range for this deal, Plexxi, founded in 2010, was last valued at around $267 million as of its last financing round, more than two years ago in January 2016, according to PitchBook. And the previous cloud infrastructure acquisition HPE made, of SimpliVity over a year ago, was for $650 million. Plexxi’s investors included GV (formerly Google Ventures), Lightspeed Venture Partners, Matrix and more.

Cloud services — propelled by the rise of mobile hardware with less on-device storage, advances at major platforms like AWS, Microsoft’s Azure and Google, and the rise of companies like Box to help manage cloud services — have exploded in their ubiquity as a way to deliver and store software and data among enterprises.

But many organizations are, in fact, not throwing all of their eggs into the clouds, so to speak, and are taking a more gradual path to migrate some or all of their IP out of on-premises-based solutions.

This, in turn, has given rise to a second market for hybrid cloud services, deployments that are more flexible and allow for a mix of legacy and on-premises hardware alongside more modern distributed architectures. HPE and Google are not the only ones building solutions to address that market: Microsoft, Dell, Accenture, NTT, and many more have also made large investments to cover these different bases.

And that has proven popular not just with vendors — but with enterprises as well. BCC today released a report that estimates hybrid cloud services could reach a market size of $98.8 billion globally by 2022.

Ric Lewis, the VP & GM of HPE’s software-defined and cloud group, said that the plan will be to integrate Plexxi into HPE’s existing products in two areas.

The first of these is in the company’s hyperconverged solutions business, where HPE’s acquisition of SimpliVity also sits. “Plexxi will enable us to deliver the industry’s only hyperconverged offering that incorporates compute, storage and data fabric networking into a single solution, with a single management interface and support,” he wrote in a blog post.

The second of these will be to bring Plexxi’s HCN tech to HPE Synergy and its composable infrastructure business. This, Lewis explained, is “a new category of infrastructure that delivers fluid pools of storage and compute resources that can be composed and recomposed as business needs dictate.” Plexxi will enable this approach to extend also to rack-based solutions in private clouds.

“Plexxi and HPE’s values and vision for the future are closely aligned,” Plexxi CEO Rich Napolitano wrote in his own announcement. “We share the same mission, to help the enterprise effectively leverage modern IT to accelerate their business in the digital age.”

While the two wait for the deal to close, it seems to be business as usual for Plexxi. Just earlier today, the company announced an expansion of its integrations with VMware.

Updated with more detail from Plexxi.


I’ve spent a good chunk of my life piecing together various LEGO projects… but even the craziest stuff I’ve built pales in comparison to this. It’s a fully functioning pinball machine built entirely out of official LEGO parts, from the obstacles on the playfield, to the electronic brains behind the curtain, to the steel ball itself.

Creator Bre Burns calls her masterpiece “Benny’s Space Adventure,” theming the machine around LEGO’s classic ‘lil blue space man. It’s made up of more than 15,000 LEGO bricks, multiple Mindstorms NXT brains working in unison, steel castor balls borrowed from a Mindstorms kit, plus lights and motors repurposed from a bunch of other sets. Bre initially set out to build the project for exhibition at the LEGO fan conference BrickCon in October of last year, and it’s just grown and grown ever since.

Bre told the LEGO-enthusiast site Brothers Brick that she’s spent somewhere between 200 and 300 hours so far on this project. Want to know more? They’ve got a great breakdown of the entire project right over here.


A lot of money — about $140 billion — is lost every year in the U.S. healthcare system thanks to inefficient management of basic internal operations, according to a study from the Journal of the American Medical Association.

While there are many factors that contribute to the woeful state of healthcare in the U.S., with greed chief among them, the 2012 study points to one area where hospitals have nothing to lose and literally billions to gain by improving their patient flows.

The problem, according to executives and investors in the startup Qventus, is that hospitals can’t simply invest in new infrastructure to streamline those processes: any fix has to work with technology systems that are in some cases decades old, and with an already overtaxed professional staff.

That’s why the founders of Qventus decided to develop a software-based service that throws out dashboards and analytics tools and replaces them with a machine learning-enhanced series of prescriptions for hospital staff to follow when presented with certain conditions.

Qventus’ co-founder and chief executive Mudit Garg started working with hospitals 10 years ago and found the experience “eye-opening.”

“There are lots and lots of people who really really care about giving the best care to every patient, but it depends on a heroic effort from all of those individuals,” Garg said. “It depended on some amazing manager going above and beyond and doing some diving catch to make things work.”

As a software engineer, Garg thought there was a simple solution to the problem — applying data to make processes run more effectively.

In 2012 the company started out with a series of dashboards and data management tools to provide visibility to the hospital administrators and operators about what was happening in their healthcare facilities. But, as Garg soon discovered, when doctors and nurses get busy, they don’t love a dashboard.

From the basic analytics, Garg and his team worked to make the data more predictive — based on historical data about patient flows, the system would send out notifications about how many patients a facility could expect to come in at almost any time of day.

But even the predictive information wasn’t useful enough for the hospitals to act on, so Garg and company went back to the drawing board.

What they finally came up with was a solution that used the data and predictive capabilities to start suggesting potential recipes for dealing with situations in hospitals. Rather than saying that a certain number of patients were likely to be admitted to the hospital, the software suggests actions for addressing the likely scenarios that could occur.

For instance, if there are certain times when the hospital is getting busier, nurses can start discharging patients in anticipation of the need for new capacity in an ICU, Garg said.
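The shift from prediction to prescription can be illustrated with a toy rule. This is only a sketch under stated assumptions: the `UnitForecast` fields, the `suggest_action` function and the wording of the suggestions are hypothetical and are not taken from Qventus’ product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UnitForecast:
    """Hypothetical forecast for one hospital unit (all fields are assumptions)."""
    unit: str
    free_beds: int
    expected_admissions: int
    patients_ready_for_discharge: int


def suggest_action(forecast: UnitForecast) -> Optional[str]:
    """Turn a forecast into a suggested action instead of a raw number."""
    shortfall = forecast.expected_admissions - forecast.free_beds
    if shortfall <= 0:
        # Enough capacity is expected, so there is nothing to prescribe.
        return None
    to_discharge = min(shortfall, forecast.patients_ready_for_discharge)
    if to_discharge == 0:
        return f"{forecast.unit}: no patients ready for discharge, escalate to bed management"
    return f"{forecast.unit}: start discharging {to_discharge} ready patients ahead of expected admissions"


if __name__ == "__main__":
    print(suggest_action(UnitForecast("ICU", 2, 5, 4)))
```

The point of the sketch is the return type: staff get an action to take, not a chart to interpret.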

Photo courtesy of Paul Burns

That product, some six years in the making, has garnered the attention of a number of top investors in the healthcare space. Mayfield Fund and Norwest Venture Partners led the company’s first round, and Qventus managed to snag a new $30 million round from return investors and new lead investor, Bessemer Venture Partners. Strategic backer New York Presbyterian Ventures, the investment arm of the famed New York hospital system, also participated.

So far, Qventus has raised $43 million for its service.

As a result of the deal, Stephen Kraus, a partner at Bessemer, will take a seat on the company’s board of directors.

“Hospitals are under tremendous pressure to increase efficiency, improve margins and enhance patient experience, all while reducing the burden on frontline teams, and they currently lack tools to use data to achieve operational productivity gains,” said Kraus, in a statement.

For Kraus, the application of artificial intelligence to operations is just as transformative for a healthcare system as its clinical use cases.

“We’ve been looking at this space broadly… AI and ML to improve healthcare… image interpretation, pathology slide interpretation… that’s all going to take a longer time because healthcare is slow to adapt,” said Kraus. “The barriers to adoption in healthcare is frankly the physicians themselves… the average primary care doc is seeing 12 to 20 patients a day… they barely want to adopt their [electronic medical health records]… The idea that they’re going to get comfortable with some neural network or black box technology to change their clinical workflow vs. Qventus which is clinical workflow to strip out cost… That’s lower hanging fruit.”


Netflix today announced that it will release a second season of Lost in Space, the big-budget sci-fi program that debuted in April.

More Danger, Will Robinson. Lost in Space Season 2 is coming. pic.twitter.com/SBEbJaKUIi

— Lost In Space (@lostinspacetv) May 14, 2018

The series is a revamp of the original show from the 1960s. Season One, which included 10 episodes, follows the Robinson family on their journey from Earth to Alpha Centauri. Along the way, they stumble across extraterrestrial life and a wide array of life-or-death situations.

Many of the elements from the original show have been reimagined, not least the role of Dr. Smith, which goes to Parker Posey, who plays the delightfully wicked villain.

We reviewed the show on the Original Content podcast in this episode, and struggled to find any meaningful flaws.


A new tool by researchers at the School of Science at IUPUI and Erasmus MC University Medical Center Rotterdam in the Netherlands can predict your hair, skin, and eye color from your DNA data. The system, which is essentially a web app that can accept DNA sequences, compares known color phenotypes to known data and tells you the probabilities of each color.

The app, called HIrisPlex-S, can tell colors from even small amounts of DNA like that left at a crime scene.

“We have previously provided law enforcement and anthropologists with DNA tools for eye color and for combined eye and hair color, but skin color has been more difficult,” said forensic geneticist Susan Walsh from IUPUI. “Importantly, we are directly predicting actual skin color divided into five subtypes — very pale, pale, intermediate, dark and dark to black – using DNA markers from the genes that determine an individual’s skin coloration. This is not the same as identifying genetic ancestry. You might say it’s more similar to specifying a paint color in a hardware store rather than denoting race or ethnicity. If anyone asks an eyewitness what they saw, the majority of time they mention hair color and skin color. What we are doing is using genetics to take an objective look at what they saw.”

You can actually try the web app here but be warned: it’s not exactly the most user-friendly app on the web. It requires you to know specific alleles for your test subject or upload a set of alleles in a csv file. It is, however, free and looks like it could be wildly useful in law enforcement and figuring out what your hair color was before you dyed it purple.

“With our new HIrisPlex-S system, for the first time, forensic geneticists and genetic anthropologists are able to simultaneously generate eye, hair and skin color information from a DNA sample, including DNA of the low quality and quantity often found in forensic casework and anthropological studies,” said Manfred Kayser of Erasmus MC.


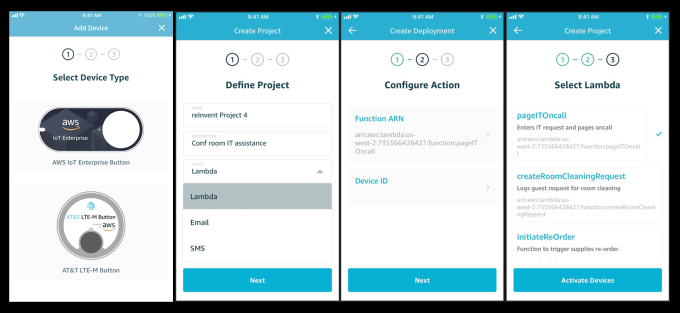

When Amazon introduced AWS Lambda in 2014, the notion of serverless computing was relatively unknown. It enables developers to deliver software without having to manage a server to do it. Instead, Amazon manages it all and the underlying infrastructure only comes into play when an event triggers a requirement. Today, the company released an app in the iOS App Store called AWS IoT 1-Click to bring that notion a step further.

The 1-click part of the name may be a bit optimistic, but the app is designed to give developers even quicker access to Lambda event triggers. These are designed specifically for simple single-purpose devices like a badge reader or a button. When you press the button, you could be connected to customer service or maintenance or whatever makes sense for the given scenario.

One particularly good example from Amazon is the Dash Button. These are simple buttons that users push to reorder goods like laundry detergent or toilet paper. Pushing the button connects the device to the internet via the home or business’s WiFi and sends a signal to the vendor to order the product in the pre-configured amount. AWS IoT 1-Click extends this capability to any developer, so long as it is on a supported device.

To use the new feature, you need to enter your existing account information. You configure your WiFi and you can choose from a pre-configured list of devices and Lambda functions for the given device. Supported devices in this early release include the AWS IoT Enterprise Button, which is a commercialized version of the Dash Button, and the AT&T LTE-M Button.

Once you select a device, you define the project to trigger a Lambda function, or send an SMS or email, as you prefer. Choose Lambda for an event trigger, then touch Next to move to the configuration screen where you configure the trigger action. For instance, if pushing the button triggers a call to IT from the conference room, the trigger would send a page to IT that there was a call for help in the given conference room.

Finally, choose the appropriate Lambda function, which should work correctly based on your configuration information.
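For the conference-room example, the Lambda function behind the button might look something like the sketch below. It is a minimal illustration, not Amazon’s code: the event layout (`deviceEvent`, `buttonClicked`, `placementInfo`), the `room` attribute and the `build_page` helper are assumptions made for this example, and the actual paging step is left as a comment.

```python
# Hypothetical handler for a button press. The event layout used here is an
# assumption for illustration; the article does not specify it.

def build_page(event):
    """Turn a button-click event into a short page for the IT team."""
    click = event.get("deviceEvent", {}).get("buttonClicked", {})
    placement = event.get("placementInfo", {})
    # "room" is a made-up attribute a project owner might set per button.
    room = placement.get("attributes", {}).get("room", "unknown room")
    click_type = click.get("clickType", "SINGLE")
    # Treat a long press as urgent; any other click type is a normal request.
    urgency = "URGENT" if click_type == "LONG" else "normal"
    return f"[{urgency}] Help requested in {room}"


def lambda_handler(event, context):
    """Entry point that Lambda invokes; `context` is unused in this sketch."""
    message = build_page(event)
    # A real function would hand `message` to a paging, SMS or email service here.
    print(message)
    return {"status": "sent", "message": message}
```

Keeping the message formatting in a plain function makes it easy to test locally with a hand-written event before wiring it to a physical button.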

All of this obviously requires more than one click and probably involves some testing and reconfiguring to make sure you’ve entered everything correctly, but an app for setting up simple Lambda functions could help people with non-programming backgrounds configure buttons with simple functions, given some training on the configuration process.

It’s worth noting that the service is still in Preview, so you can download the app today, but you have to apply to participate at this time.


“Last year was pretty hard, I’m not gonna lie,” says Peter Deng, Uber’s head of rider experience. But as part of new CEO Dara Khosrowshahi’s push to rebrand Uber around safety, Deng says, “we’ve seen the company shift to more listening.”

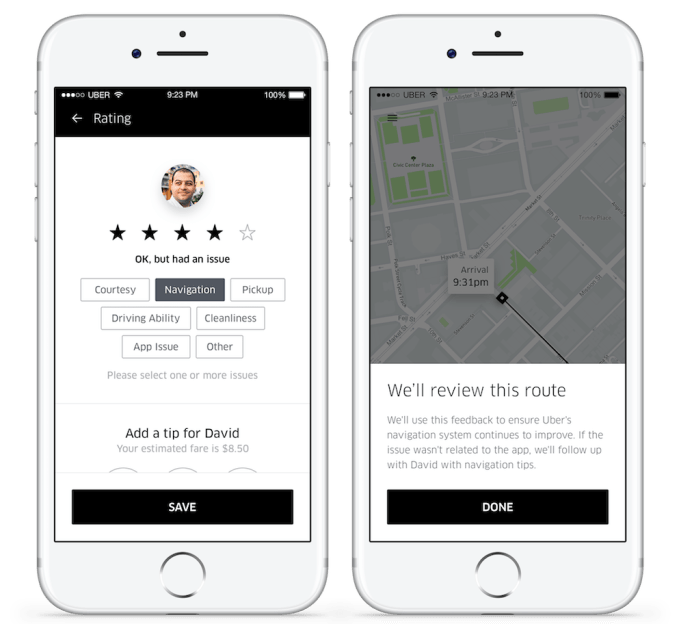

That focus on hearing users’ concerns prompted today’s change. Have a bad Uber ride when you’re busy and you might neglect to rate the driver or accidentally rush through giving them 5 stars. Forcing users to wait until a ride ends to provide feedback deprives them of a sense of control, while decreasing the number of accurate data points Uber has to optimize its service.

I had just this experience last month, leading me to tweet that Uber should let us rate trips mid-ride:

Uber & Lyft could let us rate drivers mid-ride, but only apply the ratings 5 minutes after a ride ends in case something goes better/worse before the end of the trip.

— Josh Constine (@JoshConstine) April 25, 2018

Uber apparently felt similarly, so it’s making an update. Starting today, Uber users can rate their trip mid-ride, providing a star rating with categorized and written feedback, plus a compliment or tip at any time instead of having to wait for the trip to end. “Every day 15 million people take a ride on Uber. If you can capture incrementally more and better feedback . . . we’re going to use that feedback to make the service better,” says Deng. Lyft still won’t let you rate until a ride is over.

Specifically, the data will be used to “recognize top-quality drivers . . . through a new program launching in June,” Uber tells me. “We’re going to be celebrating the drivers that provide really awesome service,” Deng says, though he declined to say whether that celebration will include financial rewards, access to extra driver perks or just a pat on the back.

But Uber will also now use the feedback options that appear when you give a less-than-perfect rating to tune the technology on its back end. So that way, if you say that the pickup was the issue, it might be classified as a “PLE – pickup location error,” and that data gets routed to the team that improves exactly where drivers are told to scoop you up. To ensure there’s no tension between you and the driver, Uber won’t share your feedback with them until the ride ends, and then only anonymously.
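The routing described above can be pictured as a simple lookup. In this sketch only the “PLE” code comes from Uber’s description; the `Feedback` type, the team names and the fallback bucket are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Only the "PLE" code is taken from the article; the team name and the
# "OTHER" fallback are made up for illustration.
ISSUE_ROUTES = {"pickup": ("PLE", "pickup-location team")}


@dataclass
class Feedback:
    stars: int   # 1 to 5
    issue: str   # rider-selected category, e.g. "pickup"


def route(feedback: Feedback) -> Optional[Tuple[str, str]]:
    """Return (issue code, owning team) for a less-than-perfect rating."""
    if feedback.stars >= 5:
        # Perfect ratings carry no issue category to route.
        return None
    return ISSUE_ROUTES.get(feedback.issue, ("OTHER", "general review"))


if __name__ == "__main__":
    print(route(Feedback(stars=3, issue="pickup")))
```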

I asked if reminding users to buckle their seat belts would be in Uber’s new Safety Center and Uber tells me it’s now planning to add info about buckling up. It’s been a personal quest of mine to dispel the myth that professionally driven vehicles are invulnerable to accidents. That idea, propagated by heavy-duty Ford Crown Victoria yellow cabs piloted by life-long drivers in cities they know, doesn’t hold up, given Ubers are often lightweight hybrids operating in places less familiar to the driver.

The launch follows the unveiling of Uber’s new in-app Safety Center last month that gives users access to insurance info, riding tips and an emergency 911 button. After a year of culture and legal issues, Uber needs to win back users who deleted it or who check an alternative first when they need transportation.

Enhanced safety and feedback could earn their respect. As competition for ride sharing heats up around the world, all the apps will be seeking ways to differentiate. They’re already battling for faster pick-ups and better routing algorithms. But helping riders feel like their complaints are heard and addressed could start to work some dents out of Uber’s public image.


Lenovo has teased a new arrival that might top Apple’s iPhone X in a bid to deliver a true all-screen smartphone.

Apple’s iPhone X comes very close but for a tiny bezel and its distinctive notch, but Lenovo’s Z5 seems like it might go a step further, according to a teaser sketch (above) shared by Lenovo VP Chang Cheng on Weibo that was first noted by CNET.

The device is due in June and Cheng claimed it is the result of “four technological breakthroughs” and “18 patented technologies,” but he didn’t provide further details.

The executive previously shared a slice of the design — see right — on Weibo, with a claim that it boasts a 95 percent screen-to-body ratio.

Indeed, the image appears to show a device without a top screen notch à la the iPhone X. Where Lenovo will put the front-facing camera, mic, sensors and other components isn’t clear right now.

A number of Android phone-makers have copied Apple’s design fairly shamelessly. That’s ironic given that Apple was widely derided when it first unveiled the phone.

Nonetheless, the device has sold well and that’s captured the attention of Huawei, Andy Rubin’s Essential, Asus and others who have embraced the notch. The design is so common now that Google even moved the clock in Android P to make space for the notch.

Time will tell what Lenovo adds to the conversation. The company is in dire need of a hit phone — it trails the likes of Xiaomi, Oppo, Vivo and Huawei on home soil in China — and the hype on the Z5 is certainly enough to raise hope.


There isn’t a software company out there worth its salt that doesn’t have some kind of artificial intelligence initiative in progress right now. These organizations understand that AI is going to be a game-changer, even if they might not have a full understanding of how that’s going to work just yet.

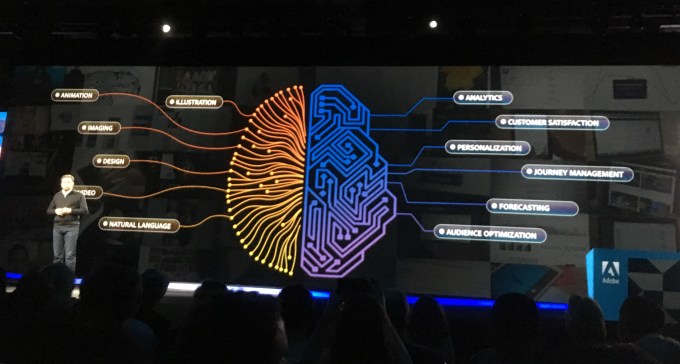

In March at the Adobe Summit, I sat down with Adobe executive vice president and CTO Abhay Parasnis, and talked about a range of subjects with him including the company’s goal to build a cloud platform for the next decade — and how AI is a big part of that.

Parasnis told me that he has a broad set of responsibilities starting with the typical CTO role of setting the tone for the company’s technology strategy, but it doesn’t stop there by any means. He also is in charge of operational execution for the core cloud platform and all the engineering building out the platform — including AI and Sensei. That includes managing a multi-thousand person engineering team. Finally, he’s in charge of all the digital infrastructure and the IT organization — just a bit on his plate.

The company’s transition from selling boxed software to a subscription-based cloud company began in 2013, long before Parasnis came on board. It has been a highly successful one, but Adobe knew it would take more than simply shedding boxed software to survive long-term. When Parasnis arrived, the next step was to rearchitect the base platform in a way that was flexible enough to last for at least a decade — yes, a decade.

“When we first started thinking about the next generation platform, we had to think about what do we want to build for. It’s a massive lift and we have to architect to last a decade,” he said. There’s a huge challenge because so much can change over time, especially right now when technology is shifting so rapidly.

That meant that they had to build in flexibility to allow for these kinds of changes over time, maybe even ones they can’t anticipate just yet. The company certainly sees immersive technology like AR and VR, as well as voice as something they need to start thinking about as a future bet — and their base platform had to be adaptable enough to support that.

But Adobe also needed to get its ducks in a row around AI. That’s why around 18 months ago, the company made another strategic decision to develop AI as a core part of the new platform. They saw a lot of companies looking at a more general AI for developers, but they had a different vision, one tightly focussed on Adobe’s core functionality. Parasnis sees this as the key part of the company’s cloud platform strategy. “AI will be the single most transformational force in technology,” he said, adding that Sensei is by far the thing he is spending the most time on.

Photo: Ron Miller

The company began thinking about the new cloud platform with the larger artificial intelligence goal in mind, building AI-fueled algorithms to handle core platform functionality. Once they refined them for use in-house, the next step was to open up these algorithms to third-party developers to build their own applications using Adobe’s AI tools.

It’s actually a classic software platform play, whether the service involves AI or not. Every cloud company from Box to Salesforce has been exposing their services for years, letting developers take advantage of their expertise so they can concentrate on their core knowledge. They don’t have to worry about building something like storage or security from scratch because they can grab those features from a platform that has built-in expertise and provides a way to easily incorporate it into applications.

The difference here is that it involves Adobe’s core functions, so it may be intelligent auto cropping and smart tagging in Adobe Experience Manager or AI-fueled visual stock search in Creative Cloud. These are features that are essential to the Adobe software experience, which the company is packaging as an API and delivering to developers to use in their own software.

Whether or not Sensei can be the technology that drives the Adobe cloud platform for the next 10 years, Parasnis and the company at large are very much committed to that vision. We should see more announcements from Adobe in the coming months and years as they build more AI-powered algorithms into the platform and expose them to developers for use in their own software.

Parasnis certainly recognizes this as an ongoing process. “We still have a lot of work to do, but we are off in an extremely good architectural direction, and AI will be a crucial part,” he said.
