TC

Auto Added by WPeMatico

Upskill has been working on a platform to support augmented and mixed reality for almost as long as most people have been aware of the concept. It began developing an agnostic AR/MR platform way back in 2010. Google Glass didn’t even appear until two years later. Today, the company announced the early release of Skylight for Microsoft HoloLens.

Upskill has been developing Skylight as an operating platform to work across all devices, regardless of the manufacturer, but company co-founder and CEO Brian Ballard sees something special with HoloLens. “What HoloLens does for certain types of experiences, is it actually opens up a lot more real estate to display information in a way that users can take advantage of,” Ballard explained.

He believes the Microsoft device fits well within the broader approach his company has been taking over the last several years to support the range of hardware on the market while developing solutions for hands-free and connected workforce concepts.

“This is about extending Skylight into the spatial computing environment making sure that the workflows, the collaboration, the connectivity is seamless across all of these different devices,” he told TechCrunch.

Microsoft itself just announced some new HoloLens use cases for its Dynamics 365 platform around remote assistance and 3D layout, use cases which play to the HoloLens strengths, but Ballard says his company is a partner with Microsoft, offering an enhanced, full-stack solution on top of what Microsoft is giving customers out of the box.

That is certainly something Terry Farrell, director of product marketing for mixed reality at Microsoft, recognizes and acknowledges. “As adoption of Microsoft HoloLens continues to rapidly increase in industrial settings, Skylight offers a software platform that is flexible and can scale to meet any number of applications well suited for mixed reality experiences,” he said in a statement.

That involves features like spatial content placement, which allows employees to work with digital content in HoloLens, while keeping their hands free to work in the real world. They enhance this with the ability to see multiple reference materials across multiple windows at the same time, something we are used to doing with a desktop computer, but not with a device on our faces like HoloLens. Finally, workers can use hand gestures and simple gazes to navigate in virtual space, directing applications or moving windows, as we are used to doing with keyboard or mouse.

Upskill also builds on the Windows 10 capabilities in HoloLens with its broad experience securely connecting to back-end systems to pull the information into the mixed reality setting wherever it lives in the enterprise.

The company is based outside of Washington, D.C. in Vienna, Virginia. It has raised over $45 million, according to Crunchbase. Ballard says the company currently has 70 employees. Customers using Skylight include Boeing, GE, Coca-Cola, Telstra and Accenture.

Powered by WPeMatico

It’s been less than six months since Adobe acquired commerce platform Magento for $1.68 billion and today, at Magento’s annual conference, the company announced the first set of integrations that bring the analytics and personalization features of Adobe’s Experience Cloud to Magento’s Commerce Cloud.

In many ways, the acquisition of Magento helps Adobe close the loop in its marketing story by giving its customers a full spectrum of services that go from analytics, marketing and customer acquisition all the way to closing the transaction. It’s no surprise then that the Experience Cloud and Commerce Cloud are growing closer to, in Adobe’s words, “make every experience shoppable.”

“From the time that this company started to today, our focus has been pretty much exactly the same,” Adobe’s SVP of Strategic Marketing Aseem Chandra told me. “This is, how do we deliver better experiences across any channel in which our customers are interacting with a brand? If you think about the way that customers interact today, every experience is valuable and important. […] It’s no longer just about the product, it’s more about the experience that we deliver around that product that really counts.”

So with these new integrations, Magento Commerce Cloud users will get access to an integration with Adobe Target, for example, the company’s machine learning-based tool for personalizing shopping experiences. Similarly, they’ll get easy access to predictive analytics from Adobe Analytics to analyze their customers’ data and predict future churn and purchasing behavior, among other things.

These kinds of AI/ML capabilities were something Magento had long been thinking about, Magento’s former CEO and new Adobe SVP of Commerce Mark Lavelle told me, but it took the acquisition by Adobe to really be able to push ahead with this. “Where the world’s going for Magento clients — and really for all of Adobe’s clients — is you can’t do this yourself,” he said. “You need to be associated with a platform that has not just technology and feature functionality, but actually has this living and breathing data environment that learns and delivers intelligence back into the product so that your job is easier. That’s what Amazon and Google and all of the big companies that we’re all increasingly competing against or cooperating with have. They have that type of scale.” He also noted that at least part of this match-up of Adobe and Magento is to give their clients that kind of scale, even if they are small- or medium-sized merchants.

The other new Adobe-powered feature that’s now available is an integration with the Adobe Experience Manager. That’s Adobe’s content management tool that itself integrates many of these AI technologies for building personalized mobile and web content and shopping experiences.

“The goal here is really in unifying that profile, where we have a lot of behavioral information about our consumers,” said Aseem. “And what Magento allows us to do is bring in the transactional information and put those together so we get a much richer view of who the consumers are and how we personalize that experience with the next interaction that they have with a Magento-based commerce site.”

It’s worth noting that Magento is also launching a number of other new features for its Commerce Cloud, including a new drag-and-drop editing tool for site content, support for building Progressive Web Applications, a streamlined payment tool with improved risk management capabilities, and a new integration with the Amazon Sales Channel so Magento stores can sync their inventory with Amazon’s platform. Magento is also announcing integrations with Google’s Merchant Center and Advertising Channels for Google Smart Shopping Campaigns.

Zocdoc founder Cyrus Massoumi and Indiegogo founder Slava Rubin have created a new $30 million fund called Humbition aimed at early stage, founder-led companies in New York.

“The fund is focused on connecting startups with investors and advisors experienced in building and growing successful businesses,” said Rubin.

“We are seeking to fill a void in NYC, where the vast majority of early stage investors have no significant experience building and scaling businesses,” he said. “The fund’s main areas of investment include marketplaces, consumer and health tech. But the primary criteria for investments is high quality founders. The fund is also seeking out mission-driven businesses because the companies that are socially responsible will be the most successful in the coming decades.”

The fund has brought on ClassPass founder Payal Kadakia, Warby Parker founder Neil Blumenthal, Charity: Water CEO and founder Scott Harrison, and Casper founder and CEO Philip Krim as advisors. They have already invested some of the $30 million raise in Burrow, a couch-on-demand service.

“New York City is home to a tremendous number of mission-driven startups that are simply not receiving the same level of support as their peers in the Bay Area. This void presents a unique opportunity for humbition to reach the incredible local talent who need the funding and guidance to build and grow their businesses in New York City,” said Rubin.

Fortnite Battle Royale was undoubtedly the big game of 2017, and 2018 is shaping up to be very similar. And with such popularity inevitably comes a swath of critics.

Take, for example, YouTuber Max Box. Using Fortnite’s replay mode, Max Box created a YouTube video that shows what Fortnite would look like in first-person mode.

The video is slightly buggy, but it’s about as close as we may ever get to seeing what Fortnite would look like in first person.

As it stands now, Fortnite uses third-person view, showing the player a view of themselves and the rest of the world from the perspective of their character’s right shoulder. Because of these mechanics, players are able to peek over cover or around walls without exposing themselves to incoming fire.

Because third-person view allows gamers to see their character in full, it also makes Epic’s main Fortnite revenue generator, premium skins and emotes, all the more valuable.

For those reasons, it seems unlikely that Epic would introduce a first-person mode.

That said, Epic will face new competition in the Battle Royale space with the introduction of CoD: Black Ops 4 Blackout mode on October 12. The game jumps in the ring with Fortnite, PUBG and H1Z1 as a first-person Battle Royale shooter.

London ‘proptech’ startup Goodlord, which offers cloud-based software to help estate agents, landlords and tenants manage the rental process, has raised £7 million in Series B funding. The round is led by Finch Capital, with participation from existing investor Rocket Internet/GFC, and is roughly equal in size to Goodlord’s Series A in 2017. However, it would be fair to say a lot has happened since then.

In January, we reported that Goodlord had let go of nearly 40 employees, and that co-founder and CEO Richard White was leaving the company (we also speculated that the company’s CTO had departed, too, which proved to be correct). In signs of a potential turnaround, Goodlord then announced a new CEO later that month: serial entrepreneur and investor William Reeve (pictured), a veteran of the London tech scene, would now head up the property technology startup.

As I wrote at the time, Reeve’s appointment could be viewed as somewhat of a coup for Goodlord and showed how seriously its backers — which, along with Rocket Internet (and now Finch), also includes LocalGlobe and Ribbit Capital — were treating their investment and the turn-around/refocus of the company. With today’s Series B and news that Reeve has appointed a new CTO, Donovan Frew, that effort seems to be paying off.

Goodlord was founded in 2014. Unlike other startups in the rental market space that essentially want to destroy traditional brick ‘n mortar letting agents with an online equivalent, its Software-as-a-Service is designed to support all stakeholders, including traditional high-street letting agents, as well as landlords and, of course, tenants.

The Goodlord SaaS enables letting agents to “digitize” the moving-in process, including utilizing e-signatures and collecting rental payments online. In addition, the company sells landlord insurance, and has been working on other related products, such as rental guarantees, and “tenant passports.”

If Goodlord can reach enough scale, it wants to let tenants easily take their rental transaction history and landlord references with them when moving from one rental property to another as proof that they are a trustworthy tenant.

Meanwhile, the company says new funding will be used to build new products, grow its customer base, and invest in the further development of its proprietary technology to continue to make “renting simple and more transparent for letting agents, tenants and landlords”.

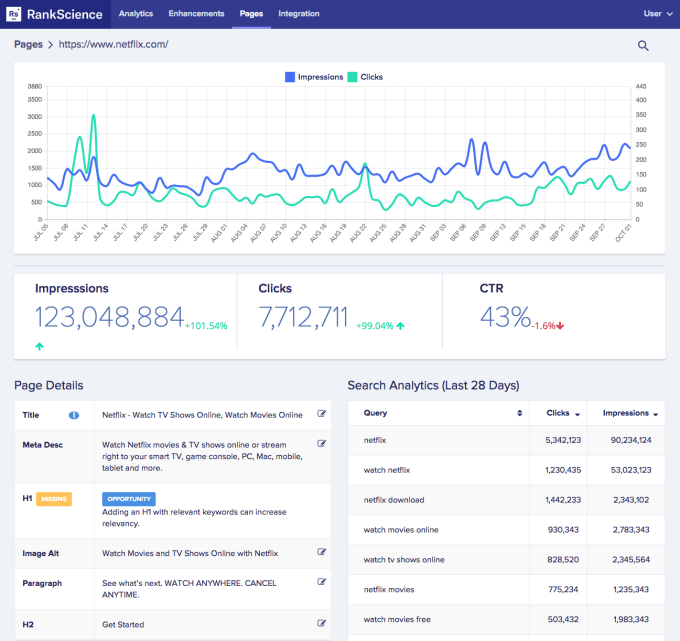

A couple of years ago YC-backed RankScience, which offers AI-enhanced SEO split-testing, put a few SEO experts’ noses out of joint when the fledgling startup brashly talked about replacing human expertise with automation.

Two years on its pitch has mellowed, with the team saying their self-service platform is “augmenting human SEO ability rather than replacing them”.

The startup has also — finally — closed a seed round, announcing $1.8M led by Initialized Capital, along with Adam D’Angelo, Michael Seibel, BoxGroup, Liquid2 Ventures, FundersClub, and Jenny 8 Lee participating.

The new roster of investors join a list of prior backers that includes Y Combinator, 500 Startups, Christina Cacioppo, and Jack Groetzinger.

So what took them so long? Founder Ryan Bednar tells TechCrunch they wanted to take their time with the seed, rather than raise more money than they needed — a position that was possible thanks to already being profitable at YC Demo Day.

“I admit that this is unusual,” he says of the slow seed, though he also says they did raise a “small amount” after demo day, before filling out the rest this month.

“I saw many YC batchmates raising massive rounds pre product-market-fit, which can end up being a mistake,” he adds. “We probably could have raised a few million at Demo Day but ultimately didn’t feel we were ready for it. I didn’t know what I would spend the money on, and we were growing without it, so we chose not to. I wanted to raise capital when I felt we were ready to use it for growth, and now’s that time.”

Bednar also says he is “selective” when it comes to investors — and “specifically” wanted to work with Initialized, saying he’s “known Garry and Alexis personally for years, and trust that they would support us in building a long-term scalable business”.

Commenting on the funding to TechCrunch, Initialized Capital’s Alexis Ohanian tells us: “Even though so many businesses depend on traffic from search, it’s a challenge for them to be data-driven about SEO. RankScience makes it easy to test changes to your website that can lift search traffic. They also automate a growing number of technical SEO tasks, which otherwise would take engineers away from building product and infrastructure, which is really exciting.”

RankScience plans to use the fresh funding to hire more AI and machine learning engineers, with headcount growth targeted at its SF office.

While the founders have stepped back from pronouncing ‘the death of the SEO expert’, they are still touting the power of automation AI for SEO — noting how, after crawling a customer’s site/s, the software automatically proposes “SEO enhancements and experiments” to customers — for “one-click [human] approval”.

It also includes what Bednar bills as a “self-driving car mode” — where the tech will deploy the touted “enhancements and experiments” without customer approval. But he concedes it’s not for all RankScience users.

“For about half of our customers, we’re their only SEO vendor so we automate SEO services 100% for them, and for the other half, our software augments human SEO ability, either from in-house marketers or agencies,” he says, explaining how the team has evolved their thinking on automation vs human agency and expertise.

“When we launched we didn’t think hard enough about what sorts of controls SEO managers at larger websites would want, and we tried to automate everything without giving marketers enough control. This was a mistake and we’ve worked hard on correcting it.

“This should have been obvious but it turns out that SEO managers are highly selective about what sorts of HTML changes our software might make to their webpages. So we’ve spent the past year building tools to give SEO marketers complete control over everything our software does, and also advanced editors and tools so they can create their own SEO enhancements and run SEO split tests through the platform.”

For those who make use of RankScience’s ‘Self-Driving Car Mode’ the software is replacing SEO staff “completely”, but he adds: “This works especially well for startups and medium size businesses. But SEO is such a multifaceted problem, we want to give larger companies with marketing teams complete control over our platform, and so we work with both types of customers.”

As well as (finally) closing out its seed round now, RankScience is also launching a new self-service platform for startups and SMEs — touting greater controls.

On the customer front, Bednar says they have “hundreds” of sites on the platform now — and are serving “hundreds of millions of page views per month”. Cumulatively he says they’ve deployed “millions” of SEO split tests at this point.

“Our customers run the gamut from startups just getting started with SEO to publicly-traded companies,” he continues. “Our best industries are SaaS, ecommerce, marketplaces, healthcare, publishing, and location-based sites.

“We’ve recently been working with more consumer goods brands, and we’ve also launched a partnership program so that we can work with SEO and Digital Marketing Agencies and independent consultants.”

He says the vast majority of RankScience users are based in the US at this stage but adds that Europe is a “growing market”.

In terms of competition, Bednar name-checks the likes of Moz, Conductor (acquired this year by WeWork), BloomReach and BrightEdge — so it is swimming in a pool with some very big fish.

“Most of these products are more akin to advanced SEO analytics suites, and we differ in that RankScience is 100% focused on data-driven SEO automation,” he says, fleshing out the differences and RankScience’s edge, as he sees it. “Our software doesn’t just tell you what changes to make to your site to increase search traffic, it actually makes the changes for you. (Now with more controls!)”

WeWork has partnered with Lemonade to provide renters insurance to WeLive members.

WeLive is the residential offering from WeWork, offering members a fully-furnished apartment, complete with amenities like housekeeping, mailroom, and on-site laundry, on a flexible rental schedule. In other words, bicoastal workers or generally nomadic individuals can rent a short-term living space without worrying about all the extras.

As part of that package, WeLive is now referring new and existing WeLive members to Lemonade for renters insurance.

WeLive currently has two locations — one in New York and one in D.C. — collectively representing more than 400 units. WeWork says that both locations are nearly at capacity. The company has plans to open a third location in Seattle, Washington by Spring 2020.

Lemonade, meanwhile, is an up-and-coming insurance startup that is rethinking the centuries-old industry. The company’s first big innovation was the digitization of getting insurance. The company uses a chatbot to lead prospective customers through the process in under a minute.

The second piece of Lemonade’s strategy is rooted in the business model. Unlike incumbent insurance providers, Lemonade takes its profit up-front, raking away a percentage of customers’ monthly payments. The rest, however, is set aside to fulfill claims. Whatever goes unclaimed at the end of the year is donated to the charity of each customer’s choice.

To date, Lemonade has raised a total of $180 million. WeWork, on the other hand, has raised just over $9 billion, with a reported valuation as high as $35 billion.

Of course, part of the reason for that lofty valuation is the fact that WeWork is a real estate behemoth, with Re/Code reporting that the company is Manhattan’s second biggest private office tenant. But beyond sheer square footage, WeWork has spent the past few years filling its arsenal with various service providers for its services store.

With 175,000 members (as of end of 2017, so that number is likely much higher now), WeWork has a considerable userbase with which it can negotiate deals with service providers, from enterprise software makers to… well, insurance providers.

Lemonade is likely just the beginning of WeWork’s stretch into developing a suite of services and partnerships for its residential members.

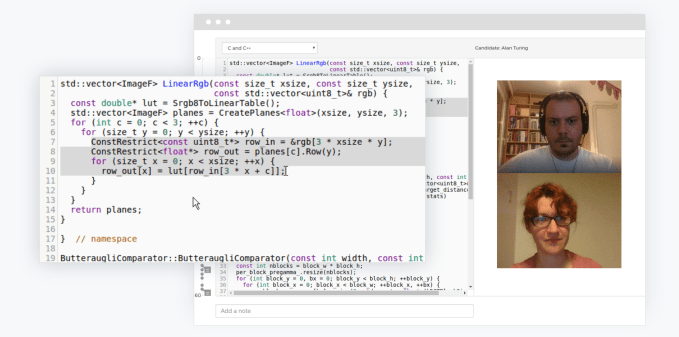

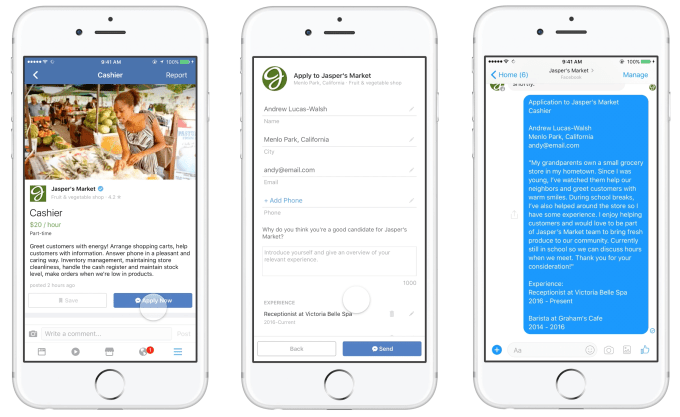

Facebook just snatched some talent to fuel its invasion of LinkedIn’s turf. A source tells TechCrunch that members of coding interview practice startup Refdash, including at least some of its executives, have been hired by Facebook. The social network confirmed to TechCrunch that members of Refdash’s leadership team are joining to work on Facebook’s Jobs feature that lets businesses promote employment openings that users can instantly apply for.

Facebook’s big opportunity here is that it’s a place people already browse naturally, so they can be exposed to Job listings even when they’re not actively looking for a company or career change. Since launching the feature in early 2017, Facebook has focused on blue-collar jobs like service and retail industry jobs that constantly need filling. But the Refdash team could give it more experience in recruiting for technical roles, connecting high-skilled workers like computer programmers to positions that need filling. These hirers might be willing to pay high prices to advertise their job listings on Facebook, siphoning revenue away from LinkedIn.

Facebook confirms that this is not an acquisition or technically a full acquihire, as there’s no overarching deal to buy assets or talent as a package. It’s so far unclear what exactly will happen to Refdash now that its team members are starting at Facebook this week, though it’s possible it will shut down now that its leaders have left for the tech giant’s cushy campuses and premium perks. Refdash’s website now says that “We’ve temporarily suspended interviews in order to make product changes that we believe will make your job search experience significantly better.”

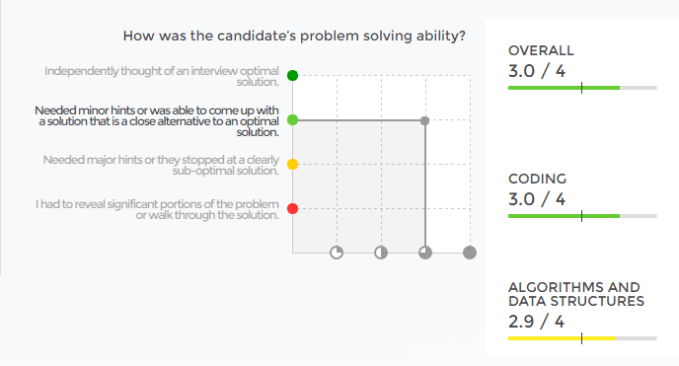

Founded in 2016 in Mountain View with an undisclosed amount of funding from Founder Friendly Labs, Refdash gave programmers direct qualitative and scored feedback on their coding interviews. Users would do a mock interview, get graded, and then have their performance anonymously shared with potential employers to match them with the right companies and positions for their skills. This saved engineers from having to endure grueling interrogations with tons of different hirers. Refdash claimed to place users at startups like Coinbase, Cruise, Lyft, and Mixpanel.

A source tells us that Refdash focused on understanding people’s deep professional expertise and sending them to the perfect employer without having to judge by superficial resumes that can introduce bias to the process. It also touted allowing hirers to browse candidates without knowing their biographical details, which could also cut down on discrimination and help ensure privacy in the job hunting process (especially if people are still working elsewhere and are trying to be discreet in their job hunt).

It’s easy to imagine Facebook building its own coding challenges and puzzles that programmers could take to then get paired with appropriate hirers through its Jobs product. Perhaps Facebook could even build a similar service to Refdash, though the one-on-one feedback sessions it’d conduct might not be scalable enough for Menlo Park’s liking. If Facebook can make it easier to not only apply for jobs but interview for them too, it could lure talent and advertisers away from LinkedIn to a product that’s already part of people’s daily lives.

The co-founders of Refdash have something of a track record in building companies that get acquihired to help add new features to existing services. Nicola Otasevic and Andrew Kearney were respectively the founder and tech lead for Room 77, which was picked up by Google in 2014 to help rebuild its travel search vertical. At the time it was described as a licensing deal although Refdash’s founders these days call it an acquisition.

Building tools to improve the basic process of hiring via remote testing could help Facebook get an edge on technical recruiting, but it’s not the only one building such features. LinkedIn’s stablemate Skype (like LinkedIn, owned by Microsoft) last year unveiled Interviews to let recruiters test developers and others applying for technical jobs with a real-time code editor. LinkedIn has not (yet?) incorporated it into its platform.

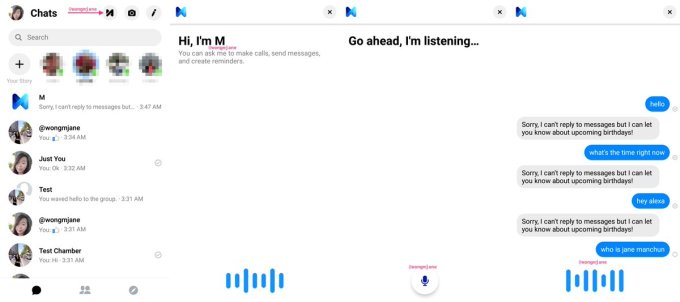

Facebook Messenger could soon let you use your voice to dictate and send messages, initiate voice calls and create reminders. Messenger for Android’s code reveals a new M assistant button atop the message thread screen that activates listening for voice commands for those functionalities. Voice control could make Messenger simpler to use hands-free or while driving, more accessible for the vision or dexterity-impaired and, perhaps one day, easier for international users whose native languages are hard to type.

Facebook Messenger was previously spotted testing speech transcription as part of the Aloha voice assistant believed to be part of Facebook’s upcoming Portal video chat screen device. But voice commands in the M assistant are new, and demonstrate an evolution in Facebook’s strategy since its former head of Messenger David Marcus told me voice “is not something we’re actively working on right now” in September 2016 onstage at TechCrunch Disrupt.

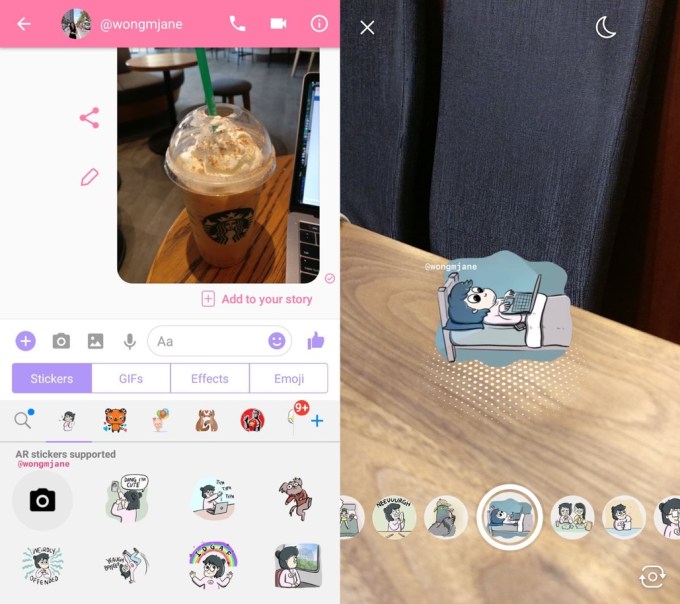

The prototype was discovered by all-star TechCrunch tipster Jane Manchun Wong, who’d previously discovered prototypes of Instagram Video Calling, Facebook’s screen time digital well-being dashboard and Lyft’s scooter rentals before they officially launched. When reached for comment, a Facebook Messenger spokesperson confirmed to TechCrunch that Facebook is internally testing the voice command feature. They told TechCrunch, “We often experiment with new experiences on Messenger with employees. We have nothing more to share at this time.”

Messenger is eager to differentiate itself from SMS, Snapchat, Android Messages and other texting platforms. The app has aggressively adopted visual communication features like Facebook Stories, augmented reality filters and more. Wong today spotted Messenger prototyping augmented reality camera effects being rolled into the GIFs, Stickers and Emoji menu in the message composer. Facebook confirms this is now in testing with a small percentage of Messenger users.

Facebook has found that users aren’t so keen on tons of bells and whistles like prominent camera access or games getting in the way of chat, so Facebook plans to bury those more in a forthcoming simplified redesign of Messenger. But voice controls add pure utility without obstructing Messenger’s core value proposition and could end up getting users to chat more if they’re eventually rolled out.

Outside the crop of construction cranes that now dot Vancouver’s bright, downtown greenways, in a suburban business park that reminds you more of dentists and tax preparers, is a small office building belonging to D-Wave. This office — squat, angular and sun-dappled one recent cool Autumn morning — is unique in that it contains an infinite collection of parallel universes.

Founded in 1999 by Geordie Rose, D-Wave worked in relative obscurity on esoteric problems associated with quantum computing. When Rose was a PhD student at the University of British Columbia, he turned in an assignment that outlined a quantum computing company. His entrepreneurship teacher at the time, Haig Farris, found the young physicist’s ideas compelling enough to give him $1,000 to buy a computer and a printer to type up a business plan.

The company consulted with academics until 2005, when Rose and his team decided to focus on building usable quantum computers. The result, the Orion, launched in 2007, and was used to classify drug molecules and play Sudoku. The business now sells computers for up to $10 million to clients like Google, Microsoft and Northrop Grumman.

“We’ve been focused on making quantum computing practical since day one. In 2010 we started offering remote cloud access to customers and today, we have 100 early applications running on our computers (70 percent of which were built in the cloud),” said CEO Vern Brownell. “Through this work, our customers have told us it takes more than just access to real quantum hardware to benefit from quantum computing. In order to build a true quantum ecosystem, millions of developers need the access and tools to get started with quantum.”

Now their computers are simulating weather patterns and tsunamis, optimizing hotel ad displays, solving complex network problems and, thanks to a new, open-source platform, could help you ride the quantum wave of computer programming.

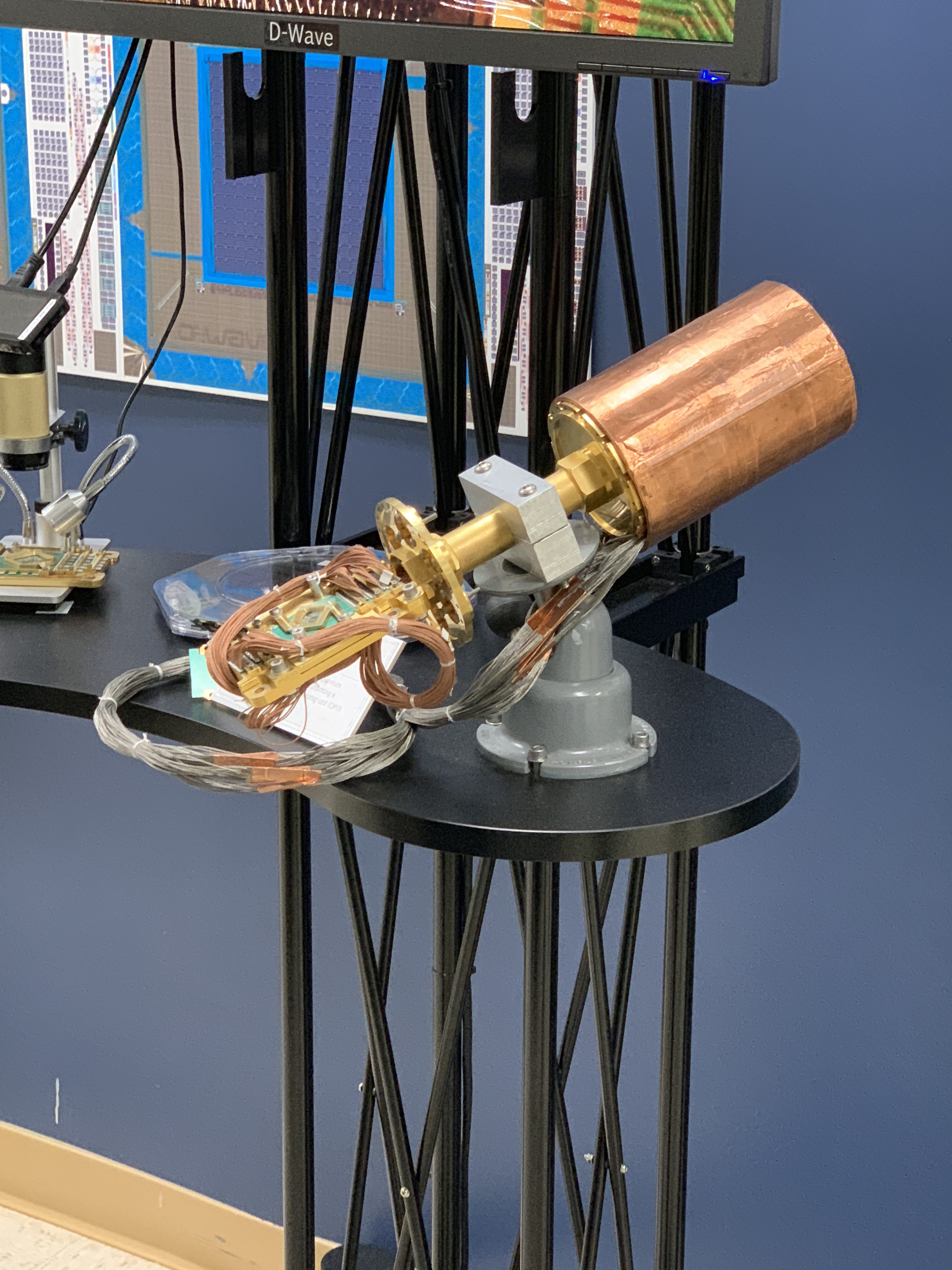

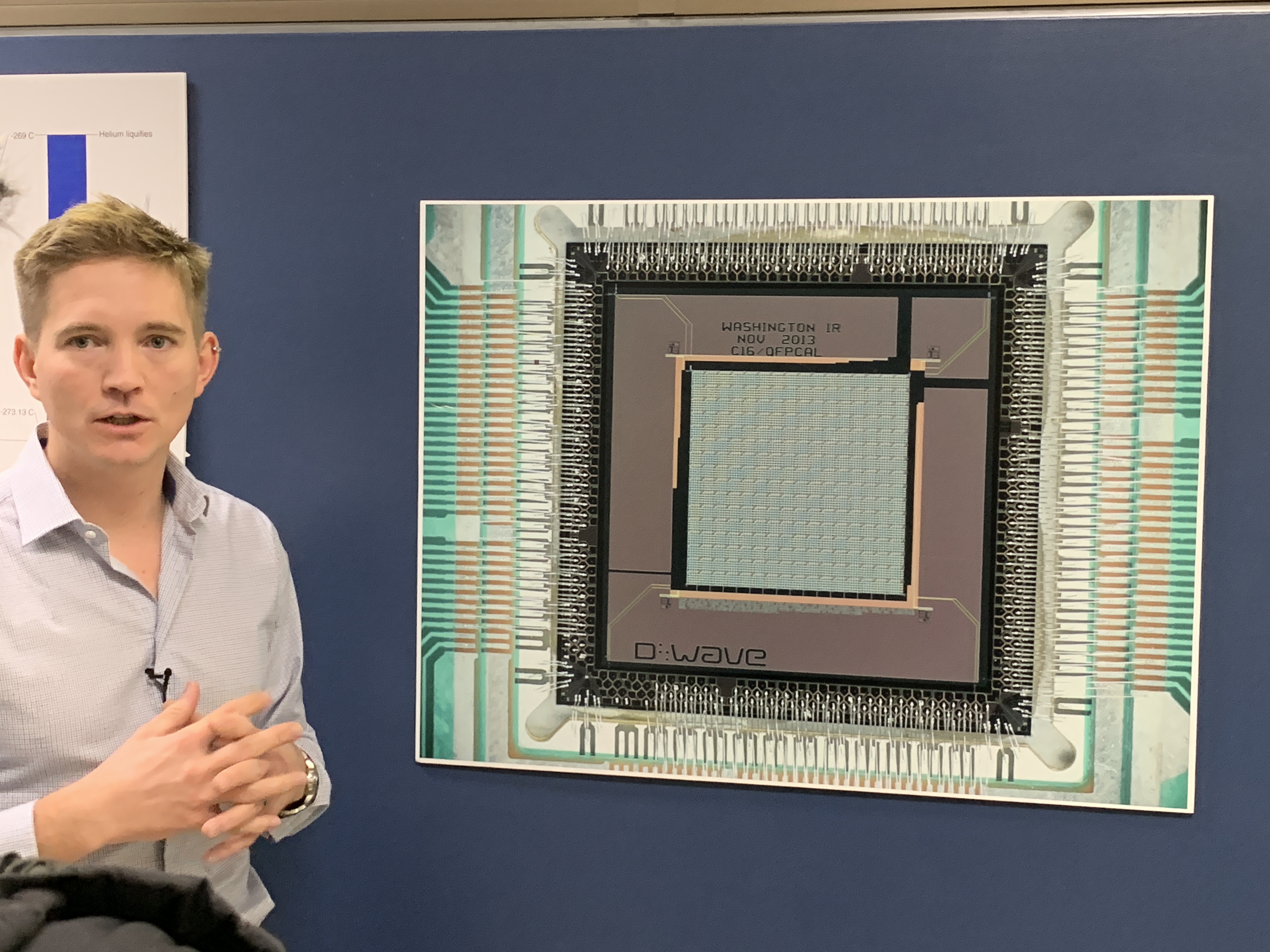

When I went to visit D-Wave they gave us unprecedented access to the inside of one of their quantum machines. The computers, which are about the size of a garden shed, have a control unit on the front that manages the temperature as well as a queuing system to translate and communicate the problems sent in by users.

Inside the machine is a tube that, when fully operational, contains a small chip super-cooled to 0.015 Kelvin, or -459.643 degrees Fahrenheit or -273.135 degrees Celsius. The entire system looks like something out of the Death Star — a cylinder of pure data that the heroes must access by walking through a little door in the side of a jet-black cube.
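For readers who want to check those temperature figures, here is a minimal sketch of the standard Kelvin conversions. The function names are illustrative and not part of any D-Wave tooling.

```python
def kelvin_to_celsius(kelvin):
    """Convert Kelvin to degrees Celsius."""
    return kelvin - 273.15


def kelvin_to_fahrenheit(kelvin):
    """Convert Kelvin to degrees Fahrenheit."""
    return kelvin * 9 / 5 - 459.67


chip_temperature_k = 0.015  # operating temperature quoted in the article

print(f"{kelvin_to_celsius(chip_temperature_k):.3f} C")     # -273.135 C
print(f"{kelvin_to_fahrenheit(chip_temperature_k):.3f} F")  # -459.643 F
```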

It’s quite thrilling to see this odd little chip inside its super-cooled home. As the computer revolution maintained its predilection toward room-temperature chips, these odd and unique machines are a connection to an alternate timeline where physics is wrestled into submission in order to do some truly remarkable things.

And now anyone — from kids to PhDs to everyone in-between — can try it.

Learning to program a quantum computer takes time. Because the processor doesn’t work like a classic universal computer, you have to train the chip to perform simple functions that your own cellphone can do in seconds. However, in some cases, researchers have found the chips can outperform classic computers by 3,600 times. This trade-off — the movement from the known to the unknown — is why D-Wave exposed their product to the world.

“We built Leap to give millions of developers access to quantum computing. We built the first quantum application environment so any software developer interested in quantum computing can start writing and running applications — you don’t need deep quantum knowledge to get started. If you know Python, you can build applications on Leap,” said Brownell.

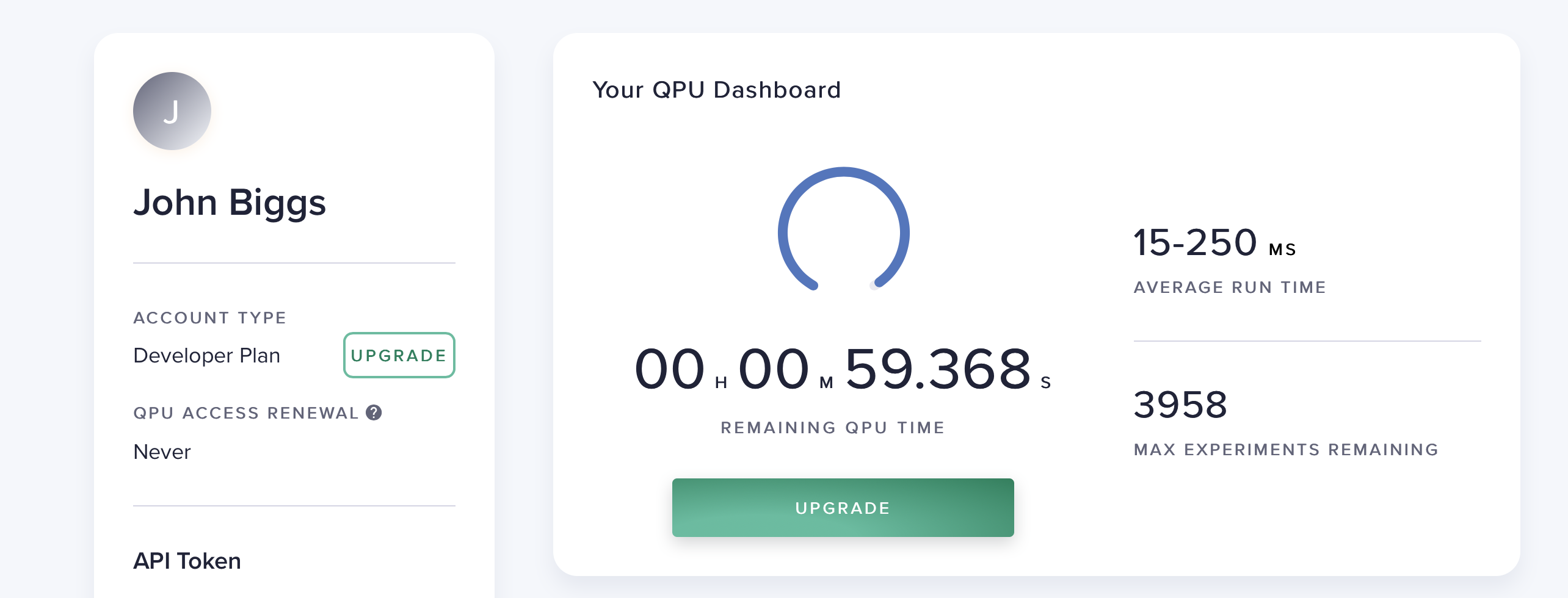

To get started on the road to quantum computing, D-Wave built the Leap platform. The Leap is an open-source toolkit for developers. When you sign up you receive one minute’s worth of quantum processing unit time which, given that most problems run in milliseconds, is more than enough to begin experimenting. A queue manager lines up your code and runs it in the order received and the answers are spit out almost instantly.

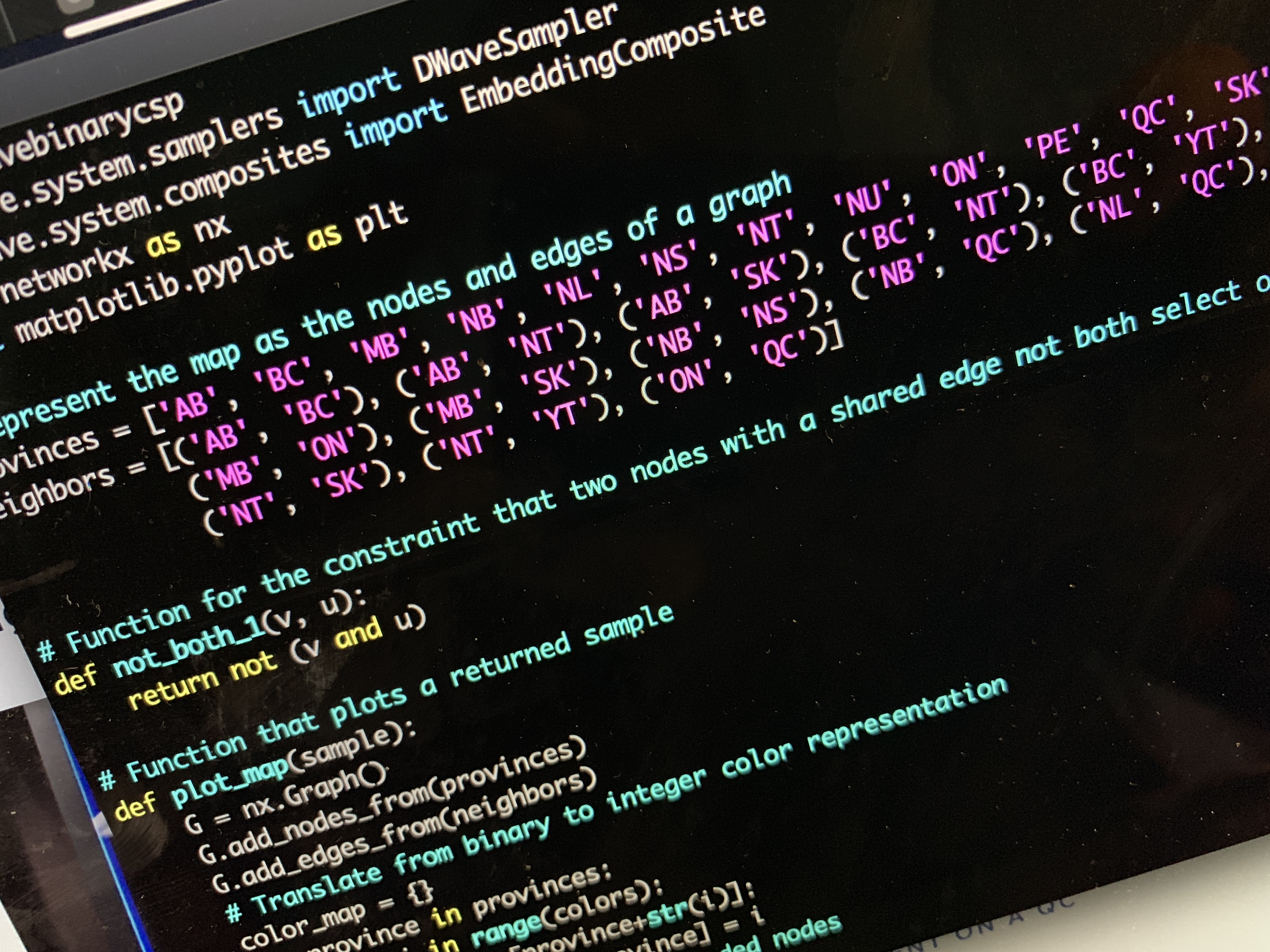

You can code on the QPU with Python or via Jupyter notebooks, and it allows you to connect to the QPU with an API token. After writing your code, you can send commands directly to the QPU and then output the results. The programs are currently pretty esoteric and require a basic knowledge of quantum programming but, it should be remembered, classic computer programming was once daunting to the average user.

I downloaded and ran most of the demonstrations without a hitch. These demonstrations — factoring programs, network generators and the like — essentially turned the concepts of classical programming into quantum questions. Instead of iterating through a list of factors, for example, the quantum computer creates a “parallel universe” of answers and then collapses each one until it finds the right answer. If this sounds odd it’s because it is. The researchers at D-Wave argue all the time about how to imagine a quantum computer’s various processes. One camp sees the physical implementation of a quantum computer to be simply a faster methodology for rendering answers. The other camp, itself aligned with Professor David Deutsch’s ideas presented in The Beginning of Infinity, sees the sheer number of possible permutations a quantum computer can traverse as evidence of parallel universes.
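One way to picture how a factoring problem becomes a “quantum question” is to recast it as a search for the lowest-energy assignment of bits. The sketch below is only an illustration under stated assumptions: the `energy` function (the squared gap between the target and a product) and the names `bits_to_int` and `factor_by_minimization` are hypothetical, the D-Wave demo builds its problem from a multiplication circuit rather than this formula, and the exhaustive loop is a classical stand-in for the sampling an annealer would do.

```python
from itertools import product


def bits_to_int(bits):
    """Interpret a tuple of bits (least significant first) as an integer."""
    return sum(bit << i for i, bit in enumerate(bits))


def energy(a_bits, b_bits, target):
    """Hypothetical energy: zero only when a * b equals the target."""
    return (target - bits_to_int(a_bits) * bits_to_int(b_bits)) ** 2


def factor_by_minimization(target, n_bits=3):
    """Return the lowest energy found and every (a, b) pair that reaches it.

    Every bit assignment is tried in turn here; an annealer would instead
    sample low-energy states directly.
    """
    best_energy = None
    best_pairs = []
    for bits in product((0, 1), repeat=2 * n_bits):
        a_bits, b_bits = bits[:n_bits], bits[n_bits:]
        e = energy(a_bits, b_bits, target)
        if best_energy is None or e < best_energy:
            best_energy, best_pairs = e, []
        if e == best_energy:
            best_pairs.append((bits_to_int(a_bits), bits_to_int(b_bits)))
    return best_energy, sorted(best_pairs)


if __name__ == "__main__":
    print(factor_by_minimization(21))
```

With two 3-bit factors, `factor_by_minimization(21)` returns `(0, [(3, 7), (7, 3)])`. A lowest energy above zero means no pair of 3-bit numbers multiplies to the target.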

What does the code look like? It’s hard to read without understanding the basics, a fact that D-Wave engineers accounted for by offering online documentation. For example, below is a short classical factoring routine, followed by most of the factoring code from one of D-Wave’s demo programs, which does a job that takes about five lines on a classical computer. However, when this function uses a quantum processor, the entire process takes milliseconds versus minutes or hours.

    # Python program to find the factors of a number
    def print_factors(x):
        print("The factors of", x, "are:")
        for i in range(1, x + 1):
            if x % i == 0:
                print(i)

    num = 320
    # num = int(input("Enter a number: "))
    print_factors(num)

    # Excerpt from the demo: assumes time, dimod, minorminer, dwavebinarycsp (as dbc)
    # and DWaveSampler are imported, and that qpu_ha, validate_input and log
    # are defined elsewhere in the demo.
    @qpu_ha
    def factor(P, use_saved_embedding=True):

        ####################################################################################################
        # Build the problem: a 3-bit multiplication circuit with the product bits fixed to P
        ####################################################################################################

        construction_start_time = time.time()

        validate_input(P, range(2 ** 6))

        # Constraint satisfaction problem for multiplying two 3-bit numbers
        csp = dbc.factories.multiplication_circuit(3)

        # Convert the constraints into a binary quadratic model (BQM)
        bqm = dbc.stitch(csp, min_classical_gap=.1)

        # Product variables, least significant bit first
        p_vars = ['p0', 'p1', 'p2', 'p3', 'p4', 'p5']

        # Write P as six binary digits and pin each product variable to its digit
        fixed_variables = dict(zip(reversed(p_vars), "{:06b}".format(P)))
        fixed_variables = {var: int(x) for (var, x) in fixed_variables.items()}

        for var, value in fixed_variables.items():
            bqm.fix_variable(var, value)

        log.debug('bqm construction time: %s', time.time() - construction_start_time)

        ####################################################################################################
        # Run the problem on the QPU
        ####################################################################################################

        sample_time = time.time()

        sampler = DWaveSampler(solver_features=dict(online=True, name='DW_2000Q.*'))
        _, target_edgelist, target_adjacency = sampler.structure

        if use_saved_embedding:
            # Load a pre-calculated embedding for this solver
            from factoring.embedding import embeddings
            embedding = embeddings[sampler.solver.id]
        else:
            # Search for an embedding of the problem graph onto the QPU graph
            embedding = minorminer.find_embedding(bqm.quadratic, target_edgelist)
            if bqm and not embedding:
                raise ValueError("no embedding found")

        # Map the problem onto the sampler's physical qubits
        bqm_embedded = dimod.embed_bqm(bqm, embedding, target_adjacency, 3.0)

        # Set only the options this sampler supports, then draw samples
        kwargs = {}
        if 'num_reads' in sampler.parameters:
            kwargs['num_reads'] = 50
        if 'answer_mode' in sampler.parameters:
            kwargs['answer_mode'] = 'histogram'
        response = sampler.sample(bqm_embedded, **kwargs)

        # Translate the samples back to the original problem's variables
        response = dimod.unembed_response(response, embedding, source_bqm=bqm)

        sampler.client.close()

        log.debug('embedding and sampling time: %s', time.time() - sample_time)
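The `fixed_variables` lines in the listing are the step that tells the circuit which number to factor, and they are easy to misread. The standalone sketch below reproduces just that step so it can be run without any D-Wave libraries. The helper name `fix_product_bits` and its range check are assumptions added for illustration; the demo performs its own validation with `validate_input`.

```python
def fix_product_bits(P, n_bits=6):
    """Map product variables p0..p5 to the bits of P (p0 is least significant).

    Hypothetical helper mirroring the dict(zip(reversed(p_vars), ...)) idiom.
    """
    if not 0 <= P < 2 ** n_bits:
        raise ValueError("P must fit in {} bits".format(n_bits))
    p_vars = ["p{}".format(i) for i in range(n_bits)]
    binary = f"{P:0{n_bits}b}"  # zero-padded, most significant digit first
    # reversed(p_vars) runs p5..p0, so it lines up with the digit order
    return {var: int(bit) for var, bit in zip(reversed(p_vars), binary)}


if __name__ == "__main__":
    print(fix_product_bits(21))
```

For `P = 21`, whose 6-bit form is `010101`, the result pins `p0`, `p2` and `p4` to 1 and the remaining variables to 0.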

“The industry is at an inflection point and we’ve moved beyond the theoretical, and into the practical era of quantum applications. It’s time to open this up to more smart, curious developers so they can build the first quantum killer app. Leap’s combination of immediate access to live quantum computers, along with tools, resources, and a community, will fuel that,” said Brownell. “For Leap’s future, we see millions of developers using this to share ideas, learn from each other and contribute open-source code. It’s that kind of collaborative developer community that we think will lead us to the first quantum killer app.”

The folks at D-Wave created a number of tutorials as well as a forum where users can learn and ask questions. The entire project is truly the first of its kind and promises unprecedented access to what amounts to the foreseeable future of computing. I’ve seen lots of technology over the years, and nothing quite replicated the strange frisson associated with plugging into a quantum computer. Like the teletype and green-screen terminals used by early hackers such as Bill Gates and Steve Wozniak, D-Wave’s Leap has opened up a strange new world. How we explore it is up to us.
