computing

Auto Added by WPeMatico

It’s only been a few weeks since Google brought the Assistant to Google Maps to help you reply to messages, play music and more. This feature first launched in English and will soon start rolling out to all Assistant phone languages. In addition, Google also today announced that the Assistant will come to Android Messages, the standard text messaging app on Google’s mobile operating system, in the coming months.

If you remember Allo, Google’s last failed messaging app, then a lot of this will sound familiar. For Allo, after all, Assistant support was one of the marquee features. The difference, though, is that for the time being, Google is mostly using the Assistant as an additional layer of smarts in Messages, while in Allo, you could have full conversations with a special Assistant bot.

In Messages, the Assistant will automatically pop up suggestion chips when you are having conversations with somebody about movies, restaurants and the weather. That’s a pretty limited feature set for now, though Google tells us that it plans to expand it over time.

What’s important here is that the suggestions are generated on your phone (and that may be why the machine learning model is limited, too, since it has to run locally). Google is clearly aware that people don’t want the company to get any information about their private text chats. Once you tap on one of the Assistant suggestions, though, Google obviously knows that you were talking about a specific topic, even though the content of the conversation itself is never sent to Google’s servers. The person you are chatting with will only see the additional information when you push it to them.

Powered by WPeMatico

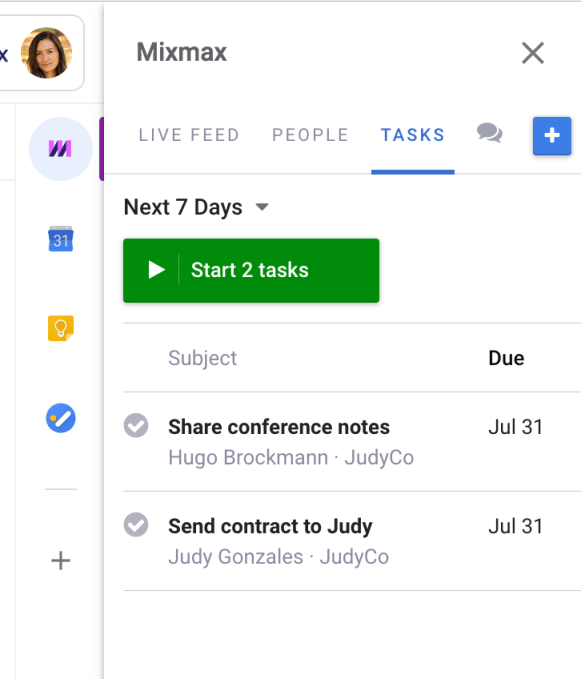

Mixmax today introduced version 2.0 of its Gmail-based tool and plugin for Chrome that promises to make your daily communications chores a bit easier to handle.

With version 2.0, Mixmax gets an updated editor that better integrates with the current Gmail interface and that gets out of the way of popular extensions like Grammarly. That’s table stakes, of course, but I’ve tested it for a bit and the new version does indeed do a better job of integrating itself into the current Gmail interface and feels a bit faster, too.

What’s more interesting is that the service now features a better integration with LinkedIn. It integrates with both LinkedIn Sales Navigator, LinkedIn’s tool for generating sales leads and contacting them, and LinkedIn’s messaging tools, so users can send InMail and connection requests and see info about a recipient’s LinkedIn profile, including the LinkedIn Icebreakers section, right from the Mixmax interface.

Together with its existing Salesforce integration, this should make the service even more interesting to sales people. And the Salesforce integration, too, is getting a bit of a new feature that can now automatically create a new contact in the CRM tool when a prospect’s email address — maybe from LinkedIn — isn’t in your database yet.

Also new in Mixmax 2.0 is something the company calls “Beast Mode.” Not my favorite name, I have to admit, but it’s an interesting task automation tool that focuses on helping customer-facing users prioritize and complete batches of tasks quickly and that extends the service’s current automation tools.

Finally, Mixmax now also features a Salesforce-linked dialer widget for making calls right from the Chrome extension.

“We’ve always been focused on helping business people communicate better, and everything we’re rolling out for Mixmax 2.0 only underscores that focus,” said Mixmax CEO and co-founder Olof Mathé. “Many of our users live in Gmail and our integration with LinkedIn’s Sales Navigator ensures users can conveniently make richer connections and seamlessly expand their networks as part of their email workflow.”

Whether you get these new features depends on how much you pay, though. Everybody, including free users, gets access to the refreshed interface. Beast Mode and the dialer are available with the Enterprise plan, the company’s highest-level plan, which doesn’t have a published price. The dialer is also available for an extra $20/user/month on the $49/user/month Growth plan. LinkedIn Sales Navigator support is available with the Growth and Enterprise plans.

Sadly, that means that if you are on the cheaper Starter and Small Business plans ($9/user/month and $24/user/month respectively), you won’t see any of these new features anytime soon.

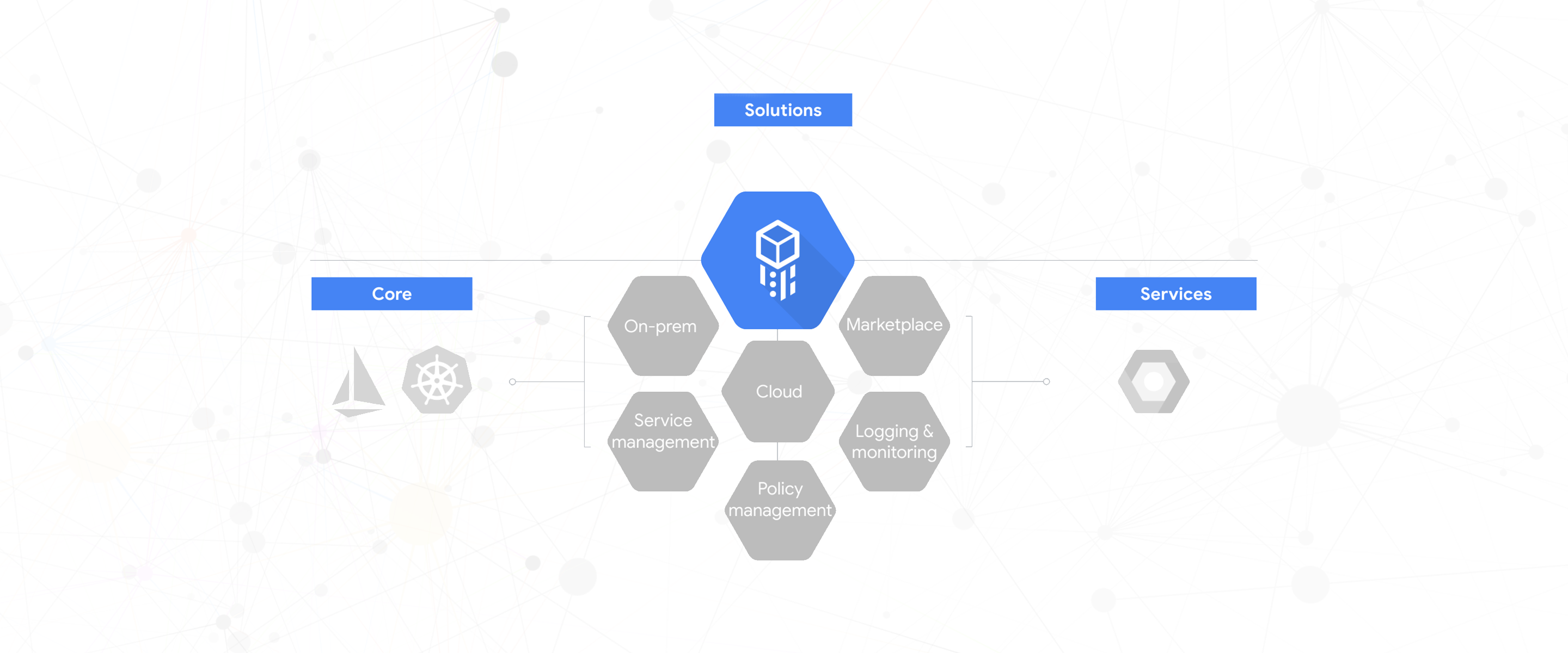

Last July, at its Cloud Next conference, Google announced the Cloud Services Platform, its first real foray into bringing its own cloud services into the enterprise data center as a managed service. Today, the Cloud Services Platform (CSP) is launching into beta.

It’s important to note that the CSP isn’t (at least for the time being) Google’s way of bringing all of its cloud-based developer services to the on-premises data center. In other words, this is a very different project from something like Microsoft’s Azure Stack. Instead, the focus is on the Google Kubernetes Engine, which allows enterprises to then run their applications in both their own data centers and on virtually any cloud platform that supports containers.

As Google Cloud engineering director Chen Goldberg told me, the idea here is to help enterprises innovate and modernize. “Clearly, everybody is very excited about cloud computing, on-demand compute and managed services, but customers have recognized that the move is not that easy,” she said and noted that the vast majority of enterprises are adopting a hybrid approach. And while containers are obviously still a very new technology, she feels good about this bet on the technology because most enterprises are already adopting containers and Kubernetes, and they are doing so at exactly the same time as they are adopting cloud and especially hybrid clouds.

It’s important to note that CSP is a managed platform. Google handles all of the heavy lifting like upgrades and security patches. And for enterprises that need an easy way to install some of the most popular applications, the platform also supports Kubernetes applications from the GCP Marketplace.

As for the tech itself, Goldberg stressed that this isn’t just about Kubernetes. The service also uses Istio, for example, the increasingly popular service mesh that makes it easier for enterprises to secure and control the flow of traffic and API calls between their applications.

With today’s release, Google is also launching its new CSP Config Management tool to help users create multi-cluster policies and set up and enforce access controls, resource quotas and more. CSP also integrates with Google’s Stackdriver Monitoring service and continuous delivery platforms.

“On-prem is not easy,” Goldberg said, and given that this is the first time the company is really supporting software in a data center that is not its own, that’s probably an understatement. But Google also decided that it didn’t want to force users into a specific set of hardware specifications like Azure Stack does, for example. Instead, CSP sits on top of VMware’s vSphere server virtualization platform, which most enterprises already use in their data centers anyway. That surely simplifies things, given that this is a very well-understood platform.

For the longest time, Arm was basically synonymous with chip designs for smartphones and very low-end devices. But more recently, the company launched solutions for laptops, cars, high-powered IoT devices and even servers. Today, ahead of MWC 2019, the company is officially launching two new products for cloud and edge applications, the Neoverse N1 and E1. Arm unveiled the Neoverse brand a few months ago, but it’s only now that it is taking concrete form with the launch of these new products.

“We’ve always been anticipating that this market is going to shift as we move more towards this world of lots of really smart devices out at the endpoint, moving beyond even just what smartphones are capable of doing,” Drew Henry, Arm’s SVP and GM for Infrastructure, told me in an interview ahead of today’s announcement. “And when you start anticipating that, you realize that those devices out of those endpoints are going to start creating an awful lot of data and need an awful lot of compute to support that.”

To address these two problems, Arm decided to launch two products: one that focuses on compute speed and one that is all about throughput, especially in the context of 5G.

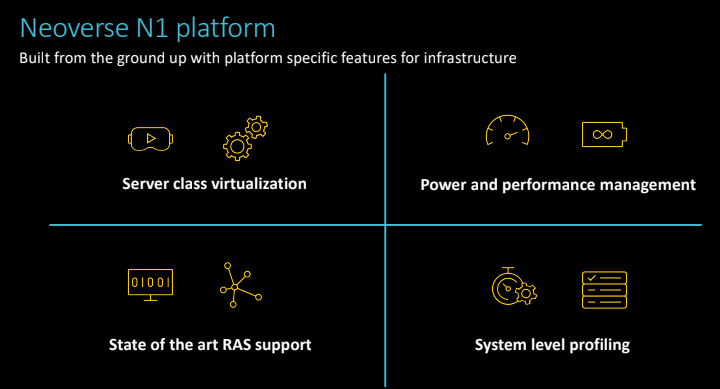

The Neoverse N1 platform is meant for infrastructure-class solutions that focus on raw compute speed. The chips should perform significantly better than previous Arm CPU generations meant for the data center and the company says that it saw speedups of 2.5x for Nginx and Memcached, for example. Chip manufacturers can optimize the 7nm platform for their needs, with core counts that can reach up to 128 cores (or as few as 4).

“This technology platform is designed for a lot of compute power that you could either put in the data center or stick out at the edge,” said Henry. “It’s very configurable for our customers so they can design how big or small they want those devices to be.”

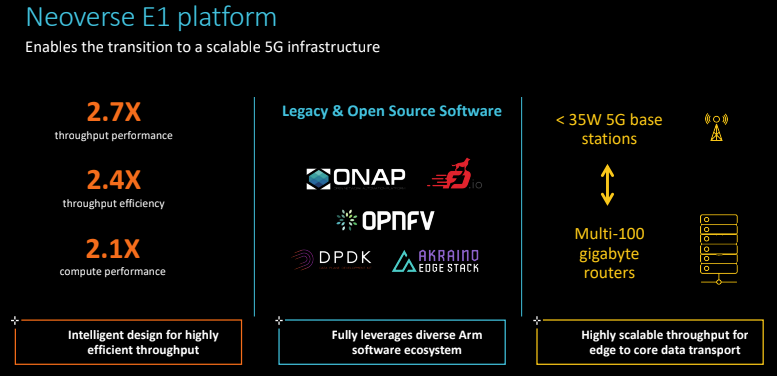

The E1 is also a 7nm platform, but with a stronger focus on edge computing use cases where you also need some compute power to maybe filter out data as it is generated, but where the focus is on moving that data quickly and efficiently. “The E1 is very highly efficient in terms of its ability to be able to move data through it while doing the right amount of compute as you move that data through,” explained Henry, who also stressed that the company made the decision to launch these two different platforms based on customer feedback.

There’s no point in launching these platforms without software support, though. A few years ago, that would have been a challenge because few commercial vendors supported their data center products on the Arm architecture. Today, many of the biggest open-source and proprietary projects and distributions run on Arm chips, including Red Hat Enterprise Linux, Ubuntu, SUSE, VMware, MySQL, OpenStack, Docker, Microsoft .NET, DOK and OPNFV. “We have lots of support across the space,” said Henry. “And then as you go down to that tier of languages and libraries and compilers, that’s a very large investment area for us at Arm. One of our largest investments in engineering is in software and working with the software communities.”

And as Henry noted, AWS also recently launched its Arm-based servers — and that surely gave the industry a lot more confidence in the platform, given that the biggest cloud supplier is now backing it, too.

Like virtually every big enterprise company, a few years ago, the German auto giant Daimler decided to invest in its own on-premises data centers. And while those aren’t going away anytime soon, the company today announced that it has successfully moved its on-premises big data platform to Microsoft’s Azure cloud. This new platform, which the company calls eXtollo, is Daimler’s first major service to run outside of its own data centers, though it’ll probably not be the last.

As Daimler’s head of its corporate center of excellence for advanced analytics and big data Guido Vetter told me, the company started getting interested in big data about five years ago. “We invested in technology — the classical way, on-premise — and got a couple of people on it. And we were investigating what we could do with data because data is transforming our whole business as well,” he said.

By 2016, the size of the organization had grown to the point where a more formal structure was needed to enable the company to handle its data at a global scale. At the time, the buzz phrase was “data lakes” and the company started building its own in order to build out its analytics capacities.

“Sooner or later, we hit the limits as it’s not our core business to run these big environments,” Vetter said. “Flexibility and scalability are what you need for AI and advanced analytics and our whole operations are not set up for that. Our backend operations are set up for keeping a plant running and keeping everything safe and secure.” But in this new world of enterprise IT, companies need to be able to be flexible and experiment — and, if necessary, throw out failed experiments quickly.

So about a year and a half ago, Vetter’s team started the eXtollo project to bring all the company’s activities around advanced analytics, big data and artificial intelligence into the Azure Cloud, and just over two weeks ago, the team shut down its last on-premises servers after slowly turning on its solutions in Microsoft’s data centers in Europe, the U.S. and Asia. All in all, the actual transition between the on-premises data centers and the Azure cloud took about nine months. That may not seem fast, but for an enterprise project like this, that’s about as fast as it gets (and for a while, it fed all new data into both its on-premises data lake and Azure).
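The dual-feed period described above, where new data goes to both the old and the new platform until the cutover, can be sketched roughly as follows. This is a minimal illustration and not Daimler's actual pipeline: `InMemoryStore`, `DualWriter` and `in_sync` are hypothetical names, and the in-memory lists merely stand in for the on-premises data lake and the cloud storage.

```python
class InMemoryStore:
    """Hypothetical stand-in for a data lake; keeps records in a list."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.records: list[dict] = []

    def write(self, record: dict) -> None:
        self.records.append(record)


class DualWriter:
    """Sends every new record to both the legacy and the new store."""

    def __init__(self, legacy: InMemoryStore, target: InMemoryStore) -> None:
        self.legacy = legacy
        self.target = target

    def write(self, record: dict) -> None:
        # Write to the legacy store first so it stays the source of truth
        # until the cutover, then mirror the record to the new store.
        self.legacy.write(record)
        self.target.write(record)


def in_sync(a: InMemoryStore, b: InMemoryStore) -> bool:
    """True when both stores hold the same records in the same order."""
    return a.records == b.records


on_prem = InMemoryStore("on-prem")
cloud = InMemoryStore("cloud")
writer = DualWriter(on_prem, cloud)

for reading in ({"vehicle": "A", "code": 17}, {"vehicle": "B", "code": 42}):
    writer.write(reading)

print(in_sync(on_prem, cloud))
```

Running both stores side by side like this lets a team compare them before switching off the old one; a real migration would also need error handling for the case where one of the two writes fails.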

If you work for a startup, then all of this probably doesn’t seem like a big deal, but for a more traditional enterprise like Daimler, even just giving up control over the physical hardware where your data resides was a major culture change and something that took quite a bit of convincing. In the end, the solution came down to encryption.

“We needed the means to secure the data in the Microsoft data center with our own means that ensure that only we have access to the raw data and work with the data,” explained Vetter. In the end, the company decided to use the Azure Key Vault to manage and rotate its encryption keys. Indeed, Vetter noted that knowing that the company had full control over its own data was what allowed this project to move forward.

Vetter tells me the company obviously looked at Microsoft’s competitors as well, but he noted that his team didn’t find a compelling offer from other vendors in terms of functionality and the security features that it needed.

Today, Daimler’s big data unit uses tools like HDInsight and Azure Databricks, which cover more than 90 percent of the company’s current use cases. In the future, Vetter also wants to make it easier for less experienced users to use self-service tools to launch AI and analytics services.

While cost is often a factor that counts against the cloud, because renting server capacity isn’t cheap, Vetter argues that this move will actually save the company money and that storage costs, especially, are going to be cheaper in the cloud than in its on-premises data center (and chances are that Daimler, given its size and prestige as a customer, isn’t exactly paying the same rack rate that others are paying for the Azure services).

As with so many big data and AI projects, predictions are the focus of much of what Daimler is doing. That may mean looking at a car’s data and error codes and helping the technician diagnose an issue or doing predictive maintenance on a commercial vehicle. Interestingly, the company isn’t currently bringing to the cloud any of its own IoT data from its plants. That’s all managed in the company’s on-premises data centers because it wants to avoid the risk of having to shut down a plant because its tools lost the connection to a data center, for example.

As online gaming becomes the new social forum for living out virtual lives, a new startup called Medal.tv has raised $3.5 million for its in-game clipping service to capture and share the Kodak moments and digital memories that are increasingly happening in places like Fortnite or Apex Legends.

Digital worlds like Fortnite are now far more than just a massively multiplayer gaming space. They’re places where communities form, where social conversations happen and where, increasingly, people are spending the bulk of their time online. They even host concerts — like the one from EDM artist Marshmello, which drew (according to the DJ himself) roughly 10 million players onto the platform.

While several services exist to provide clips of live streams from gamers who broadcast on platforms like Twitch, Medal.tv bills itself as the first to offer clipping services for the private games that more casual gamers play among friends and far-flung strangers around the world.

“Essentially the next generation is spending the same time inside games that we used to playing sports outside and things like that,” says Medal.tv’s co-founder and chief executive, Pim DeWitte. “It’s not possible to tell how far it will go. People will capture as many if not more moments for the reason that it’s simpler.”

The company marks a return to the world of gaming for DeWitte, a serial entrepreneur who first started coding when he was 13 years old.

Hailing from a small town in the Netherlands called Nijmegen, DeWitte first reaped the rewards of startup success with a gaming company called SoulSplit. Built on the back of his popular YouTube channel, the SoulSplit game was launched with DeWitte’s childhood friend, Iggy Harmsen, and a fellow online gamer, Josh Lipson, who came on board as SoulSplit’s chief technology officer.

At its height, SoulSplit was bringing in $1 million in revenue and employed roughly 30 people, according to interviews with DeWitte.

The company shut down in 2015 and the co-founders split up to pursue other projects. For DeWitte that meant a stint working with Doctors Without Borders on an app called MapSwipe that would use satellite imagery to better locate people in the event of a humanitarian crisis. He also helped the nonprofit develop a tablet that could be used by doctors deployed to treat Ebola outbreaks.

Then in 2017, as social gaming was becoming more popular on games like Fortnite, DeWitte and his co-founders returned to the industry to launch Medal.tv.

It initially started as a marketing tool to get people interested in playing the games that DeWitte and his co-founders were hoping to develop. But as the clipping service took off, DeWitte and co. realized they potentially had a more interesting social service on their hands.

“We were going to build a mobile app and were going to load a bunch of videos of people playing games and then we were going to load videos of our games,” DeWitte says.

The service allows users to capture the last 15 seconds of gameplay using different recording mechanisms based on game type. Medal.tv captures gameplay on a device and users can opt in to record sound as well.
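One common way to keep "the last 15 seconds" of anything available is a fixed-size rolling buffer that silently discards the oldest entries. The sketch below illustrates that general idea only; the article does not describe how Medal.tv actually records, and the `RollingClipBuffer` class, the 30 frames-per-second rate and the fake frame payloads are all assumptions made for the example.

```python
from collections import deque


class RollingClipBuffer:
    """Keeps only the most recent `seconds` worth of frames (hypothetical)."""

    def __init__(self, seconds: int = 15, fps: int = 30) -> None:
        # Assumed frame rate; the buffer holds seconds * fps frames at most.
        self.capacity = seconds * fps
        self._frames = deque(maxlen=self.capacity)

    def push(self, frame: bytes) -> None:
        # A deque with maxlen drops its oldest item once it is full.
        self._frames.append(frame)

    def clip(self) -> list:
        # Snapshot of the buffered frames, oldest first.
        return list(self._frames)


buffer = RollingClipBuffer(seconds=15, fps=30)
for i in range(1000):
    buffer.push(f"frame-{i}".encode())

saved = buffer.clip()
print(len(saved), saved[0], saved[-1])
```

Because the buffer never grows beyond its capacity, memory use stays constant no matter how long the game session runs, and saving a clip is just a copy of what is already in memory.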

“It is programmed so that it only records the game,” DeWitte says. “There is no inbound connection. It only calls for the API [and] all of the things that would be somewhat dangerous from a privacy perspective are all opt-in.”

There are roughly 30,000 users on the platform every week and around 15,000 daily active users, according to DeWitte. Launched last May, the company has been growing between 5 percent and 10 percent weekly. Typically, users share clips through Discord, WhatsApp and Instagram direct messages, he said.
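To get a feel for what 5 to 10 percent weekly growth means when it compounds, here is a small back-of-the-envelope calculation. It assumes the growth rate applies to the weekly user count and stays constant, neither of which the article confirms; `project_users` and the 12-week horizon are chosen purely for illustration.

```python
def project_users(current: float, weekly_growth: float, weeks: int) -> float:
    """Compound `current` users by `weekly_growth` for `weeks` weeks."""
    return current * (1 + weekly_growth) ** weeks


WEEKLY_USERS = 30_000  # figure quoted in the article
WEEKS = 12             # assumed horizon, roughly one quarter

low = project_users(WEEKLY_USERS, 0.05, WEEKS)
high = project_users(WEEKLY_USERS, 0.10, WEEKS)
print(f"After {WEEKS} weeks: {low:,.0f} to {high:,.0f} weekly users")
```

Under these assumptions the user base would grow by a factor of roughly 1.8 to 3.1 in twelve weeks, which shows how sensitive the outcome is to where in the 5 to 10 percent range the real rate falls.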

In addition to the consumer-facing clipping service, Medal also offers a data collection service that aggregates information about the clips that are shared by Medal’s users so game developers and streamers can get a sense of how clips are being shared and across which platforms.

“We look at clips as a form of communication and in most activity that we see, that’s how it’s being used,” says DeWitte.

But that information is also valuable to esports organizations to determine where they need to allocate new resources.

“Medal.tv Metrics is spectacular,” said Peter Levin, chairman of the Immortals esports organization, in a statement. “With it, any gaming organization gains clear, actionable insights into the organic reach of their content, and can build a roadmap to increase it in a measurable way.”

The activity that Medal was seeing was impressive enough to attract the attention of investors led by Backed VC and Initial Capital. Ridge Ventures, Makers Fund and Social Starts participated in the company’s $3.5 million round as well, with Alex Brunicki, a founding partner at Backed, and Matteo Vallone, principal at Initial, joining the company’s board.

“Emerging generations are experiencing moments inside games the same way we used to with sports and festivals growing up. Digital and physical identity are merging and the technology for gamers hasn’t evolved to support that,” said Brunicki in a statement.

Medal’s platform works with games like Apex Legends, Fortnite, Roblox, Minecraft and Old School RuneScape (where DeWitte first cut his teeth in gaming).

“Friends are the main driver of game discovery, and game developers benefit from shareable games as a result. Medal.tv is trying to enable that without the complexity of streaming,” said Vallone, who previously headed up games for Google Play Europe, and now sits on the Medal board.

Redis Labs, a startup that offers commercial services around the Redis in-memory data store (and which counts Redis creator and lead developer Salvatore Sanfilippo among its employees), today announced that it has raised a $60 million Series E funding round led by private equity firm Francisco Partners.

The firm didn’t participate in any of Redis Labs’ previous rounds, but existing investors Goldman Sachs Private Capital Investing, Bain Capital Ventures, Viola Ventures and Dell Technologies Capital all participated in this round.

In total, Redis Labs has now raised $146 million and the company plans to use the new funding to accelerate its go-to-market strategy and continue to invest in the Redis community and product development.

Current Redis Labs users include the likes of American Express, Staples, Microsoft, Mastercard and Atlassian. In total, the company now has more than 8,500 customers. Because Redis is pretty flexible, these customers use it as a database, cache and message broker, depending on their needs. The company’s flagship product is Redis Enterprise, which extends the open-source Redis platform with additional tools and services for enterprises. The company offers managed cloud services, which give businesses the choice between hosting on public clouds like AWS, GCP and Azure, as well as their private clouds, in addition to traditional software downloads and licenses for self-managed installs.

Redis Labs CEO Ofer Bengal told me the company isn’t cash positive yet. He also noted that the company didn’t need to raise this round but that he decided to do so in order to accelerate growth. “In this competitive environment, you have to spend a lot and push hard on product development,” he said.

It’s worth noting that he stressed that Francisco Partners has a reputation for taking companies forward and the logical next step for Redis Labs would be an IPO. “We think that we have a very unique opportunity to build a very large company that deserves an IPO,” he said.

Part of this new competitive environment also involves competitors that use other companies’ open-source projects to build their own products without contributing back. Redis Labs was one of the first of a number of open-source companies that decided to offer its newest releases under a new license that still allows developers to modify the code but that forces competitors that want to essentially resell it to buy a commercial license. Bengal specifically notes AWS in this context. It’s worth noting that this isn’t about the Redis database itself but about the additional modules that Redis Labs built. Redis Enterprise itself is closed-source.

“When we came out with this new license, there were many different views,” he acknowledged. “Some people condemned that. But after the initial noise calmed down — and especially after some other companies came out with a similar concept — the community now understands that the original concept of open source has to be fixed because it isn’t suitable anymore to the modern era where cloud companies use their monopoly power to adopt any successful open source project without contributing anything to it.”

Peltarion, a Swedish startup founded by former execs from companies like Spotify, Skype, King, TrueCaller and Google, today announced that it has raised a $20 million Series A funding round led by Euclidean Capital, the family office for hedge fund billionaire James Simons. Previous investors FAM and EQT Ventures also participated, and this round brings the company’s total funding to $35 million.

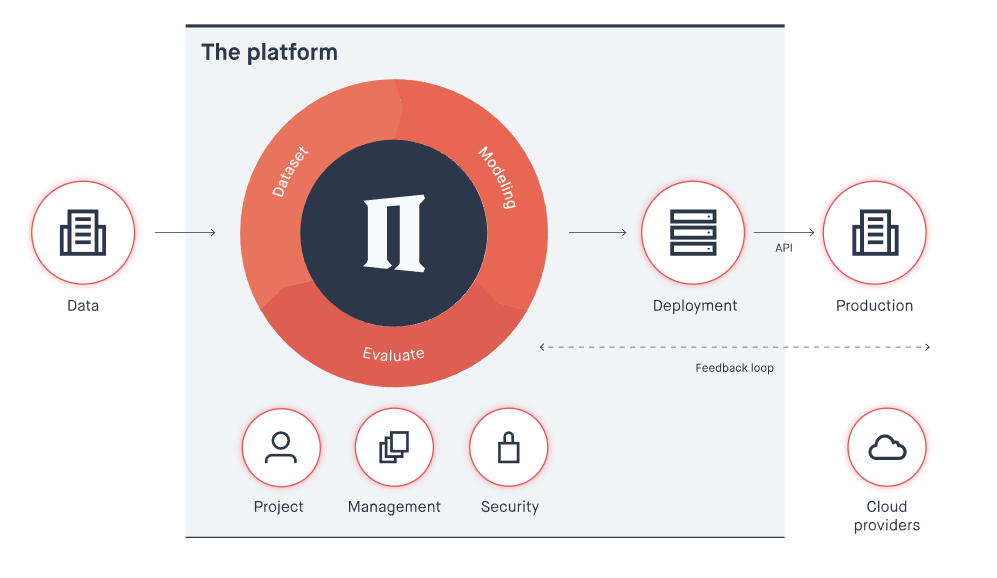

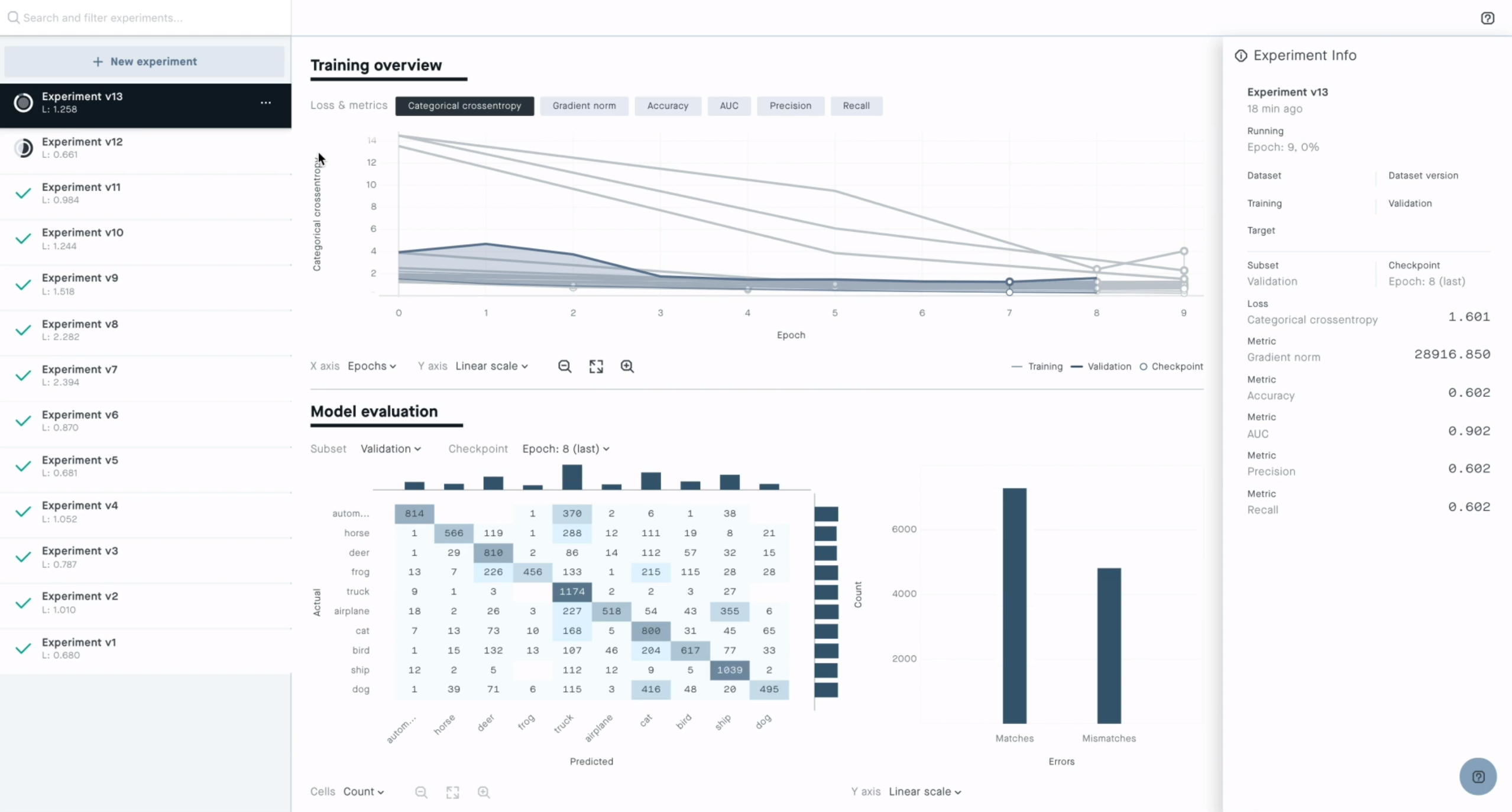

There is obviously no dearth of AI platforms these days. Peltarion focuses on what it calls “operational AI.” The service offers an end-to-end platform that lets you do everything from pre-processing your data to building models and putting them into production. All of this runs in the cloud and developers get access to a graphical user interface for building and testing their models. This setup, the company stresses, ensures that Peltarion’s users don’t have to deal with any of the low-level hardware or software and can instead focus on building their models.

“The speed at which AI systems can be built and deployed on the operational platform is orders of magnitude faster compared to the industry standard tools such as TensorFlow and require far fewer people and decreases the level of technical expertise needed,” Luka Crnkovic-Friis, Peltarion’s CEO and co-founder, tells me. “All this results in more organizations being able to operationalize AI and focusing on solving problems and creating change.”

In a world where businesses have a plethora of choices, though, why use Peltarion over more established players? “Almost all of our clients are worried about lock-in to any single cloud provider,” Crnkovic-Friis said. “They tend to be fine using storage and compute as they are relatively similar across all the providers and moving to another cloud provider is possible. Equally, they are very wary of the higher-level services that AWS, GCP, Azure, and others provide as it means a complete lock-in.”

Peltarion, of course, argues that its platform doesn’t lock in its users and that other platforms take far more AI expertise to produce commercially viable AI services. The company rightly notes that, outside of the tech giants, most companies still struggle with how to use AI at scale. “They are stuck on the starting blocks, held back by two primary barriers to progress: immature patchwork technology and skills shortage,” said Crnkovic-Friis.

The company will use the new funding to expand its development team and its teams working with its community and partners. It’ll also use the new funding for growth initiatives in the U.S. and other markets.

Taiwanese technology giant Foxconn International is backing Carbon Relay, a Boston-based startup emerging from stealth today that’s harnessing the algorithms used by companies like Facebook and Google for artificial intelligence to curb greenhouse gas emissions in the technology industry’s own backyard — the data center.

Already, the computing demands of the technology industry are responsible for 3 percent of total energy consumption — and the addition of new technologies like Bitcoin to the mix could add another half a percent to that figure within the next few years, according to Carbon Relay’s chief executive, Matt Provo.

That’s $25 billion in spending on energy per year across the industry, Provo says.

A former Apple employee, Provo went to Harvard Business School because he knew he wanted to be an entrepreneur and start his own business — and he wanted that business to solve a meaningful problem, he said.

The variability and dynamic nature of a data center, in terms of its thermodynamics and the makeup of the facility or building, make it an interesting problem for AI because humans can’t keep up.

“We knew what we wanted to focus on,” said Provo of himself and his two co-founders. “All three of us have an environmental sciences background as well… We were fired up about building something that was true AI that has positive value… the risk associated [with climate change] is going to hit in our lifetime, we were very inspired to build a company whose technology would have an impact on that.”

Carbon Relay’s mission and founding team, including Thibaut Perol and John Platt (two Harvard graduates with doctorates in applied mathematics), were able to attract some big backers.

The company has raised $6 million from industry giants like Foxconn and Boston-based angel investors, including Dr. James Cash — a director on the boards of Walmart, Microsoft, GE and State Street; Black Duck Software founder, Douglas Levin; Karim Lakhani, a director on the Mozilla Corporation board; and Paul Deninger, a director on the board of the building operations management company, Resideo (formerly Honeywell).

Provo and his team didn’t just raise the money to tackle data centers — and Foxconn’s involvement hints at the company’s broader goals. “My vision is that commercial HVAC systems or any machinery that operates in a business would not ship without our intelligence inside of it,” says Provo.

What’s more compelling is that the company’s technology works without exposing the underlying business to significant security risks, Provo says.

“In the end all we’re doing are sending these floats… these values. These values are mathematical directions for the actions that need to be taken,” he says.

Carbon Relay is already profitable, generating $4 million in revenue last year and on track for another year of steady growth, according to Provo.

Carbon Relay offers two products, Optimize and Predict, that gather information from existing HVAC devices and then control those systems continuously and automatically.

“Each data center is unique and enormously complex, requiring its own approach to managing energy use over time,” said Cash, who’s serving as the company’s chairman. “The Carbon Relay team is comprised of people who are passionate about creating a solution that will adapt to the needs of every large data center, creating a tangible and rapid impact on the way these organizations do business.”

Andela, the company that connects Africa’s top software developers with technology companies from the U.S. and around the world, has raised $100 million in a new round of funding.

The new financing from Generation Investment Management (the investment fund co-founded by former Vice President Al Gore) puts the valuation of the company at somewhere between $600 million and $700 million, based on data available from PitchBook on the company’s valuation following its previous $40 million funding.

Previous investors from that financing, including the Chan Zuckerberg Initiative, GV, Spark Capital and CRE Venture Capital, also participated.

“It’s increasingly clear that the future of work will be distributed, in part due to the severe shortage of engineering talent,” says Jeremy Johnson, co-founder and CEO of Andela. “Given our access to incredible talent across Africa, as well as what we’ve learned from scaling hundreds of engineering teams around the world, Andela is able to provide the talent and the technology to power high-performing teams and help companies adopt the distributed model faster.”

The company now has more than 200 customers paying for access to the roughly 1,100 developers Andela has trained and manages.

Since its founding in 2014, Andela has seen more than 130,000 applicants for those 1,100 slots. After a promising developer is onboarded and goes through a six-month training bootcamp at one of the company’s coding campuses in Nigeria, Kenya, Rwanda or Uganda, they’re placed with an Andela customer to work as a remote, full-time employee.

Andela receives anywhere from $50,000 to $120,000 per developer from a company and passes one-third of that directly on to the developer, with the remainder going to support the company’s operations and cover the cost of training and maintaining its facilities in Africa. Coders working with Andela sign a four-year commitment (with a two-year requirement to work at the company), after which they’re able to do whatever they want.
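The split described above is easy to work through with the quoted figures. The following sketch assumes the one-third share applies to the full per-developer fee; the function name `split_fee` is made up for this example, and the article does not say over what period the fee is charged.

```python
from fractions import Fraction

# One-third of the fee goes to the developer, per the article.
DEVELOPER_SHARE = Fraction(1, 3)


def split_fee(fee_usd: int) -> tuple[float, float]:
    """Return (developer_share, andela_share) for a per-developer fee."""
    developer = fee_usd * DEVELOPER_SHARE
    return float(developer), float(fee_usd - developer)


for fee in (50_000, 120_000):
    developer, andela = split_fee(fee)
    print(f"${fee:,}: developer ${developer:,.0f}, Andela ${andela:,.0f}")
```

At the two ends of the quoted range, that works out to roughly $16,700 to $40,000 for the developer, with about $33,300 to $80,000 remaining for Andela’s operations, training and facilities.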

Even after the two-year period is up, Andela boasts a 98 percent retention rate for developers, according to a person with knowledge of the company’s operations.

With the new cash in hand, Andela says it will double in size, hiring another thousand developers, and invest in new product development and its own engineering and data resources. Part of that product development will focus on refining its performance monitoring and management toolkit for overseeing remote workforces.

“We believe Andela is a transformational model to develop software engineers and deploy them at scale into the future enterprise,” says Lilly Wollman, co-head of Growth Equity at Generation Investment Management, in a statement. “The global demand for software engineers far exceeds supply, and that gap is projected to widen. Andela’s leading technology enables firms to effectively build and manage distributed engineering teams.”
