computing

Auto Added by WPeMatico


Google Cloud today announced the launch of Filestore High Scale, a new storage option — and tier of Google’s existing Filestore service — for workloads that can benefit from access to a distributed high-performance storage option.

With Filestore High Scale, which is based on technology Google acquired when it bought Elastifile in 2019, users can deploy shared file systems with hundreds of thousands of IOPS, tens of GB/s of throughput and at a scale of hundreds of TBs.

“Virtual screening allows us to computationally screen billions of small molecules against a target protein in order to discover potential treatments and therapies much faster than traditional experimental testing methods,” says Christoph Gorgulla, a postdoctoral research fellow at Harvard Medical School’s Wagner Lab, which has already put the new service through its paces. “As researchers, we hardly have the time to invest in learning how to set up and manage a needlessly complicated file system cluster, or to constantly monitor the health of our storage system. We needed a file system that could handle the load generated concurrently by thousands of clients, which have hundreds of thousands of vCPUs.”

The standard Google Cloud Filestore service already supports some of these use cases, but the company notes that it built Filestore High Scale specifically for high-performance computing (HPC) workloads. Today’s announcement focuses on biotech use cases around COVID-19. Filestore High Scale is meant to support tens of thousands of concurrent clients, which isn’t necessarily a standard use case, but developers who need this kind of power can now get it in Google Cloud.

In addition to High Scale, Google also today announced that all Filestore tiers now offer beta support for NFS IP-based access controls, an important new feature for those companies that have advanced security requirements on top of their need for a high-performance, fully managed file storage service.
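To make the idea of IP-based access control concrete, here is a minimal sketch of how a file server might map a client address to an access mode. It is an illustration only and not Filestore's actual configuration format or API: the rule table, the mode names and the `access_mode` function are all assumptions made for this example.

```python
import ipaddress

# Hypothetical rule table: (CIDR range, access mode), checked in order.
# These ranges and mode names are assumptions, not Filestore's real format.
RULES = [
    ("10.0.1.0/24", "READ_WRITE"),
    ("10.0.0.0/16", "READ_ONLY"),
]


def access_mode(client_ip, rules=RULES):
    """Return the mode of the first rule whose range contains client_ip, else None."""
    addr = ipaddress.ip_address(client_ip)
    for cidr, mode in rules:
        if addr in ipaddress.ip_network(cidr):
            return mode
    return None  # no rule matched: the client gets no access
```

Because the rules are evaluated in order, the narrower range is listed first so that it takes precedence over the broader one.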

Powered by WPeMatico

The OpenStack Foundation today announced that StarlingX, a container-based system for running edge deployments, is now a top-level project. With this, it joins the main OpenStack private and public cloud infrastructure project, the Airship lifecycle management system, Kata Containers and the Zuul CI/CD platform.

What makes StarlingX a bit different from some of these other projects is that it is a full stack for edge deployments — and in that respect, it’s maybe more akin to OpenStack than the other projects in the foundation’s stable. It uses open-source components from the Ceph storage platform, the KVM virtualization solution, Kubernetes and, of course, OpenStack and Linux. The promise here is that StarlingX can provide users with an easy way to deploy container and VM workloads to the edge, all while being scalable, lightweight and providing low-latency access to the services hosted on the platform.

Early StarlingX adopters include China UnionPay, China Unicom and T-Systems. The original codebase was contributed to the foundation by Intel and Wind River System in 2018. Since then, the project has seen 7,108 commits from 211 authors.

“The StarlingX community has made great progress in the last two years, not only in building great open source software but also in building a productive and diverse community of contributors,” said Ildiko Vancsa, ecosystem technical lead at the OpenStack Foundation. “The core platform for low-latency and high-performance applications has been enhanced with a container-based, distributed cloud architecture, secure booting, TPM device enablement, certificate management and container isolation. StarlingX 4.0, slated for release later this year, will feature enhancements such as support for Kata Containers as a container runtime, integration of the Ussuri version of OpenStack, and containerization of the remaining platform services.”

It’s worth remembering that the OpenStack Foundation has gone through a few changes in recent years. The most important of these is that it is now taking on other open-source infrastructure projects that are not part of the core OpenStack project but are strategically aligned with the organization’s mission. The first of these to graduate out of the pilot project phase and become top-level projects were Kata Containers and Zuul in April 2019, with Airship joining them in October.

Currently, the only pilot project for the OpenStack Foundation is its OpenInfra Labs project, a community of commercial vendors and academic institutions, including the likes of Boston University, Harvard, MIT, Intel and Red Hat, that are looking at how to better test open-source code in production-like environments.


After a series of developer previews, Google today released the first beta of Android 11, and with that, it is also making these pre-release versions available for over-the-air updates. This time around, the list of supported devices only includes the Pixel 2, 3, 3a and 4.

If you’re brave enough to try this early version (and I wouldn’t do so on your daily driver until a few more people have tested it), you can now enroll here. Like always, Google is also making OS images available for download and an updated emulator is available, too.

Google says the beta focuses on three key themes: people, controls and privacy.

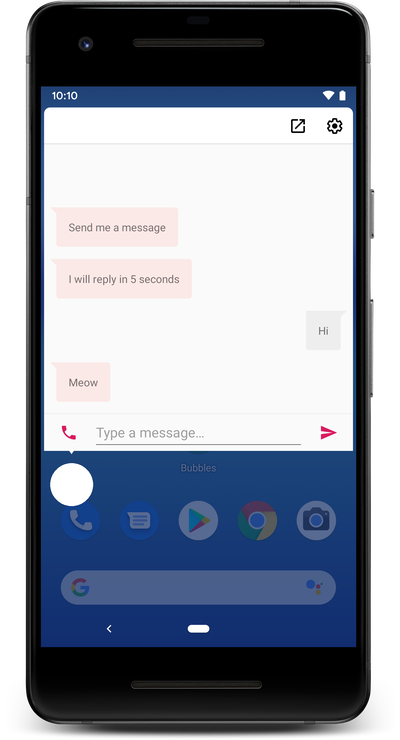

Like in previous updates, Google once again worked on improving notifications — in this case, conversation notifications, which now appear in a dedicated section at the top of the pull-down shade. From there, you will be able to take actions right from inside the notification or ask the OS to remind you of this conversation at a later time. Also new is built-in support in the notification system for what are essentially chat bubbles, which messaging apps can now use to notify you even as you are working (or playing) in another app.


Another new feature is consolidated keyboard suggestions. With these, Autofill apps and Input Method Editors (think password managers and third-party keyboards), can now securely offer context-specific entries in the suggestion strip. Until now, enabling autofill for a password manager, for example, often involved delving into multiple settings and the whole experience often felt like a bit of a hack.

For those users who rely on voice to control their phones, Android now uses a new on-device system that aims to understand what is on the screen and then automatically generates labels and access points for voice commands.

As for controls, Google is now letting you long-press the power button to bring up controls for your smart home devices (though companies that want to appear in this new menu need to make use of Google’s new API for this). In one of the next beta releases, Google will also enable media controls that will make it easier for users to switch the output device for their audio and video content.

In terms of privacy, Google is adding one-time permissions so that an app only gets access to your microphone, camera or location once, as well as auto-resets for permissions when you haven’t used an app for a while.

A few months ago, Google said that developers would need to get a user’s approval to access background location. That caused a bit of a stir among developers and now Google will keep its current policies in place until 2021 to give developers more time to update their apps.

In addition to these user-facing features, Google is also launching a series of updates aimed at Android developers. You can read more about them here.


The IBM Cloud is currently suffering a major outage, and with that, multiple services that are hosted on the platform are also down, including everybody’s favorite tech news aggregator, Techmeme.

It looks like the problems started around 2:30pm PT and spread from there. Best we can tell, this is a worldwide problem and involves a networking issue, but IBM’s own status page isn’t actually loading anymore and returns an internal server error, so we don’t quite know the extent of the outage or what triggered it. IBM Cloud’s Twitter account has also remained silent, though we found a status page for IBM Aspera hosted on a third-party server, which seems to confirm that this is likely a worldwide networking issue.

IBM Cloud, which published a paper about ensuring zero downtime in April, also suffered a minor outage in its Dallas data center in March.

We’ve reached out to IBM’s PR team and will update this post once we get more information.

Update #1 (5:06pm PT): we are seeing some reports that IBM Cloud is slowly coming back online, but the company’s status page also now seems to be functioning again and still shows that the cloud outage continues for the time being.

Update #2 (5:25pm PT): IBM keeps adding additional information to its status page, though networking issues seem to be at the core of this issue.


Axiom, a startup that helps companies deal with their internal data, has secured a new $4 million seed round led by U.K.-based Crane Venture Partners, with participation from LocalGlobe, Fly VC and Mango Capital. Notable angel investors include former Xamarin founder and current GitHub CEO Nat Friedman and Heroku co-founder Adam Wiggins. The company is also emerging from a relative stealth mode to reveal that it has now raised $7 million in funding since it was founded in 2017.

The company says it is also launching with an enterprise-grade solution to manage and analyze machine data “at any scale, across any type of infrastructure.” Axiom gives DevOps teams a cloud-native, enterprise-grade solution to store and query their data all the time in one interface — without the overhead of maintaining and scaling data infrastructure.

DevOps teams have spent a great deal of time and money managing their infrastructure, but often without being able to own and analyze their machine data. Despite all the tools at hand, managing and analyzing critical data has been difficult, slow and resource-intensive, taking up far too much money and time for organizations. This is what Axiom is addressing with its platform to manage machine data and surface insights, more cheaply, they say, than other solutions.

Co-founder and CEO Neil Jagdish Patel told TechCrunch: “DevOps teams are stuck under the pressure of that, because it’s up to them to deliver a solution to that problem. And the solutions that existed are quite, well, they’re very complex. They’re very expensive to run and time-consuming. So with Axiom, our goal is to try and reduce the time to solve data problems, but also allow businesses to store more data to query at whenever they want.”

Why did they work with Crane? “We needed to figure out how enterprise sales work and how to take this product to market in a way that makes sense for the people who need it. We spoke to different investors, but when I sat down with Crane they just understood where we were. They have this razor-sharp focus on how they get you to market and how you make sure your sales process and marketing is a success. It’s been beneficial to us as we’re three engineers, so you need that,” said Patel.

Commenting, Scott Sage, founder and partner at Crane Venture Partners added: “Neil, Seif and Gord are a proven team that have created successful products that millions of developers use. We are proud to invest in Axiom to allow them to build a business helping DevOps teams turn logging challenges from a resource-intense problem to a business advantage.”

Axiom co-founders Neil Jagdish Patel, Seif Lotfy and Gord Allott previously created Xamarin Insights that enabled developers to monitor and analyse mobile app performance in real time for Xamarin, the open-source cross-platform app development framework. Xamarin was acquired by Microsoft for between $400 and $500 million in 2016. Before working at Xamarin, the co-founders also worked together at Canonical, the private commercial company behind the Ubuntu Project.


Atlassian today launched a slew of DevOps-centric updates to a variety of its services, ranging from Bitbucket Cloud and Pipelines to Jira and others. While it’s quite a grab-bag of announcements, the overall idea behind them is to make it easier for teams to collaborate across functions as companies adopt DevOps as their development practice of choice.

“I’ve seen a lot of these tech companies go through their agile and DevOps transformations over the years,” Tiffany To, the head of agile and DevOps solutions at Atlassian told me. “Everyone wants the benefits of DevOps, but — we know it — it gets complicated when we mix these teams together, we add all these tools. As we’ve talked with a lot of our users, for them to succeed in DevOps, they actually need a lot more than just the toolset. They have to enable the teams. And so that’s what a lot of these features are focused on.”

As To stressed, the company also worked with several ecosystem partners, for example, to extend the automation features in Jira Software Cloud, which can now also be triggered by commits and pull requests in GitHub, GitLab and other code repositories that are integrated into Jira Software Cloud. “Now you get these really nice integrations for DevOps where we are enabling these developers to not spend time updating the issues,” To noted.

Indeed, a lot of the announcements focus on integrations with third-party tools. This, To said, is meant to allow Atlassian to meet developers where they are. If your code editor of choice is VS Code, for example, you can now try Atlassian’s new VS Code extension, which brings your task list from Jira Software Cloud to the editor, as well as a code review experience and CI/CD tracking from Bitbucket Pipelines.

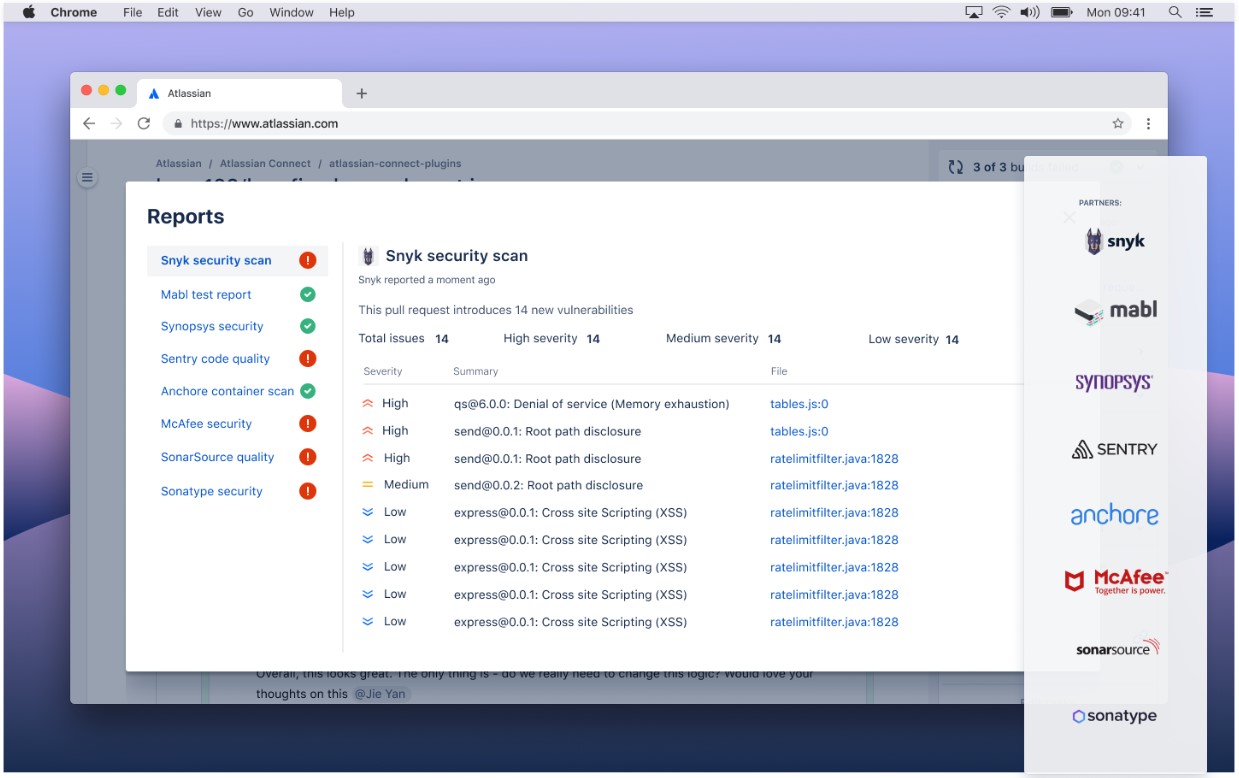

Also new is the “Your Work” dashboard in Bitbucket Cloud, which can now show you all of your assigned Jira issues, as well as Code Insights in Bitbucket Cloud. Code Insights features integrations with Mabl for test automation, Sentry for monitoring and Snyk for finding security vulnerabilities. These integrations were built on top of an open API, so teams can build their own integrations, too.

“There’s a really important trend to shift left. How do we remove the bugs and the security issues earlier in that dev cycle, because it costs more to fix it later,” said To. “You need to move that whole detection process much earlier in the software lifecycle.”

Jira Service Desk Cloud is getting a new Risk Management Engine that can score the risk of changes and auto-approve low-risk ones, as well as a new change management view to streamline the approval process.
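The general idea of scoring a change and auto-approving it below a threshold can be sketched as follows. This is a hypothetical illustration, not Atlassian's Risk Management Engine: the `Change` fields, the weights and the threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical cutoff: scores below this are approved without human review.
AUTO_APPROVE_THRESHOLD = 30


@dataclass
class Change:
    # All fields are illustrative assumptions, not Jira's data model.
    touches_production: bool
    services_affected: int
    has_rollback_plan: bool


def risk_score(change):
    """Combine a few signals into a 0-100 score using made-up weights."""
    score = 0
    if change.touches_production:
        score += 50
    # Cap the contribution of affected services at five services.
    score += min(change.services_affected, 5) * 8
    if not change.has_rollback_plan:
        score += 10
    return score


def decide(change):
    """Auto-approve low-risk changes and route everything else to a reviewer."""
    if risk_score(change) < AUTO_APPROVE_THRESHOLD:
        return "auto-approve"
    return "needs-review"
```

With these weights, a change to one non-production service with a rollback plan scores 8 and is auto-approved, while a production change touching three services without a rollback plan scores 84 and goes to review.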

Finally, there is a new Opsgenie and Bitbucket Cloud integration that centralizes alerts and promises to filter out the noise, as well as a nice incident investigation dashboard to help teams take a look at the last deployment that happened before the incident occurred.

“The reason why you need all these little features is that as you stitch together a very large number of tools […], there is just lots of these friction points,” said To. “And so there is this balance of, if you bought a single toolchain, all from one vendor, you would have fewer of these friction points, but then you don’t get to choose best of breed. Our mission is to enable you to pick the best tools because it’s not one-size-fits-all.”


Shortly after its use exploded in the post-office world of COVID-19, Zoom was banned by a variety of private and public actors, including SpaceX and the government of Taiwan. Critics allege its data strategy, particularly its privacy and security measures, were insufficiently robust, especially putting vulnerable populations, like children, at risk. NYC’s Department of Education, for instance, mandated teachers switch to alternative platforms like Microsoft Teams.

This isn’t a problem specific to Zoom. Other technology giants, from Alphabet and Apple to Facebook, have struggled with these strategic data issues, despite wielding armies of lawyers and data engineers, and have overcome them.

To remedy this, data leaders cannot stop at identifying how to improve their revenue-generating functions with data, what the former Chief Data Officer of AIG (one of our co-authors) calls “offensive” data strategy. Data leaders must also protect, fight for, and empower their key partners, like users and employees; that is, they must promote a “defensive” data strategy. Data offense and defense are core to trustworthy data-driven products.

While these data issues apply to most organizations, highly-regulated innovators in industries with large social impact (the “third wave”) must pay special attention. As Steve Case and the World Economic Forum articulate, the next phase of innovation will center on industries that merge the digital and the physical worlds, affecting the most intimate aspects of our lives. As a result, companies that balance insight and trust well, Boston Consulting Group predicts, will be the new winners.

Drawing from our work across the public, corporate, and startup worlds, we identify a few “insight killers” — then identify the trustworthy alternative. While trustworthy data strategy should involve end users and other groups outside the company as discussed here, the lessons below focus on the complexities of partnering within organizations, which deserve attention in their own right.

From the beginning of a data project, a trustworthy data leader asks, “Who are our partners and what prevents them from achieving their goals?” In other words: listen. This question can help identify the unmet needs of the 46% of surveyed technology and business teams who found their data groups have little value to offer them.

Putting this into action is the data leader of one highly-regulated AI health startup — Cognoa — who listened to tensions between its defensive and offensive data functions. Cognoa’s Chief AI Officer identified how healthcare data laws, like the Health Insurance Portability and Accountability Act, resulted in friction between his key partners: compliance officers and machine learning engineers. Compliance officers needed to protect end users’ privacy while data and machine learning engineers wanted faster access to data.

To meet these multifaceted goals, Cognoa first scoped down its solution by prioritizing its highest-risk databases. It then connected all of those databases using a single access-and-control layer.

This redesign satisfied its compliance officers because Cognoa’s engineers could then only access health data based on strict policy rules informed by healthcare data regulations. Furthermore, since these rules could be configured and transparently explained without code, it bridged communication gaps between its data and compliance roles. Its engineers were also elated because they no longer had to wait as long to receive privacy-protected copies.

Because its data leader started by listening to the struggles of its two key partners, Cognoa met both its defensive and offensive goals.
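A single access-and-control layer driven by declarative policy rules, as described above, might look roughly like the sketch below. It is a hypothetical illustration and not Cognoa's implementation: the roles, the dataset name, the field names and the `read_record` function are assumptions made for this example.

```python
# Hypothetical policy table: (role, dataset) -> fields that role may read.
# Keeping the rules as plain data means non-engineers can review them.
POLICIES = {
    ("ml_engineer", "patient_records"): {"age_months", "assessment_score"},
    ("compliance_officer", "patient_records"): {
        "patient_id", "name", "age_months", "assessment_score",
    },
}


def read_record(role, dataset, record):
    """Return only the fields the policy allows; deny access if no rule exists."""
    allowed = POLICIES.get((role, dataset))
    if allowed is None:
        raise PermissionError(f"{role!r} has no policy for {dataset!r}")
    return {key: value for key, value in record.items() if key in allowed}
```

Every read goes through the same function, so an engineer gets a privacy-filtered view immediately instead of waiting for a separately prepared copy, and a role with no rule is denied by default.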


In a surprise move, Mirantis acquired Docker’s Enterprise platform business at the end of last year, and while Docker itself is refocusing on developers, Mirantis kept the Docker Enterprise name and product. Today, Mirantis is rolling out its first major update to Docker Enterprise with the release of version 3.1.

For the most part, these updates are in line with what’s been happening in the container ecosystem in recent months. There’s support for Kubernetes 1.17 and improved support for Kubernetes on Windows (something the Kubernetes community has worked on quite a bit in the last year or so). Also new is Nvidia GPU integration in Docker Enterprise through a pre-installed device plugin, as well as support for Istio Ingress for Kubernetes and a new command-line tool for deploying clusters with the Docker Engine.

In addition to the product updates, Mirantis is also launching three new support options for its customers that now give them the option to get 24×7 support for all support cases, for example, as well as enhanced SLAs for remote managed operations, designated customer success managers and proactive monitoring and alerting. With this, Mirantis is clearly building on its experience as a managed service provider.

What’s maybe more interesting, though, is how this acquisition is playing out at Mirantis itself. Mirantis, after all, went through its fair share of ups and downs in recent years, from high-flying OpenStack platform to layoffs and everything in between.

“Why we do this in the first place and why at some point I absolutely felt that I wanted to do this is because I felt that this would be a more compelling and interesting company to build, despite maybe some of the short-term challenges along the way, and that very much turned out to be true. It’s been fantastic,” Mirantis CEO and co-founder Adrian Ionel told me. “What we’ve seen since the acquisition, first of all, is that the customer base has been dramatically more loyal than people had thought, including ourselves.”


Ionel admitted that he thought some users would defect because this is obviously a major change, at least from the customer’s point of view. “Of course we have done everything possible to have something for them that’s really compelling and we put out the new roadmap right away in December after the acquisition — and people bought into it at very large scale,” he said. With that, Mirantis retained more than 90% of the customer base and the vast majority of all of Docker Enterprise’s largest users.

Ionel, who almost seemed a bit surprised by this, noted that this helped the company turn in two “fantastic” quarters and that it was profitable in the last quarter, despite COVID-19.

“We wanted to go into this acquisition with a sober assessment of risks because we wanted to make it work, we wanted to make it successful because we were well aware that a lot of acquisitions fail,” he explained. “We didn’t want to go into it with a hyper-optimistic approach in any way — and we didn’t — and maybe that’s one of the reasons why we are positively surprised.”

He argues that the reason for the current success is that enterprises are doubling down on their container journeys and that they actually love what the Docker Enterprise platform offers, like its infrastructure independence, developer focus, security features and ease of use. One thing many large customers asked for was better support for multi-cluster management at scale, which today’s update delivers.

“Where we stand today, we have one product development team. We have one product roadmap. We are shipping a very big new release of Docker Enterprise. […] The field has been completely unified and operates as one salesforce, with record results. So things have been extremely busy, but good and exciting.”


When Docker sold off its enterprise division to Mirantis last fall, that didn’t mark the end of the company. In fact, Docker still exists and has refocused as a cloud-native developer tools vendor. Today it announced an expanded partnership with Microsoft around simplifying running Docker containers in Azure.

As Docker’s new mission suggests, the partnership involves tighter integration between Docker and a couple of Azure developer tools including Visual Studio Code and Azure Container Instances (ACI). According to Docker, it can take developers hours or even days to set up their containerized environment across the two sets of tools.

The idea of the integration is to make it easier, faster and more efficient to include Docker containers when developing applications with the Microsoft tool set. Docker CEO Scott Johnston says it’s a matter of giving developers a better experience.

“Extending our strategic relationship with Microsoft will further reduce the complexity of building, sharing and running cloud-native, microservices-based applications for developers. Docker and VS Code are two of the most beloved developer tools and we are proud to bring them together to deliver a better experience for developers building container-based apps for Azure Container Instances,” Johnston said in a statement.

Among the features they are announcing is the ability to log into Azure directly from the Docker command line interface, a big simplification that reduces going back and forth between the two sets of tools. What’s more, developers can set up a Microsoft ACI environment complete with a set of configuration defaults. Developers will also be able to switch easily between their local desktop instance and the cloud to run applications.

These and other integrations are designed to make it easier for users of both Azure and Docker to work in the Microsoft cloud service without having to jump through a lot of extra hoops to do it.

It’s worth noting that these integrations are starting in Beta, but the company promises they should be released some time in the second half of this year.


Microsoft today announced the launch of the Microsoft Cloud for Healthcare, an industry-specific cloud solution for healthcare providers. This is the first in what is likely going to be a set of cloud offerings that target specific verticals and extends a trend we’ve seen among large cloud providers (especially Google) that tailor specific offerings to the needs of individual industries.

“More than ever, being connected is critical to create an individualized patient experience,” write Tom McGuinness, corporate vice president, Worldwide Health at Microsoft, and Dr. Greg Moore, corporate vice president, Microsoft Health, in today’s announcement. “The Microsoft Cloud for Healthcare helps healthcare organizations to engage in more proactive ways with their patients, allows caregivers to improve the efficiency of their workflows and streamline interactions with patients with more actionable results.”

Like similar Microsoft-branded offerings from the company, Cloud for Healthcare is about bringing together a set of capabilities that already exist inside of Microsoft. In this case, that includes Microsoft 365, Dynamics, Power Platform and Azure, including Azure IoT for monitoring patients. The solution sits on top of a common data model that makes it easier to share data between applications and analyze the data they gather.

“By providing the right information at the right time, the Microsoft Cloud for Healthcare will help hospitals and care providers better manage the needs of patients and staff and make resource deployments more efficient,” Microsoft says in its press materials. “This solution also improves end-to-end security compliance and accessibility of data, driving better operational outcomes.”

Since Microsoft never passes up a chance to talk up Teams, the company also notes that its communications service will allow healthcare workers to more efficiently communicate with each other, but it also notes that Teams now includes a Bookings app to help its users — including healthcare providers — schedule, manage and conduct virtual visits in Teams. Some of the healthcare systems that are already using Teams include St. Luke’s University Health Network, Stony Brook Medicine, Confluent Health and Calderdale & Huddersfield NHS Foundation Trust in the U.K.

In addition to Microsoft’s own tools, the company is also working with its large partner ecosystem to provide healthcare providers with specialized services. These include the likes of Epic, Allscripts, GE Healthcare, Adaptive Biotechnologies and Nuance.
