computing

Auto Added by WPeMatico

After a series of closed alpha tests, Microsoft’s Xbox Game Studios and Asobo Studio today announced that the next-gen Microsoft Flight Simulator 2020 will launch on August 18. Pre-orders are now live and FS 2020 will come in three editions, standard ($59.99), deluxe ($89.99) and premium deluxe ($119.99), with the more expensive versions featuring more planes and handcrafted international airports.

The last part may come as a bit of a surprise, given that Microsoft and Asobo are using assets from Bing Maps and some AI magic on Azure to essentially recreate the Earth — and all of its airports — in Flight Simulator 2020. Still, the team must have spent some extra time on making some of these larger airports especially realistic and today, if you were to buy even one of these larger airports as an add-on for Flight Simulator X or X-Plane, you’d easily be spending $30 or more.

The default edition features 20 planes and 30 hand-modeled airports, while the deluxe edition bumps that up to 25 planes and 35 airports and the high-end version comes with 30 planes and 40 airports.

Among those airports not modeled in all their glorious detail in the default edition (they are still available there, by the way — just without some of the extra detail) are the likes of Amsterdam Schiphol, Chicago O’Hare, Denver, Frankfurt, Heathrow and San Francisco.

The same holds true for planes, with the 787 only available in the deluxe package, for example. Still, based on what Asobo has shown in its regular updates so far, even the 20 planes in the standard edition have been modeled in far more detail than in previous versions, and maybe even beyond what some add-ons provide today.

Because a lot of what Microsoft and Asobo are doing here involves using cloud technology to, for example, stream some of the more detailed scenery to your computer on demand, chances are we’ll see regular content updates for these various editions as well, though the details here aren’t yet clear.

“Your fleet of planes and detailed airports from whatever edition you choose are all available on launch day as well as access to the ongoing content updates that will continually evolve and expand the flight simulation platform,” is what Microsoft has to say about this for the time being.

Chances are we will get more details in the coming weeks, as Flight Simulator 2020 is about to enter its closed beta phase.

Powered by WPeMatico

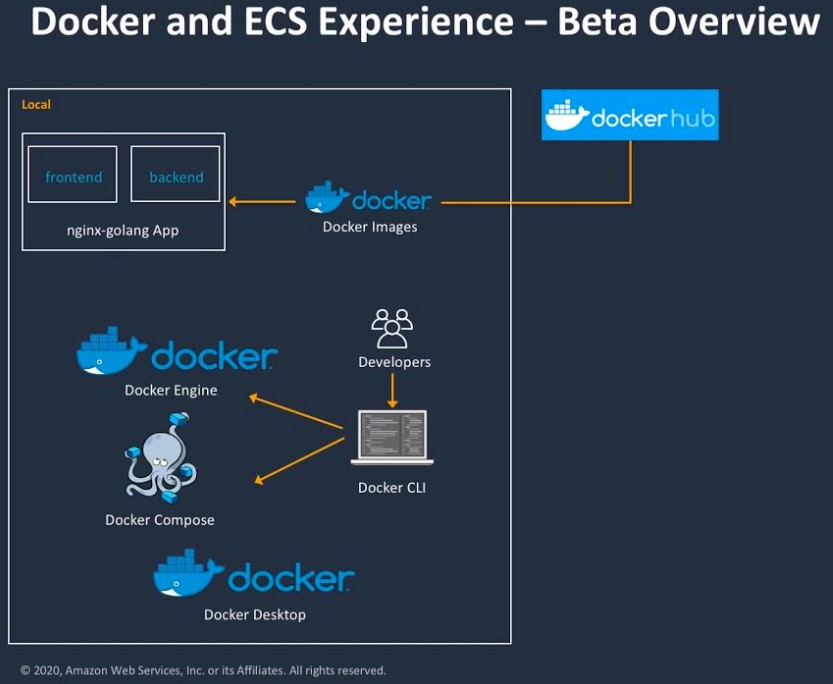

Docker and AWS today announced a new collaboration that introduces a deep integration between Docker’s Compose and Desktop developer tools and AWS’s Elastic Container Service (ECS) and ECS on AWS Fargate. Previously, the two companies note, the workflow to take Compose files and run them on ECS was often challenging for developers. Now they have simplified this process to make switching between running containers locally and on ECS far easier.

“With a large number of containers being built using Docker, we’re very excited to work with Docker to simplify the developer’s experience of building and deploying containerized applications to AWS,” said Deepak Singh, the VP for compute services at AWS. “Now customers can easily deploy their containerized applications from their local Docker environment straight to Amazon ECS. This accelerated path to modern application development and deployment allows customers to focus more effort on the unique value of their applications, and less time on figuring out how to deploy to the cloud.”

In a bit of a surprise move, Docker last year sold off its enterprise business to Mirantis to solely focus on cloud-native developer experiences.

“In November, we separated the enterprise business, which was very much focused on operations, CXOs and a direct sales model, and we sold that business to Mirantis,” Docker CEO Scott Johnston told TechCrunch’s Ron Miller earlier this year. “At that point, we decided to focus the remaining business back on developers, which was really Docker’s purpose back in 2013 and 2014.”

Today’s move is an example of this new focus, given that the workflow issues this partnership addresses had been around for quite a while already.

It’s worth noting that Docker also recently engaged in a strategic partnership with Microsoft to integrate the Docker developer experience with Azure’s Container Instances.

Google, in collaboration with a number of academic leaders and its consulting partner SADA Systems, today announced the launch of the Open Usage Commons, a new organization that aims to help open-source projects manage their trademarks.

To be fair, at first glance, open-source trademarks may not sound like they would be a major problem (or even a really interesting topic), but there’s more here than meets the eye. As Google’s director of open source Chris DiBona told me, trademarks have increasingly become an issue for open-source projects, not necessarily because there have been legal issues around them, but because commercial entities that want to use the logo or name of an open-source project on their websites, for example, don’t have the reassurance that they are free to use those trademarks.

“One of the things that’s been rearing its ugly head over the last couple years has been trademarks,” he told me. “There’s not a lot of trademarks in open-source software in general, but particularly at Google, and frankly the higher tier, the more popular open-source projects, you see them more and more over the last five years. If you look at open-source licensing, they don’t treat trademarks at all the way they do copyright and patents, even Apache, which is my favorite license, they basically say, nope, not touching it, not our problem, you go talk.”

Traditionally, open-source licenses didn’t cover trademarks because there simply weren’t a lot of trademarks in the ecosystem to worry about. One of the exceptions here was Linux, a trademark that is now managed by the Linux Mark Institute on behalf of Linus Torvalds.

With that, commercial companies aren’t sure how to handle this situation and developers also don’t know how to respond to these companies when they ask them questions about their trademarks.

“What we wanted to do is give guidance around how you can share trademarks in the same way that you would share patents and copyright in an open-source license […],” DiBona explained. “And the idea is to basically provide that guidance, you know, provide that trademarks file, if you will, that you include in your source code.”

Google itself is putting three of its own open-source trademarks into this new organization: the Angular web application framework for mobile, the Gerrit code review tool and the Istio service mesh. “All three of them are kind of perfect for this sort of experiment because they’re under active development at Google, they have a trademark associated with them, they have logos and, in some cases, a mascot.”

One of those mascots is Diffi, the Kung Fu Code Review Cuckoo, because, as DiBona noted, “we were trying to come up with literally the worst mascot we could possibly come up with.” It’s now up to the Open Usage Commons to manage that trademark.

DiBona also noted that all three projects have third parties shipping products based on these projects (think Gerrit as a service).

Another thing DiBona stressed is that this is an independent organization. Besides himself, Jen Phillips, a senior engineering manager for open source at Google, is also on the board. But the team also brought in SADA’s CTO Miles Ward (who was previously at Google); Allison Randal, the architect of the Parrot virtual machine and member of the board of directors of the Perl Foundation and OpenStack Foundation, among others; Charles Lee Isbell Jr., the dean of the Georgia Institute of Technology College of Computing; and Cliff Lampe, a professor at the School of Information at the University of Michigan and a “rising star,” as DiBona pointed out.

“These are people who really have the best interests of computer science at heart, which is why we’re doing this,” DiBona noted. “Because the thing about open source — people talk about it all the time in the context of business and all the rest. The reason I got into it is because through open source we could work with other people in this sort of fertile middle space and sort of know what the deal was.”

Update: even though Google argues that the Open Usage Commons is complementary to other open source organizations, the Cloud Native Computing Foundation (CNCF) released the following statement by Chris Aniszczyk, the CNCF’s CTO: “Our community members are perplexed that Google has chosen to not contribute the Istio project to the Cloud Native Computing Foundation (CNCF), but we are happy to help guide them to resubmit their old project proposal from 2017 at any time. In the end, our community remains focused on building and supporting our service mesh projects like Envoy, linkerd and interoperability efforts like the Service Mesh Interface (SMI). The CNCF will continue to be the center of gravity of cloud native and service mesh collaboration and innovation.”

Suse, which describes itself as “the world’s largest independent open source company,” today announced that it has acquired Rancher Labs, a company that has long focused on making it easier for enterprises to manage their container clusters.

The two companies did not disclose the price of the acquisition, but Rancher was well funded, with a total of $95 million in investments. It’s also worth mentioning that it has only been a few months since the company announced its $40 million Series D round led by Telstra Ventures. Other investors include the likes of Mayfield and Nexus Venture Partners, GRC SinoGreen and F&G Ventures.

Like similar companies, Rancher originally focused on Docker infrastructure before it shifted its emphasis to Kubernetes, once that became the de facto standard for container orchestration. Unsurprisingly, this is also why Suse is now acquiring this company. After a number of ups and downs and various ownership changes, Suse has now found its footing again and today’s acquisition shows that it’s aiming to capitalize on its current strengths.

Just last month, the company reported the annual contract value of its booking increased by 30% year over year and that it saw a 63% increase in customer deals worth more than $1 million in the last quarter, with its cloud revenue growing 70%. While it is still in the Linux distribution business that the company was founded on, today’s Suse is a very different company, offering various enterprise platforms (including its Cloud Foundry-based Cloud Application Platform), solutions and services. And while it already offered a Kubernetes-based container platform, Rancher’s expertise will only help it to build out this business.

“This is an incredible moment for our industry, as two open source leaders are joining forces. The merger of a leader in Enterprise Linux, Edge Computing and AI with a leader in Enterprise Kubernetes Management will disrupt the market to help customers accelerate their digital transformation journeys,” said Suse CEO Melissa Di Donato in today’s announcement. “Only the combination of SUSE and Rancher will have the depth of a globally supported and 100% true open source portfolio, including cloud native technologies, to help our customers seamlessly innovate across their business from the edge to the core to the cloud.”

The company describes today’s acquisition as the first step in its “inorganic growth strategy” and Di Donato notes that this acquisition will allow the company to “play an even more strategic role with cloud service providers, independent hardware vendors, systems integrators and value-added resellers who are eager to provide greater customer experiences.”

Nvidia today announced that its new Ampere-based data center GPUs, the A100 Tensor Core GPUs, are now available in alpha on Google Cloud. As the name implies, these GPUs were designed for AI workloads, as well as data analytics and high-performance computing solutions.

The A100 promises a significant performance improvement over previous generations. Nvidia says the A100 can boost training and inference performance by over 20x compared to its predecessors (though you’ll see 6x or 7x improvements in most benchmarks) and tops out at about 19.5 TFLOPs in single-precision performance and 156 TFLOPs for Tensor Float 32 workloads.

“Google Cloud customers often look to us to provide the latest hardware and software services to help them drive innovation on AI and scientific computing workloads,” said Manish Sainani, Director of Product Management at Google Cloud, in today’s announcement. “With our new A2 VM family, we are proud to be the first major cloud provider to market Nvidia A100 GPUs, just as we were with Nvidia’s T4 GPUs. We are excited to see what our customers will do with these new capabilities.”

Google Cloud users can get access to instances with up to 16 of these A100 GPUs, for a total of 640GB of GPU memory and 1.3TB of system memory.

When Troy Hunt launched Have I Been Pwned in late 2013, he wanted it to answer a simple question: Have you fallen victim to a data breach?

Seven years later, the data-breach notification service processes thousands of requests each day from users who check to see if their data was compromised (or pwned, with a hard ‘p’) by the hundreds of data breaches in its database, including some of the largest breaches in history. As the database has grown, now sitting just below the 10 billion breached-records mark, the answer to Hunt’s original question has become clearer.

“Empirically, it’s very likely,” Hunt told me from his home on Australia’s Gold Coast. “For those of us that have been on the internet for a while it’s almost a certainty.”

Have I Been Pwned started out as Hunt’s pet project to learn the basics of Microsoft’s cloud, but it quickly exploded in popularity, driven in part by its ease of use, but largely by individuals’ curiosity.

As the service grew, Have I Been Pwned took on a more proactive security role by allowing browsers and password managers to bake in a backchannel to Have I Been Pwned to warn against using previously breached passwords in its database. It was a move that also served as a critical revenue stream to keep down the site’s running costs.

But Have I Been Pwned’s success should be attributed almost entirely to Hunt, both as its founder and its only employee, a one-man band running an unconventional startup, which, despite its size and limited resources, turns a profit.

As the workload needed to support Have I Been Pwned ballooned, Hunt said the strain of running the service without outside help began to take its toll. There was an escape plan: Hunt put the site up for sale. But, after a tumultuous year, he is back where he started.

Ahead of its next big 10-billion milestone mark, Have I Been Pwned shows no signs of slowing down.

Even long before Have I Been Pwned, Hunt was no stranger to data breaches.

By 2011, he had cultivated a reputation for collecting and dissecting small — for the time — data breaches and blogging about his findings. His detailed and methodical analyses showed time and again that internet users were using the same passwords from one site to another. So when one site was breached, hackers already had the same password to a user’s other online accounts.

Then came the Adobe breach, the “mother of all breaches” as Hunt described it at the time: Over 150 million user accounts had been stolen and were floating around the web.

Hunt obtained a copy of the data and, with a handful of other breaches he had already collected, loaded them into a database searchable by a person’s email address, which Hunt saw as the most common denominator across all the sets of breached data.

And Have I Been Pwned was born.

It didn’t take long for its database to swell. Breached data from Sony, Snapchat and Yahoo soon followed, racking up millions more records in its database. Have I Been Pwned soon became the go-to site to check if you had been breached. Morning news shows would blast out its web address, resulting in a huge spike in users — enough at times to briefly knock the site offline. Hunt has since added some of the biggest breaches in the internet’s history: MySpace, Zynga, Adult Friend Finder, and several huge spam lists.

As Have I Been Pwned grew in size and recognition, Hunt remained its sole proprietor, responsible for everything from organizing and loading the data into the database to deciding how the site should operate, including its ethics.

Hunt takes a “what do I think makes sense” approach to handling other people’s breached personal data. With nothing to compare Have I Been Pwned to, Hunt had to write the rules for how he handles and processes so much breach data, much of it highly sensitive. He does not claim to have all of the answers, but relies on transparency to explain his rationale, detailing his decisions in lengthy blog posts.

His decision to only let users search for their email address makes logical sense, driven by the site’s only mission, at the time, to tell a user if they had been breached. But it was also a decision centered around user privacy that helped to future-proof the service against some of the most sensitive and damaging data he would go on to receive.

In 2015, Hunt obtained the Ashley Madison breach. Millions of people had accounts on the site, which encourages users to have an affair. The breach made headlines, first for the breach, and again when several users died by suicide in its wake.

The hack of Ashley Madison was one of the most sensitive entered into Have I Been Pwned, and ultimately changed how Hunt approached data breaches that involved people’s sexual preferences and other personal data. (AP Photo/Lee Jin-man, File)

Hunt diverged from his usual approach, acutely aware of its sensitivities. The breach was undeniably different. He recounted a story of one person who told him how their local church posted a list of the names of everyone in the town who was in the data breach.

“It’s clearly casting a moral judgment,” he said, referring to the breach. “I don’t want Have I Been Pwned to enable that.”

Unlike earlier, less sensitive breaches, Hunt decided that he would not allow anyone to search for the data. Instead, he purpose-built a new feature allowing users who had verified their email addresses to see if they were in more sensitive breaches.

“The purposes for people being in that data breach were so much more nuanced than what anyone ever thought,” Hunt said. One user told him he was in there after a painful break-up and had since remarried but was labeled later as an adulterer. Another said she created an account to catch her husband, suspected of cheating, in the act.

“There is a point at which being publicly searchable poses an unreasonable risk to people, and I make a judgment call on that,” he explained.

The Ashley Madison breach reinforced his view on keeping as little data as possible. Hunt frequently fields emails from data breach victims asking for their data, but he declines every time.

“It really would not have served my purpose to load all of the personal data into Have I Been Pwned and let people look up their phone numbers, their sexualities, or whatever was exposed in various data breaches,” said Hunt.

“If Have I Been Pwned gets pwned, it’s just email addresses,” he said. “I don’t want that to happen, but it’s a very different situation if, say, there were passwords.”

But those remaining passwords haven’t gone to waste. Hunt also lets users search more than half a billion standalone passwords, allowing users to search to see if any of their passwords have also landed in Have I Been Pwned.

Anyone, even tech companies, can access that trove, which he calls Pwned Passwords. Browser makers and password managers, like Mozilla and 1Password, have baked in access to Pwned Passwords to help prevent users from using a previously breached and vulnerable password. Western governments, including the U.K. and Australia, also rely on Have I Been Pwned to monitor for breached government credentials, a service Hunt also offers for free.
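
The article does not say how browsers and password managers query Pwned Passwords. One publicly documented route is the service's range endpoint, where a client sends only the first five hex characters of a password's SHA-1 hash and compares the returned suffixes locally. The Python sketch below walks through that flow offline. It is an assumption about how such an integration could work, not a description of Mozilla's or 1Password's code, and the function names and the sample response are made up for illustration.

```python
import hashlib
from typing import Tuple


def split_sha1(password: str, prefix_len: int = 5) -> Tuple[str, str]:
    """Return (prefix, suffix) of the uppercase SHA-1 hex digest of a password."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:prefix_len], digest[prefix_len:]


def breach_count(suffix: str, range_body: str) -> int:
    """Look up a hash suffix in a range response made of 'SUFFIX:COUNT' lines.

    Returns 0 when the suffix is not listed.
    """
    for line in range_body.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate.upper() == suffix.upper():
            return int(count)
    return 0


# Only the 5-character prefix would ever leave the machine, e.g. in a request
# to https://api.pwnedpasswords.com/range/<prefix>. No request is made here.
prefix, suffix = split_sha1("password")

# Hypothetical response body; the counts are invented for this example.
sample_body = "0" * 35 + ":3\n" + suffix + ":42\n"

print(prefix, breach_count(suffix, sample_body))
```

Because the comparison happens on the client, the service never sees the full hash, let alone the password itself.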

“It’s enormously validating,” he said. “Governments, for the most part, are trying to do things to keep countries and individuals safe — working under extreme duress and they don’t get paid much,” he said.

Hunt recognizes that Have I Been Pwned, as much as openness and transparency are core to its operation, lives in an online purgatory: under any other circumstances, especially in a commercial enterprise, he would be drowning in regulatory hurdles and red tape. And while the companies whose data Hunt loads into his database would probably prefer otherwise, Hunt told me he has never received a legal threat for running the service.

“I’d like to think that Have I Been Pwned is at the far-legitimate side of things,” he said.

Others who have tried to replicate the success of Have I Been Pwned haven’t been as lucky.

“There have been similar services that have popped up,” said Hunt. “They’ve been for-profit, and they’ve been indicted.”

LeakedSource was, for a time, one of the largest sellers of breach data on the web. I know, because my reporting broke some of their biggest gets: music streaming service Last.fm, adult dating site AdultFriendFinder, and Russian internet giant Rambler.ru to name a few. But what caught the attention of federal authorities was that LeakedSource, whose operator later pleaded guilty to charges related to trafficking identity theft information, indiscriminately sold access to anyone else’s breach data.

“There is a very legitimate case to be made for a service to give people access to their data at a price.”

Hunt said he would “sleep perfectly fine” charging users a fee to access their data. “I just wouldn’t want to be accountable for it if it goes wrong,” he said.

Five years into Have I Been Pwned, Hunt could feel the burnout coming.

“I could see a point where I would be if I didn’t change something,” he told me. “It really felt like for the sustainability of the project, something had to change.”

He said he went from spending a fraction of his time on the project to well over half. Aside from juggling the day-to-day — collecting, organizing, deduplicating and uploading vast troves of breached data — Hunt was responsible for the entirety of the site’s back office upkeep — its billing and taxes — on top of his own.

The plan to sell Have I Been Pwned was codenamed Project Svalbard, named after the Norwegian seed vault that Hunt likened Have I Been Pwned to, a massive stockpile of “something valuable for the betterment of humanity,” he wrote announcing the sale in June 2019. It would be no easy task.

Hunt said the sale was to secure the future of the service. It was also a decision that would have to secure his own. “They’re not buying Have I Been Pwned, they’re buying me,” said Hunt. “Without me, there’s just no deal.” In his blog post, Hunt spoke of his wish to build out the service and reach a larger audience. But, he told me, it was not about the money.

As its sole custodian, Hunt said that as long as someone kept paying the bills, Have I Been Pwned would live on. “But there was no survivorship model to it,” he admitted. “I’m just one person doing this.”

By selling Have I Been Pwned, the goal was a more sustainable model that took the pressure off him, and, he joked, the site wouldn’t collapse if he got eaten by a shark, an occupational hazard for living in Australia.

But chief above all, the buyer had to be the perfect fit.

Hunt met with dozens of potential buyers, many of them in Silicon Valley. He knew what the buyer would look like, but he didn’t yet have a name. Hunt wanted to ensure that whoever bought Have I Been Pwned upheld its reputation.

“Imagine a company that had no respect for personal data and was just going to abuse the crap out of it,” he said. “What does that do for me?” Some potential buyers were driven by profits. Hunt said any profits were “ancillary.” Buyers were only interested in a deal that would tie Hunt to their brand for years, buying the exclusivity to his own recognition and future work — that’s where the value in Have I Been Pwned is.

Hunt was looking for a buyer with whom he knew Have I Been Pwned would be safe if he were no longer involved. “It was always about a multiyear plan to try and transfer the confidence and trust people have in me to some other organizations,” he said.

Hunt testifies to the House Energy Subcommittee on Capitol Hill in Washington, Thursday, Nov. 30, 2017. (AP Photo/Carolyn Kaster)

The vetting process and due diligence were “insane,” said Hunt. “Things just drew out and drew out,” he said. The process went on for months. Hunt spoke candidly about the stress of the year. “I separated from my wife early last year around about the same time as the [sale process],” he said. They later divorced. “You can imagine going through this at the same time as the separation,” he said. “It was enormously stressful.”

Then, almost a year later, Hunt announced the sale was off. Barred from discussing specifics thanks to non-disclosure agreements, Hunt wrote in a blog post that the buyer, whom he was set on signing with, made an unexpected change to their business model that “made the deal infeasible.”

“It came as a surprise to everyone when it didn’t go through,” he told me. It was the end of the road.

Looking back, Hunt maintains it was “the right thing” to walk away. But the process left him back at square one without a buyer and personally down hundreds of thousands in legal fees.

After a bruising and exhausting year for his future and his personal life, Hunt took time to recuperate, clamoring for a normal schedule. Then the coronavirus hit. Australia fared lightly in the pandemic by international standards, lifting its lockdown after a brief quarantine.

Hunt said he will keep running Have I Been Pwned. It wasn’t the outcome he wanted or expected, but Hunt said he has no immediate plans for another sale. For now it’s “business as usual,” he said.

In June alone, Hunt loaded over 102 million records into Have I Been Pwned’s database. Relatively speaking, it was a quiet month.

“We’ve lost control of our data as individuals,” he said. But not even Hunt is immune. With the database at close to 10 billion records, Hunt himself has been ‘pwned’ more than 20 times, he said.

Earlier this year Hunt loaded a massive trove of email addresses from a marketing database — dubbed ‘Lead Hunter’ — some 68 million records fed into Have I Been Pwned. Hunt said someone had scraped a ton of publicly available web domain record data and repurposed it as a massive spam database. But someone left that spam database on a public server, without a password, for anyone to find. Someone did, and passed the data to Hunt. Like any other breach, he took the data, loaded it in Have I Been Pwned, and sent out email notifications to the millions who have subscribed.

“Job done,” he said. “And then I got an email from Have I Been Pwned saying I’d been pwned.”

He laughed. “It still surprises me the places that I turn up.”

When the inventor of AWS Lambda, Tim Wagner, and the former head of blockchain at AWS, Shruthi Rao, co-found a startup, it’s probably worth paying attention. Vendia, as the new venture is called, combines the best of serverless and blockchain to help build a truly multicloud serverless platform for better data and code sharing.

Today, the Vendia team announced that it has raised a $5.1 million seed funding round, led by Neotribe’s Swaroop ‘Kittu’ Kolluri. Correlation Ventures, WestWave Capital, HWVP, Firebolt Ventures, Floodgate and FuturePerfect Ventures also participated in this oversubscribed round.

Seeing Wagner at the helm of a blockchain-centric startup isn’t exactly a surprise. After building Lambda at AWS, he spent some time as VP of engineering at Coinbase, which he left about a year ago to build Vendia.

“One day, Coinbase approached me and said, ‘Hey, maybe we could do for the financial system what you’ve been doing over there for the cloud system,’” he told me. “And so I got interested in that. We had some conversations. I ended up going to Coinbase and spent a little over a year there as the VP of Engineering, helping them to set the stage for some of that platform work and tripling the size of the team.” He noted that Coinbase may be one of the few companies where distributed ledgers are actually mission-critical to their business, yet even Coinbase had a hard time scaling its Ethereum fleet, for example, and there was no cloud-based service available to help it do so.

“The thing that came to me as I was working there was why don’t we bring these two things together? Nobody’s thinking about how would you build a distributed ledger or blockchain as if it were a cloud service, with all the things that we’ve learned over the course of the last 10 years building out the public cloud and learning how to do it at scale,” he said.

Wagner then joined forces with Rao, who spent a lot of time in her role at AWS talking to blockchain customers. One thing she noticed was that while it makes a lot of sense to use blockchain to establish trust in a public setting, that’s really not an issue for enterprise.

“After the 500th customer, it started to make sense,” she said. “These customers had made quite a bit of investment in IoT and edge devices. They were gathering massive amounts of data. They also made investments on the other side, with AI and ML and analytics. And they said, ‘Well, there’s a lot of data and I want to push all of this data through these intelligent systems. I need a mechanism to get this data.’” But the majority of that data often comes from third-party services. At the same time, most blockchain proofs of concept weren’t moving into any real production usage because the process was often far too complex, especially for enterprises that wanted to connect their systems to those of their partners.

“We are asking these partners to spin up Kubernetes clusters and install blockchain nodes. Why is that? That’s because for blockchain to bring trust into a system to ensure trust, you have to own your own data. And to own your own data, you need your own node. So we’re solving fundamentally the wrong problem,” she explained.

The first product Vendia is bringing to market is Vendia Share, a way for businesses to share data with partners (and across clouds) in real-time, all without giving up control over that data. As Wagner noted, businesses often want to share large data sets but they also want to ensure they can control who has access to that data. For those users, Vendia is essentially a virtual data lake with provenance tracking and tamper-proofing built in.

The company, which mostly raised this round after the coronavirus pandemic took hold in the U.S., is already working with a couple of design partners in multiple industries to test out its ideas, and plans to use the new funding to expand its engineering team to build out its tools.

“At Neotribe Ventures, we invest in breakthrough technologies that stretch the imagination and partner with companies that have category creation potential built upon a deep-tech platform,” said Neotribe founder and managing director Kolluri. “When we heard the Vendia story, it was a no-brainer for us. The size of the market for multiparty, multicloud data and code aggregation is enormous and only grows larger as companies capture every last bit of data. Vendia’s serverless-based technology offers benefits such as ease of experimentation, no operational heavy lifting and a pay-as-you-go pricing model, making it both very consumable and highly disruptive. Given both Tim and Shruthi’s backgrounds, we know we’ve found an ideal ‘Founder fit’ to solve this problem! We are very excited to be the lead investors and be a part of their journey.”

Powered by WPeMatico

Hasura is an open-source engine that can connect to PostgreSQL databases and microservices across hybrid- and multi-cloud environments and then automatically build a GraphQL API backend for them, making it easier for developers to build their own data-driven applications on top of this unified API. For a while now, the San Francisco-based startup has offered a paid version (Hasura Pro) with enterprise-ready reliability and security tools, in addition to its free open-source version. Today, the company launched Hasura Cloud, which takes the existing Pro version, adds a number of cloud-specific features like dynamic caching, auto-scaling and consumption-based pricing, and brings those together in a fully managed service.

At its core, Hasura’s service promises businesses the ability to bring together data from their various siloed databases and allow their developers to extract value from them through its GraphQL APIs. While GraphQL is still relatively new, the Facebook-incubated technology has quickly become extremely popular among many development teams.
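For readers who haven't used GraphQL, the sketch below shows roughly what consuming such an API looks like from the client side: a single HTTP POST carrying a query and its variables. The endpoint URL and the `users` table with its `id` and `name` columns are hypothetical, and the request is only constructed here, not sent.

```python
import json
import urllib.request

# Hypothetical query against an assumed "users" table.
USERS_QUERY = """
query ListUsers($limit: Int!) {
  users(limit: $limit) {
    id
    name
  }
}
"""


def build_graphql_request(endpoint, query, variables=None):
    """Build (but do not send) an HTTP POST request for a GraphQL endpoint."""
    payload = json.dumps({"query": query, "variables": variables or {}})
    return urllib.request.Request(
        endpoint,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# The URL below is a placeholder, not a real Hasura deployment.
request = build_graphql_request(
    "https://example.invalid/v1/graphql", USERS_QUERY, {"limit": 5}
)
```

Passing `request` to `urllib.request.urlopen` would send it. The client names exactly the fields it wants, which is a large part of why developers find GraphQL services easy to consume.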

Before founding the company and launching it in 2018, Hasura CEO and co-founder Tanmai Gopal worked for a consulting firm, and as with so many founders, that’s where he got the inspiration for the service.

“One of the key things that we noticed was that in the entire landscape, computing is becoming better, there are better frameworks, it is easier to deploy code, databases are becoming better and they kind of work everywhere,” he said. “But this kind of piece in the middle that is still a bottleneck and that there isn’t really a good solution for is this data access piece.” Almost by default, most companies host data in various SaaS services and databases — and now they were trying to figure out how to develop apps based on this for both internal and external consumers, noted Gopal. “This data distribution problem was this bottleneck where everybody would just spend massive amounts of time and money. And we invented a way of kind of automating that,” he explained.

The choice of GraphQL was also pretty straightforward, especially because GraphQL services are an easy way for developers to consume data (even though, as Gopal noted, it’s not always fun to build the GraphQL service itself). One thing worth noting about the core Hasura engine is that it is written in Haskell, a rather unusual choice.

The team tells me that Hasura is now nearing 50 million downloads for its free version and the company is seeing large and small users from across various industries relying on its products, which is probably no surprise, given that the company is trying to solve a pretty universal problem around data access and consumption.

Over the last few quarters, the team worked on launching its cloud service. “We’ve been thinking of the cloud in a very different way,” Gopal said. “It’s not your usual, take the open-source solution and host it, like a MongoDB Atlas or Confluent. What we’ve done is we’ve said, we’re going to re-engineer the open-source solution to be entirely multi-tenant and be completely pay-per pricing.”

Given this philosophy, it’s no surprise that Hasura’s pricing is purely based on how much data a user moves through the service. “It’s much closer to our value proposition,” Hasura co-founder and COO Rajoshi Ghosh said. “The value proposition is about data access. The big part of it is the fact that you’re getting this data from your databases. But the very interesting part is that this data can actually come from anywhere. This data could be in your third-party services, part of your data could be living in Stripe and it could be living in Salesforce, and it could be living in other services. […] We’re the data access infrastructure in that sense. And this pricing also — from a mental model perspective — makes it much clearer that that’s the value that we’re adding.”

Now, there are obviously plenty of other data-centric API services on the market, but Gopal argues that Hasura has an advantage because of its advanced caching for dynamic data, for example.

Overwolf, the in-game app-development toolkit and marketplace, has acquired Twitch’s CurseForge assets to provide a marketplace for modifications to complement its app development business.

Since its launch in 2009, developers have used Overwolf to build in-game applications for things like highlight clips, game-performance monitoring and metrics, and strategic analysis. Some of these developers have managed to earn anywhere between $100,000 and $1 million per year off revenue from app sales.

“CurseForge is the embodiment of how fostering a community of creators around games generates value for both players and game developers,” said Uri Marchand, Overwolf’s chief executive officer, in a statement. “As we move to onboard mods onto our platform, we’re positioning Overwolf as the industry standard for building in-game creations.”

It wouldn’t be a stretch to think of the company as the Roblox for applications for gamers, and now it’s moving deeper into the gaming world with the acquisition of CurseForge. As the company makes its pitch to current CurseForge users, hoping that mod developers will stick with the marketplace, it is offering to increase the revenue those developers make by 50%.

Overwolf said it already has around 30,000 developers who have built 90,000 mods and apps on its platform.

As a result of the acquisition, the CurseForge mod manager will move from being a Twitch client and become a standalone desktop app included in Overwolf’s suite of app offerings, and the acquisition won’t have any effect on existing tools and services.

“We’ve been deeply impressed by the level of passion and collaboration in the CurseForge modding community,” said Tim Aldridge, director of Engineering, Gaming Communities at Twitch. “CurseForge is an incredible asset for both creators and gamers. We are confident that the CurseForge community will thrive under Overwolf’s leadership, thanks to their commitment to empowering developers.”

The acquisition comes two years after Overwolf raised $16 million in a round of financing from Intel Capital, which had also partnered with the company on a $7 million fund to invest in app and mod developers for popular games.

“Overwolf’s position as a platform that serves millions of gamers, coupled with its partnership with top developers, means that Intel’s investment will convert into more value for PC gamers worldwide,” said John Bonini, VP and GM of VR, Esports and Gaming at Intel, in a statement at the time. “Intel has always prioritized gamers with high performance, industry-leading hardware. This round of investment in Overwolf advances Intel’s vision to deliver a holistic PC experience that will enhance the ways people interact with their favorite games on the software side as well.”

Other investors in the company include Liberty Technology Venture Capital, the investment arm of the media and telecommunications company, Liberty Media.

Conduct an online search and you’ll find close to one million websites offering their own definition of DevSecOps.

Why is it that domain experts and practitioners alike continue to iterate on analogous definitions? Likely, it’s because they’re all correct. DevSecOps is a union between culture, practice and tools providing continuous delivery to the end user. It’s an attitude; a commitment to baking security into the engineering process. It’s a practice; one that prioritizes processes that deliver functionality and speed without sacrificing security or test rigor. Finally, it’s a combination of automation tools; correctly pieced together, they increase business agility.

The goal of DevSecOps is to reach a future state where software defines everything. To get to this state, businesses must realize the DevSecOps mindset across every tech team, implement work processes that encourage cross-organizational collaboration, and leverage automation tools, such as those for infrastructure, configuration management and security. To make the process repeatable and scalable, businesses must plug their solution into CI/CD pipelines, which remove manual errors, standardize deployments and accelerate product iterations. Once this process is complete, everything becomes code. I refer to this destination as “IT-as-code.”

Whichever way you cut it, DevSecOps, as a culture, practice or combination of tools, is of increasing importance. Particularly these days, with more consumers and businesses leaning on digital, enterprises find themselves in the irrefutable position of delivering with speed and scale. Digital transformation that would’ve taken years, or at the very least would’ve undergone a period of premeditation, is now urgent and compressed into a matter of months.

Security and operations are a part of this new shift to IT, not just software delivery: A DevSecOps program succeeds when everyone, from security, to operations, to development, is not only part of the technical team but able to share information for repeatable use. Security, often seen as a blocker, will uphold the “secure by design” principle by automating security code testing and reviews, and educating engineers on secure design best practices. Operations, typically reactive to development, can troubleshoot incongruent merges between engineering and production proactively. However, currently, businesses are only familiar with utilizing automation for software delivery. They don’t know what automation means for security or operations. Figuring out how to apply the same methodology throughout the whole program and therefore the whole business is critical for success.
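As one illustration of what automating security code testing could look like, the sketch below scans source text for a couple of well-known credential formats and returns a nonzero exit code that a CI/CD pipeline step could use to block a merge. The pattern list, function names and exit-code convention are assumptions made for this example, and real scanners cover far more cases.

```python
import re

# A deliberately small, illustrative pattern set (not an exhaustive scanner).
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def scan_text(text):
    """Return a list of (line_number, pattern_name) findings for one file."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def security_gate(files):
    """Return 1 if any file contains a finding, else 0.

    `files` maps a file path to its contents; a pipeline step would
    treat the nonzero result as a failed check.
    """
    failed = False
    for path, text in files.items():
        for lineno, name in scan_text(text):
            print(f"{path}:{lineno}: possible {name}")
            failed = True
    return 1 if failed else 0
```

Running a check like this on every commit is what turns "secure by design" from a review-time conversation into a repeatable, automated step alongside the existing build and test stages.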