Instagram now demotes vaguely ‘inappropriate’ content

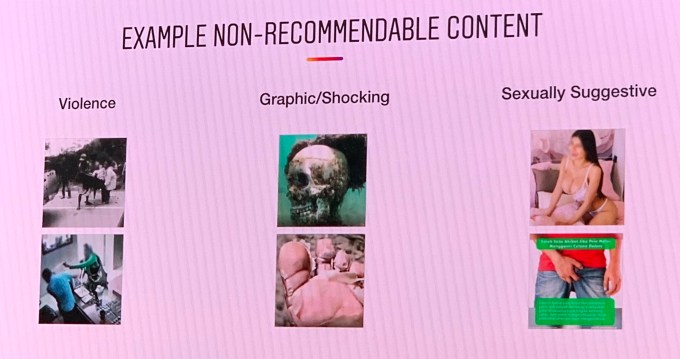

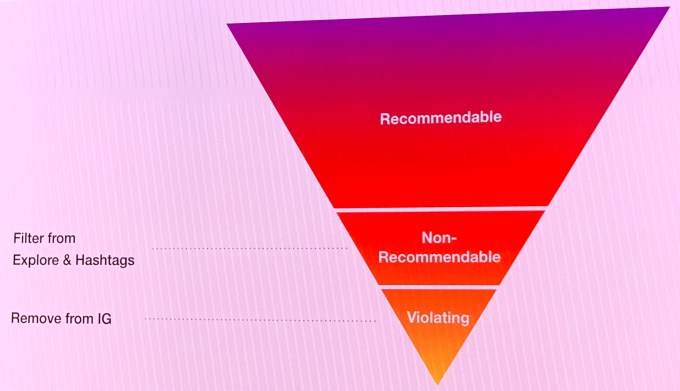

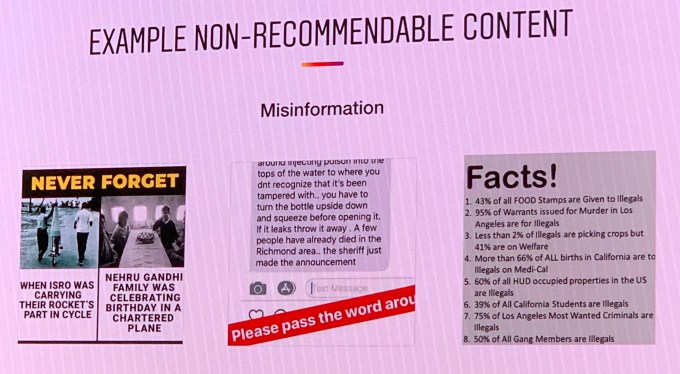

Instagram is home to plenty of scantily clad models and edgy memes that may get fewer views starting today. Now Instagram says, “We have begun reducing the spread of posts that are inappropriate but do not go against Instagram’s Community Guidelines.” That means if a post is sexually suggestive, but doesn’t depict a sex act or nudity, it could still get demoted. Similarly, if a meme doesn’t constitute hate speech or harassment, but is considered in bad taste, lewd, violent or hurtful, it could get fewer views.

Specifically, Instagram says, “this type of content may not appear for the broader community in Explore or hashtag pages,” which could severely hurt the ability of creators to gain new followers. The news came amidst a flood of “Integrity” announcements from Facebook to safeguard its family of apps revealed today at a press event at the company’s Menlo Park headquarters.

“We’ve started to use machine learning to determine if the actual media posted is eligible to be recommended to our community,” Instagram’s product lead for Discovery, Will Ruben, said. Instagram is now training its content moderators to label borderline content when they’re hunting down policy violations, and Instagram then uses those labels to train an algorithm to identify it.

These posts won’t be fully removed from the feed, and Instagram tells me for now the new policy won’t impact Instagram’s feed or Stories bar. But Facebook CEO Mark Zuckerberg’s November manifesto described the need to broadly reduce the reach of this “borderline content,” which on Facebook would mean being shown lower in News Feed. That policy could easily be expanded to Instagram in the future. That would likely reduce the ability of creators to reach their existing fans, which can impact their ability to monetize through sponsored posts or direct traffic to ways they make money like Patreon.

Facebook’s Henry Silverman explained that, “As content gets closer and closer to the line of our Community Standards at which point we’d remove it, it actually gets more and more engagement. It’s not something unique to Facebook but inherent in human nature.” The borderline content policy aims to counteract this incentive to toe the policy line. “Just because something is allowed on one of our apps doesn’t mean it should show up at the top of News Feed or that it should be recommended or that it should be able to be advertised,” said Facebook’s head of News Feed Integrity, Tessa Lyons.

This all makes sense when it comes to clickbait, false news and harassment, which no one wants on Facebook or Instagram. But when it comes to sexualized but not explicit content that has long been uninhibited and in fact popular on Instagram, or memes or jokes that might offend some people despite not being abusive, this is a significant step up of censorship by Facebook and Instagram.

Creators currently have no guidelines about what constitutes borderline content; there’s nothing in Instagram’s rules or terms of service that even mentions non-recommendable content or what qualifies. The only information Instagram has provided was what it shared at today’s event. The company specified that violent, graphic/shocking, sexually suggestive, misinformation and spam content can be deemed “non-recommendable” and therefore won’t appear on Explore or hashtag pages.

[Update: After we published, Instagram posted to its Help Center a brief note about its borderline content policy, but with no visual examples, mentions of impacted categories other than sexually suggestive content, or indications of what qualifies content as “inappropriate.” So officially, it’s still leaving users in the dark.]

Instagram denied one creator’s claim that the app reduced their feed and Stories reach after one of their posts, which did violate the content policy, was taken down.

One female creator with around a half-million followers likened receiving a two-week demotion that massively reduced their content’s reach to Instagram defecating on them. “It just makes it like, ‘Hey, how about we just show your photo to like 3 of your followers? Is that good for you?’ . . . I know this sounds kind of tin-foil hatty but . . . when you get a post taken down or a story, you can set a timer on your phone for two weeks to the godd*mn f*cking minute and when that timer goes off you’ll see an immediate change in your engagement. They put you back on the Explore page and you start getting followers.”

As you can see, creators are pretty passionate about Instagram demoting their reach. Instagram’s Will Ruben said regarding the feed/Stories reach reduction: “No, that’s not happening. We distinguish between feed and surfaces where you’ve taken the choice to follow somebody, and Explore and hashtag pages where Instagram is recommending content to people.”

The questions now are whether borderline content demotions are ever extended to Instagram’s feed and Stories, and how content is classified as recommendable, non-recommendable or violating. With artificial intelligence involved, this could turn into another situation where Facebook is seen as shirking its responsibilities in favor of algorithmic efficiency — but this time in removing or demoting too much content rather than too little.
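To make the distinction concrete, here is a minimal sketch of the policy as described at the event: three content classes and two kinds of surfaces. The names `Label`, `is_eligible` and the surface strings are hypothetical, chosen for illustration; nothing here reflects Instagram’s actual code or classifier.

```python
from enum import Enum


class Label(Enum):
    """The three classes named in the article (identifiers are hypothetical)."""
    RECOMMENDABLE = "recommendable"
    NON_RECOMMENDABLE = "non-recommendable"
    VIOLATING = "violating"


# Surfaces where Instagram recommends content to people.
RECOMMENDATION_SURFACES = {"explore", "hashtag"}
# Surfaces driven by the accounts a user has chosen to follow.
FOLLOW_SURFACES = {"feed", "stories"}


def is_eligible(label: Label, surface: str) -> bool:
    """Return True if a post with this label may appear on the given surface."""
    if surface not in RECOMMENDATION_SURFACES | FOLLOW_SURFACES:
        raise ValueError(f"unknown surface: {surface}")
    if label is Label.VIOLATING:
        return False  # violating posts are taken down everywhere
    if label is Label.NON_RECOMMENDABLE:
        # Borderline posts stay in feed and Stories but are not recommended.
        return surface in FOLLOW_SURFACES
    return True


if __name__ == "__main__":
    for label in Label:
        for surface in ("feed", "stories", "explore", "hashtag"):
            print(label.value, surface, is_eligible(label, surface))
```

If demotions were ever extended to feed and Stories, the only change in this sketch would be the branch for `NON_RECOMMENDABLE`, which is exactly the open question creators are asking about.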

Given the lack of clear policies to point to, the subjective nature of deciding what’s offensive but not abusive, Instagram’s 1 billion user scale and its nine years of allowing this content, there are sure to be complaints and debates about fair and consistent enforcement.

Powered by WPeMatico

The definitive Niantic reading guide

In just a few years, Niantic has evolved from internal side project into an independent industry trailblazer. Having reached tremendous scale in such a short period of time, Niantic acts as a poignant crash course for founders and company builders. As our EC-1 deep-dive into the company shows, lessons from the team’s experience building Niantic’s product offering remain just as fresh as painful flashbacks to the problems encountered along the way.

As we did for our Patreon EC-1, we’ve pored through every analysis we could find on Niantic and have compiled a supplemental list of resources and readings that are particularly useful for getting up to speed on the company.

Reading time for this article is about 9.5 minutes. It is part of the Extra Crunch EC-1 on Niantic. Feature illustration by Bryce Durbin / TechCrunch.

I. Background: The Story of Niantic

Google-Incubated Niantic, Maker of Ingress, Stepping Out on Its Own | August 2015 | In August of 2015, Niantic announced that it would spin out from Google and become an independent company. As discussed in WSJ’s coverage of the news, Niantic looked at the spin out as a way to accelerate growth and collaborate with the broader entertainment ecosystem.


The right way to do AI in security

Artificial intelligence applied to information security can engender images of a benevolent Skynet, sagely analyzing more data than imaginable and making decisions at lightspeed, saving organizations from devastating attacks. In such a world, humans are barely needed to run security programs, their jobs largely automated out of existence, relegating them to a role as the button-pusher on particularly critical changes proposed by the otherwise omnipotent AI.

Such a vision is still in the realm of science fiction. AI in information security is more like an eager, callow puppy attempting to learn new tricks – minus the disappointment written on their faces when they consistently fail. No one’s job is in danger of being replaced by security AI; if anything, a larger staff is required to ensure security AI stays firmly leashed.

Arguably, AI’s highest use case currently is to add futuristic sheen to traditional security tools, rebranding timeworn approaches as trailblazing sorcery that will revolutionize enterprise cybersecurity as we know it. The current hype cycle for AI appears to be the roaring, ferocious crest at the end of a decade that began with bubbly excitement around the promise of “big data” in information security.

But what lies beneath the marketing gloss and quixotic lust for an AI revolution in security? How did AI ascend to supplant the lustrous zest around machine learning (“ML”) that dominated headlines in recent years? Where is there true potential to enrich information security strategy for the better – and where is it simply an entrancing distraction from more useful goals? And, naturally, how will attackers plot to circumvent security AI to continue their nefarious schemes?

How did AI grow out of this stony rubbish?

The year AI debuted as the “It Girl” in information security was 2017. The year prior, MIT completed their study showing “human-in-the-loop” AI out-performed AI and humans individually in attack detection. Likewise, DARPA conducted the Cyber Grand Challenge, a battle testing AI systems’ offensive and defensive capabilities. Until this point, security AI was imprisoned in the contrived halls of academia and government. Yet, the history of two vendors exhibits how enthusiasm surrounding security AI was driven more by growth marketing than user needs.


Daily Crunch: Meet the new CEO of Google Cloud

The Daily Crunch is TechCrunch’s roundup of our biggest and most important stories. If you’d like to get this delivered to your inbox every day at around 9am Pacific, you can subscribe here.

1. Google Cloud’s new CEO on gaining customers, startups, supporting open source and more

Thomas Kurian, who came to Google Cloud after 22 years at Oracle, said the team is rolling out new contracts and plans to simplify pricing.

Most importantly, though, Google will go on a hiring spree: “A number of customers told us ‘we just need more people from you to help us.’ So that’s what we’ll do.”

2. Walmart to expand in-store tech, including Pickup Towers for online orders and robots

Walmart is doubling down on technology in its brick-and-mortar stores in an effort to better compete with Amazon. The retailer says it will add to its U.S. stores 1,500 new autonomous floor cleaners, 300 more shelf scanners, 1,200 more FAST Unloaders and 900 new Pickup Towers.

3. Udacity restructures operations, lays off 20 percent of its workforce

The objective is to do more than simply keep the company afloat, according to co-founder Sebastian Thrun. Instead, Thrun says these measures will allow Udacity to move from a money-losing operation to a “break-even or profitable company by next quarter and then moving forward.”

Photo By Bill Clark/CQ Roll Call via Getty Images

4. The government is about to permanently bar the IRS from creating a free electronic filing system

That’s right, members of Congress are working to prohibit a branch of the federal government from providing a much-needed service that would make the lives of all of their constituents much easier.

5. Here’s the first image of a black hole

Say hello to the black hole deep inside Messier 87, a galaxy located in the Virgo cluster some 55 million light years away.

6. Movo grabs $22.5M to get more cities in LatAm scooting

The Spanish startup targets cities in its home market and in markets across Latin America, offering last-mile mobility via rentable electric scooters.

7. Uber, Lyft and the challenge of transportation startup profits

An article arguing that everything you know about the cost of transportation is wrong. (Extra Crunch membership required.)


Google Cloud takes aim at verticals starting with new set of tools for retailers

Google might not be Adobe or Salesforce, but it has a particular set of skills, which fit nicely with retailer requirements and can over time help improve the customer experience, even if that means just simply making sure the website or app is running, even at peak demand. Today, at Google Cloud Next, the company showed off a package of solutions as an example of its vertical strategy.

Just this morning, the company announced a new phase of its partnership with Salesforce, where it’s using its contact center AI tools and chatbot technology in combination with Salesforce data to produce a product that plays to each company’s strengths and helps improve the customer service experience.

But Google didn’t stop with a high profile partnership. It has a few tricks of its own for retailers, starting with the classic retailer Black Friday kind of scenario. The easiest way to explain the value of cloud scaling is to look at a retail event like Black Friday when you know servers are going to be bombarded with traffic.

The cloud has always been good at scaling up for those kinds of events, but it’s not perfect, as Amazon learned last year when it slowed down on Prime Day. Google wants to help companies avoid those kinds of disasters because a slow or down website translates into lots of lost revenue.

The company offers eCommerce Hosting, designed specifically for online retailers, and it is offering a special premium program, so retailers get “white glove treatment with technical architecture reviews and peak season operations support…” according to the company. In other words, it wants to help these companies avoid disastrous, money-losing results when a site goes down due to demand.

In addition, Google is offering real-time inventory tools, so customers and clerks can know exactly what stock is on hand. It’s also applying its AI expertise here, with tools like its Contact Center AI solution to help deliver better customer service experiences and Cloud Vision technology that lets customers point their cameras at a product and see similar or related products. Google also offers other tools, including Recommendations AI, which tells shoppers that if they bought these things, they might like this too.

The company counts retail customers like Shopify and Ikea. In addition, the company is working with SI partners like Accenture, CapGemini and Deloitte and software partners like Salesforce, SAP and Tableau.

All of this is about building a set of services designed specifically for a given vertical to help that industry take advantage of the cloud. It’s one more way for Google Cloud to bring solutions to market and help increase its market share.


Google Cloud announces Traffic Director, a networking management tool for service mesh

With each new set of technologies comes a new set of terms. In the containerized world, applications are broken down into discrete pieces, or microservices. As these services proliferate, they create a service mesh: a network of services and the interactions that take place among them. Each new technology like this requires a management layer, especially so network administrators can understand and control the new concept, in this case the service mesh.

Today at Google Cloud Next, the company announced the Beta of Traffic Director for open service mesh, specifically to help network managers understand what’s happening in their service mesh.

“To accelerate adoption and reduce the toil of managing service mesh, we’re excited to introduce Traffic Director, our new GCP-managed, enterprise-ready configuration and traffic control plane for service mesh that enables global resiliency, intelligent load balancing, and advanced traffic control capabilities like canary deployments,” Brad Calder, VP of engineering for technical infrastructure at Google Cloud, wrote in a blog post introducing the tool.

Traffic Director provides a way for operations teams to deploy a service mesh on their networks and have more control over how it works and interacts with the rest of the system. The tool works with virtual machines, using Compute Engine on GCP, or in a containerized approach, using GKE on GCP.
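For readers unfamiliar with the canary deployments Calder mentions, the underlying idea is weighted traffic splitting: send a small share of requests to a new version while the rest go to the stable one. The sketch below is a generic illustration of that idea in plain Python, not Traffic Director’s API or configuration format; the backend names and the 95/5 split are assumptions made up for the example.

```python
import random
from collections import Counter


def pick_backend(weights: dict, rng: random.Random) -> str:
    """Pick a backend name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights: dict, requests: int, seed: int = 0) -> Counter:
    """Route `requests` simulated requests and count hits per backend."""
    rng = random.Random(seed)  # seeded so a run is repeatable
    return Counter(pick_backend(weights, rng) for _ in range(requests))


# Hypothetical split: most traffic to the stable version, a little to the canary.
CANARY_SPLIT = {"stable-v1": 95, "canary-v2": 5}

if __name__ == "__main__":
    counts = simulate(CANARY_SPLIT, 10_000)
    for name, hits in counts.items():
        print(f"{name}: {hits} requests")
```

In a real mesh the split is applied by the proxies that sit next to each service, and a control plane pushes the weights to them; raising the canary weight step by step is what turns a canary into a full rollout.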

The product is just launching into Beta today, but the road map includes additional security features and support for hybrid environments, and eventually integration with Anthos, the hybrid management tool the company introduced yesterday at Google Cloud Next.


Google launches new security tools for G Suite users

Google today launched a number of security updates to G Suite, its online productivity and collaboration platform. The focus of these updates is on protecting a company’s data inside G Suite, both through controlling who can access it and through providing new tools for preventing phishing and malware attacks.

To do this, Google is announcing, for example, the beta launch of its advanced phishing and malware protection. This is meant to help admins protect users from malicious attachments and inbound email spoofing, among other things.

The most interesting feature here, though, is the new security sandbox, another beta feature for G Suite enterprise users. The sandbox allows admins to add an extra layer of protection on top of the standard attachment scans for known viruses and malware. Those existing tools can’t fully protect you against zero-day ransomware or sophisticated malware, though. So instead of just letting you open the attachment, this tool executes the attachment in a sandbox environment to check if there are any security issues.

With today’s launch, Google is also announcing the beta of its new security and alert center for admins. These tools are meant to create a single service that features best practice recommendations, but also a unified notifications center and tools to triage and take actions against threats, all with a focus on collaboration among admins. Also new is a security investigation tool that mostly focuses on allowing admins to create automated workflows for sending notifications or assigning ownership to security investigations.


Google launches its coldest storage service yet

At its Cloud Next conference, Google today launched a new archival cold storage service. This new service, which doesn’t seem to have a fancy name, will complement the company’s existing Nearline and Coldline services for storing vast amounts of infrequently used data at a low cost.

The new archive class takes this one step further, though. It’s cheap, with prices starting at $0.0012 per gigabyte and month. That’s $1.23 per terabyte and month.

The new service will become available later this year.

What makes Google cold storage different from the likes of AWS S3 Glacier, for example, is that the data is immediately available, with millisecond latency. Glacier and similar services typically make you wait a significant amount of time before the data can be used. Indeed, in a thinly veiled swipe at AWS, Google directors of product management Dominic Preuss and Dave Nettleton note that “unlike tape and other glacially slow equivalents, we have taken an approach that eliminates the need for a separate retrieval process and provides immediate, low-latency access to your content.”

To put that into context, a gigabyte stored in AWS Glacier will set you back $0.004 per month. AWS offers another option, though: AWS Glacier Deep Archive. This service recently went live, at the cost of $0.00099 per gigabyte and month, though with significantly longer retrieval times.
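The per-terabyte figures follow directly from the per-gigabyte prices quoted in this post. As a quick check, the sketch below converts each one, assuming 1,024 gigabytes per terabyte (the conversion that reproduces the $1.23 figure); the function and variable names are our own.

```python
# Per-gigabyte monthly prices quoted in the article, in USD.
PRICE_PER_GB_MONTH = {
    "Google archive class": 0.0012,
    "AWS Glacier": 0.004,
    "AWS Glacier Deep Archive": 0.00099,
}

# Assumption: a binary terabyte (1,024 GB), which matches the $1.23 figure.
GB_PER_TB = 1024


def monthly_cost_per_tb(price_per_gb: float) -> float:
    """Return the monthly cost in USD of storing one terabyte."""
    return price_per_gb * GB_PER_TB


def cost_table() -> dict:
    """Map each service to its monthly per-terabyte cost, rounded to cents."""
    return {
        name: round(monthly_cost_per_tb(price), 2)
        for name, price in PRICE_PER_GB_MONTH.items()
    }


if __name__ == "__main__":
    for name, cost in cost_table().items():
        print(f"{name}: ${cost:.2f} per TB per month")
```

On these list prices, Google’s archive class works out to roughly $1.23 per terabyte per month, against about $4.10 for Glacier and about $1.01 for Glacier Deep Archive. Retrieval fees and minimum storage durations are not included, and those are where the services differ most.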

Google’s new object storage service uses the same APIs as Google’s other storage classes and Google promises that the data is always redundantly stored across availability zones, with eleven 9’s of annual durability.

In a press conference ahead of today’s official announcement, Preuss noted that this service is mostly a replacement for on-premise tape backups, but now that many enterprises try to keep as much data as they can to later train their machine learning models, for example, the amount of fresh data that needs to be stored for the long term continues to increase rapidly, too.

With low latency and the promise of high availability, there obviously has to be a drawback here, otherwise Google wouldn’t (and couldn’t) offer this service at this price. “Just like when you’re going from our standard [storage] class to Nearline or Coldline, there’s a committed amount of time that you have to remain in that class,” Preuss explained. “So basically, to get a lower price you are committing to keep the data in the Google Cloud Storage bucket for a period of time.”

Correction: a previous version of the post said that AWS Glacier Deep Archive wasn’t available yet when it actually went live two weeks ago. We changed the post to reflect this.


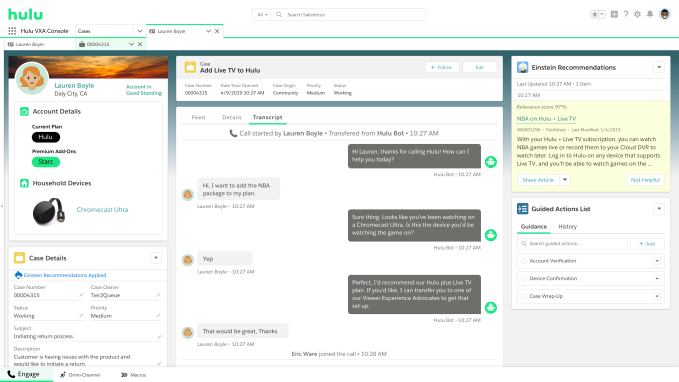

Salesforce and Google want to build a smarter customer service experience

Anyone who has dealt with bad customer service has felt frustration with the lack of basic understanding of who you are as a customer and what you need. Google and Salesforce feel your pain, and today the two companies expanded their partnership to try and create a smarter customer service experience.

The goal is to combine Salesforce’s customer knowledge with Google’s customer service-related AI products and build on the strengths of the combined solution to produce a better customer service experience, whether that’s with an agent or a chatbot.

Bill Patterson, executive vice president for Salesforce Service Cloud, gets that bad customer service is a source of vexation for many consumers, but his goal is to change that. Patterson points out that Google and Salesforce have been working together since 2017, but mostly on sales- and marketing-related projects. Today’s announcement marks the first time they are working on a customer service solution together.

For starters, the partnership is looking at the human customer service agent experience. “The combination of Google Contact Center AI, which highlights the language and the stream of intelligence that comes through that interaction, combined with the customer data and the business process information that Salesforce has, really makes that an incredibly enriching experience for agents,” Patterson explained.

The Google software will understand voice and intent, and have access to a set of external information like weather or news events that might be having an impact on the customers, while Salesforce looks at the hard data it stores about the customer such as who they are, their buying history and previous interactions.

The companies believe that by bringing these two types of data together, they can surface relevant information in real time to help the agent give the best answer. It may be the best article or it could be just suggesting that a shipment might be late because of bad weather in the area.

Customer service agent screen showing information surfaced by intelligent layers in Google and Salesforce

The second part of the announcement involves improving the chatbot experience. We’ve all dealt with rigid chatbots that can’t understand our requests. Sure, a chatbot can sometimes channel your call to the right person, but if you have any question outside the most basic ones, it tends to get stuck, while you scream “Operator! I said OPERATOR!” (Or at least I do.)

Google and Salesforce are hoping to change that by bringing together Einstein, Salesforce’s artificial intelligence layer, and Google Natural Language Understanding (NLU) in its Google Dialogflow product to better understand the request, monitor the sentiment and direct you to a human operator before you get frustrated.

Patterson’s department, which is on a $3.8 billion run rate, is poised to become the largest revenue producer in the Salesforce family by the end of the year. The company itself is on a run rate over $14 billion.

“So many organizations just struggle with primitives of great customer service and experience. We have a lot of passion for making everyday interaction better with agents,” he said. Maybe this partnership will bring some much needed improvement.


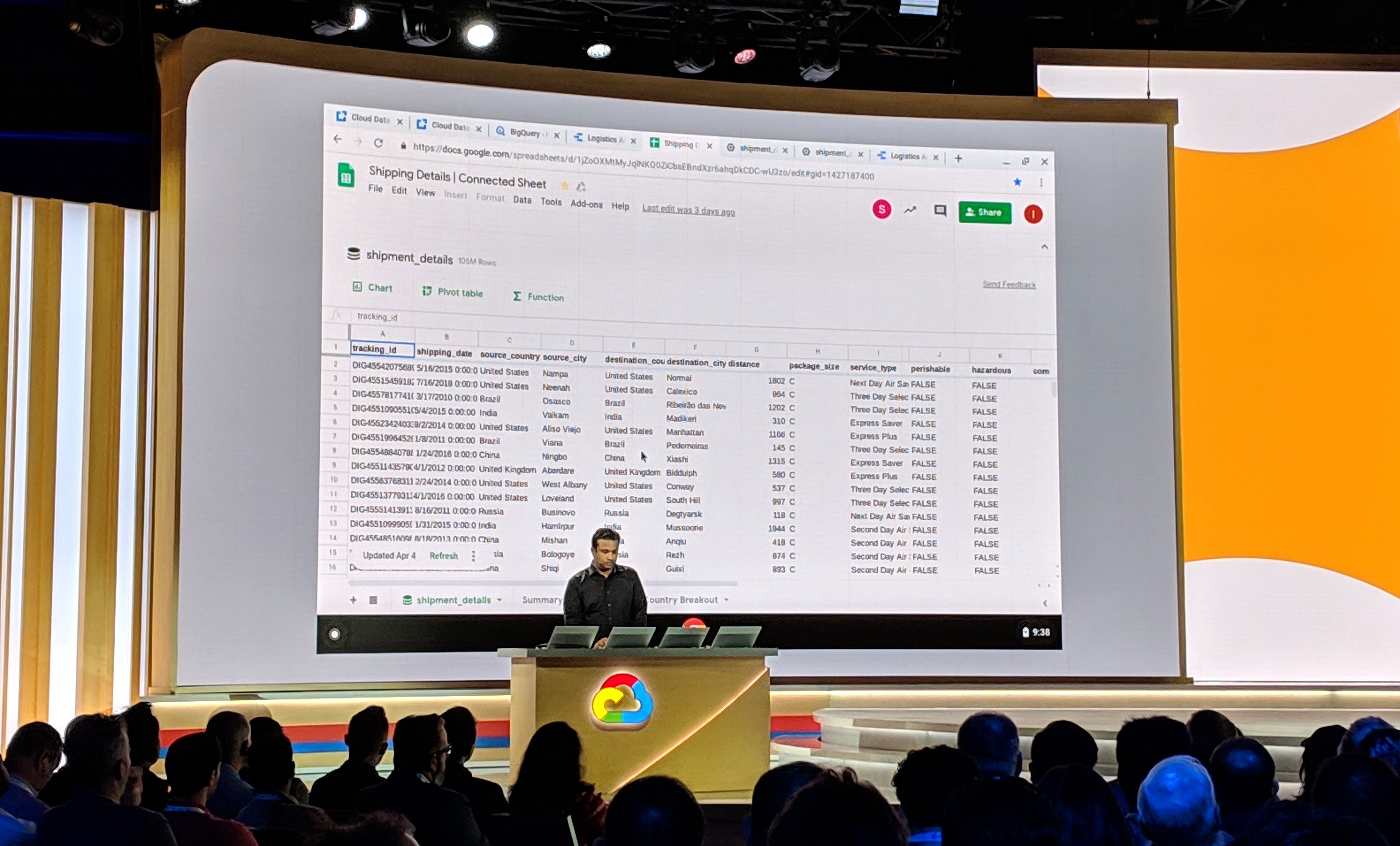

Google makes the power of BigQuery available in Sheets

Google today announced a new service that makes the power of BigQuery, its analytics data warehouse, available in Sheets, its web-based spreadsheet tool. These so-called “connected sheets” face none of the usual limitations of Google’s regular spreadsheets, meaning there are no row limits, for example. Instead, users can take a massive data set from BigQuery, with potentially billions of rows, and turn it into a pivot table.

The idea here is to enable virtually anybody to make use of all the data that is stored in BigQuery. That’s because from the user’s perspective, this new kind of table is simply a spreadsheet, with all of the usual functionality you’d expect from a spreadsheet. With this, Sheets becomes a front end for BigQuery, and virtually any business user knows how to use a spreadsheet.

This also means you can use all of the usual visualization tools in Sheets and share your data with others in your organization.

“Connected sheets are helping us democratize data,” says Nikunj Shanti, chief product officer at AirAsia. “Analysts and business users are able to create pivots or charts, leveraging their existing skills on massive data sets, without needing SQL. This direct access to the underlying data in BigQuery provides access to the most granular data available for analysis. It’s a game changer for AirAsia.”

The beta of connected sheets should go live within the next few months.

In this context, it’s worth mentioning that Google also today announced the beta launch of BigQuery BI Engine, a new service for business users that connects BigQuery with Google Data Studio for building interactive dashboards and reports. This service, too, is available in Google Data Studio today and will also become available through third-party services like Tableau and Looker in the next few months.

“With BigQuery BI Engine behind the scenes, we’re able to gain deep insights very quickly in Data Studio,” says Rolf Seegelken, senior data analyst at Zalando. “The performance of even our most computationally intensive dashboards has sped up to the point where response times are now less than a second. Nothing beats ‘instant’ in today’s age, to keep our teams engaged in the data!”
